```

├── .gitattributes

├── .github/

├── ISSUE_TEMPLATE/

├── bug_report.md

├── feature_request.md

├── workflows/

├── python-publish.yaml

├── tests.yml

├── .gitignore

├── .pre-commit-config.yaml

├── CONTRIBUTING.md

├── LICENSE

├── README.md

├── img/

├── top-10-customers.png

├── vanna-readme-diagram.png

├── papers/

├── ai-sql-accuracy-2023-08-17.md

├── img/

├── accuracy-by-llm.png

├── accuracy-using-contextual-examples.png

├── accuracy-using-schema-only.png

├── accuracy-using-static-examples.png

├── chat-gpt-question.png

├── chatgpt-results.png

├── framework-for-sql-generation.png

├── question-flow.png

├── schema-only.png

├── sql-error.png

├── summary-table.png

├── summary.png

├── test-architecture.png

├── test-levers.png

├── using-contextually-relevant-examples.png

├── using-sql-examples.png

├── pyproject.toml

├── setup.cfg

├── src/

├── .editorconfig

├── vanna/

├── ZhipuAI/

├── ZhipuAI_Chat.py

├── ZhipuAI_embeddings.py

├── __init__.py

├── __init__.py

├── advanced/

├── __init__.py

├── anthropic/

├── __init__.py

├── anthropic_chat.py

├── azuresearch/

├── __init__.py

├── azuresearch_vector.py

├── base/

├── __init__.py

├── base.py

├── bedrock/

├── __init__.py

├── bedrock_converse.py

├── chromadb/

├── __init__.py

├── chromadb_vector.py

├── cohere/

├── __init__.py

├── cohere_chat.py

├── cohere_embeddings.py

├── deepseek/

├── __init__.py

├── deepseek_chat.py

├── exceptions/

├── __init__.py

├── faiss/

├── __init__.py

├── faiss.py

├── flask/

├── __init__.py

├── assets.py

```

## /.gitattributes

```gitattributes path="/.gitattributes"

*.ipynb linguist-detectable=false

```

## /.github/ISSUE_TEMPLATE/bug_report.md

---

name: Bug report

about: Create a report to help us improve

title: ''

labels: ["bug"]

assignees: ''

---

**Describe the bug**

A clear and concise description of what the bug is.

**To Reproduce**

Steps to reproduce the behavior:

1. Go to '...'

2. Click on '....'

3. Scroll down to '....'

4. See error

**Expected behavior**

A clear and concise description of what you expected to happen.

**Error logs/Screenshots**

If applicable, add logs/screenshots to give more information about the issue.

**Desktop (please complete the following information where):**

- OS: [e.g. Ubuntu]

- Version: [e.g. 20.04]

- Python: [3.9]

- Vanna: [2.8.0]

**Additional context**

Add any other context about the problem here.

## /.github/ISSUE_TEMPLATE/feature_request.md

---

name: Feature request

about: Suggest an idea for this project

title: ''

labels: ["enhancements"]

assignees: ''

---

**Is your feature request related to a problem? Please describe.**

A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**

A clear and concise description of what you want to happen.

**Describe alternatives you've considered**

A clear and concise description of any alternative solutions or features you've considered.

**Additional context**

Add any other context or screenshots about the feature request here.

## /.github/workflows/python-publish.yaml

```yaml path="/.github/workflows/python-publish.yaml"

# This workflow will upload a Python Package using Twine when a release is created

# For more information see: https://docs.github.com/en/actions/automating-builds-and-tests/building-and-testing-python#publishing-to-package-registries

# This workflow uses actions that are not certified by GitHub.

# They are provided by a third-party and are governed by

# separate terms of service, privacy policy, and support

# documentation.

name: Upload Python Package

on:

release:

types: [published]

permissions:

contents: read

jobs:

deploy:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v3

- name: Set up Python

uses: actions/setup-python@v3

with:

python-version: '3.x'

- name: Install dependencies

run: |

python -m pip install --upgrade pip

pip install build

- name: Build package

run: python -m build

- name: Publish package

uses: pypa/gh-action-pypi-publish@27b31702a0e7fc50959f5ad993c78deac1bdfc29

with:

user: __token__

password: ${{ secrets.PYPI_API_TOKEN }}

```

## /.github/workflows/tests.yml

```yml path="/.github/workflows/tests.yml"

name: Basic Integration Tests

on:

push:

branches:

- main

permissions:

contents: read

jobs:

build:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v4

- name: Set up Python 3.10

uses: actions/setup-python@v5

with:

python-version: "3.10"

- name: Install pip

run: |

python -m pip install --upgrade pip

pip install tox

- name: Run tests

env:

PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION: python

VANNA_API_KEY: ${{ secrets.VANNA_API_KEY }}

OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}

MISTRAL_API_KEY: ${{ secrets.MISTRAL_API_KEY }}

ANTHROPIC_API_KEY: ${{ secrets.ANTHROPIC_API_KEY }}

GEMINI_API_KEY: ${{ secrets.GEMINI_API_KEY }}

SNOWFLAKE_ACCOUNT: ${{ secrets.SNOWFLAKE_ACCOUNT }}

SNOWFLAKE_USERNAME: ${{ secrets.SNOWFLAKE_USERNAME }}

SNOWFLAKE_PASSWORD: ${{ secrets.SNOWFLAKE_PASSWORD }}

run: tox -e py310

```

## /.gitignore

```gitignore path="/.gitignore"

build

**.egg-info

venv

.DS_Store

notebooks/*

tests/__pycache__

__pycache__/

.idea

.coverage

docs/*.html

.ipynb_checkpoints/

.tox/

notebooks/chroma.sqlite3

dist

.env

*.sqlite

htmlcov

chroma.sqlite3

*.bin

.coverage.*

milvus.db

.milvus.db.lock

```

## /.pre-commit-config.yaml

```yaml path="/.pre-commit-config.yaml"

exclude: 'docs|node_modules|migrations|.git|.tox|assets.py'

default_stages: [ commit ]

fail_fast: true

repos:

- repo: https://github.com/pre-commit/pre-commit-hooks

rev: v3.2.0

hooks:

- id: trailing-whitespace

- id: end-of-file-fixer

- id: check-merge-conflict

- id: debug-statements

- id: mixed-line-ending

- repo: https://github.com/pycqa/isort

rev: 5.12.0

hooks:

- id: isort

args: [ "--profile", "black", "--filter-files" ]

```

## /CONTRIBUTING.md

# Contributing

## Setup

```bash

git clone https://github.com/vanna-ai/vanna.git

cd vanna/

python3 -m venv venv

source venv/bin/activate

# install package in editable mode

pip install -e '.[all]' tox pre-commit

# Setup pre-commit hooks

pre-commit install

# List dev targets

tox list

# Run tests

tox -e py310

```

## Running the test on a Mac

```bash

tox -e mac

```

## Do this before you submit a PR:

Find the most relevant sample notebook and then replace the install command with:

```bash

%pip install 'git+https://github.com/vanna-ai/vanna@your-branch#egg=vanna[chromadb,snowflake,openai]'

```

Run the necessary cells and verify that it works as expected in a real-world scenario.

## /LICENSE

``` path="/LICENSE"

MIT License

Copyright (c) 2024 Vanna.AI

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

in the Software without restriction, including without limitation the rights

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

copies of the Software, and to permit persons to whom the Software is

furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all

copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

SOFTWARE.

```

## /README.md

| GitHub | PyPI | Documentation | Gurubase |

| ------ | ---- | ------------- | -------- |

| [](https://github.com/vanna-ai/vanna) | [](https://pypi.org/project/vanna/) | [](https://vanna.ai/docs/) | [](https://gurubase.io/g/vanna) |

# Vanna

Vanna is an MIT-licensed open-source Python RAG (Retrieval-Augmented Generation) framework for SQL generation and related functionality.

https://github.com/vanna-ai/vanna/assets/7146154/1901f47a-515d-4982-af50-f12761a3b2ce

## How Vanna works

Vanna works in two easy steps - train a RAG "model" on your data, and then ask questions which will return SQL queries that can be set up to automatically run on your database.

1. **Train a RAG "model" on your data**.

2. **Ask questions**.

If you don't know what RAG is, don't worry -- you don't need to know how this works under the hood to use it. You just need to know that you "train" a model, which stores some metadata and then use it to "ask" questions.

See the [base class](https://github.com/vanna-ai/vanna/blob/main/src/vanna/base/base.py) for more details on how this works under the hood.

## User Interfaces

These are some of the user interfaces that we've built using Vanna. You can use these as-is or as a starting point for your own custom interface.

- [Jupyter Notebook](https://vanna.ai/docs/postgres-openai-vanna-vannadb/)

- [vanna-ai/vanna-streamlit](https://github.com/vanna-ai/vanna-streamlit)

- [vanna-ai/vanna-flask](https://github.com/vanna-ai/vanna-flask)

- [vanna-ai/vanna-slack](https://github.com/vanna-ai/vanna-slack)

## Supported LLMs

- [OpenAI](https://github.com/vanna-ai/vanna/tree/main/src/vanna/openai)

- [Anthropic](https://github.com/vanna-ai/vanna/tree/main/src/vanna/anthropic)

- [Gemini](https://github.com/vanna-ai/vanna/blob/main/src/vanna/google/gemini_chat.py)

- [HuggingFace](https://github.com/vanna-ai/vanna/blob/main/src/vanna/hf/hf.py)

- [AWS Bedrock](https://github.com/vanna-ai/vanna/tree/main/src/vanna/bedrock)

- [Ollama](https://github.com/vanna-ai/vanna/tree/main/src/vanna/ollama)

- [Qianwen](https://github.com/vanna-ai/vanna/tree/main/src/vanna/qianwen)

- [Qianfan](https://github.com/vanna-ai/vanna/tree/main/src/vanna/qianfan)

- [Zhipu](https://github.com/vanna-ai/vanna/tree/main/src/vanna/ZhipuAI)

## Supported VectorStores

- [AzureSearch](https://github.com/vanna-ai/vanna/tree/main/src/vanna/azuresearch)

- [Opensearch](https://github.com/vanna-ai/vanna/tree/main/src/vanna/opensearch)

- [PgVector](https://github.com/vanna-ai/vanna/tree/main/src/vanna/pgvector)

- [PineCone](https://github.com/vanna-ai/vanna/tree/main/src/vanna/pinecone)

- [ChromaDB](https://github.com/vanna-ai/vanna/tree/main/src/vanna/chromadb)

- [FAISS](https://github.com/vanna-ai/vanna/tree/main/src/vanna/faiss)

- [Marqo](https://github.com/vanna-ai/vanna/tree/main/src/vanna/marqo)

- [Milvus](https://github.com/vanna-ai/vanna/tree/main/src/vanna/milvus)

- [Qdrant](https://github.com/vanna-ai/vanna/tree/main/src/vanna/qdrant)

- [Weaviate](https://github.com/vanna-ai/vanna/tree/main/src/vanna/weaviate)

- [Oracle](https://github.com/vanna-ai/vanna/tree/main/src/vanna/oracle)

## Supported Databases

- [PostgreSQL](https://www.postgresql.org/)

- [MySQL](https://www.mysql.com/)

- [PrestoDB](https://prestodb.io/)

- [Apache Hive](https://hive.apache.org/)

- [ClickHouse](https://clickhouse.com/)

- [Snowflake](https://www.snowflake.com/en/)

- [Oracle](https://www.oracle.com/)

- [Microsoft SQL Server](https://www.microsoft.com/en-us/sql-server/sql-server-downloads)

- [BigQuery](https://cloud.google.com/bigquery)

- [SQLite](https://www.sqlite.org/)

- [DuckDB](https://duckdb.org/)

## Getting started

See the [documentation](https://vanna.ai/docs/) for specifics on your desired database, LLM, etc.

If you want to get a feel for how it works after training, you can try this [Colab notebook](https://vanna.ai/docs/app/).

### Install

```bash

pip install vanna

```

There are a number of optional packages that can be installed so see the [documentation](https://vanna.ai/docs/) for more details.

### Import

See the [documentation](https://vanna.ai/docs/) if you're customizing the LLM or vector database.

```python

# The import statement will vary depending on your LLM and vector database. This is an example for OpenAI + ChromaDB

from vanna.openai.openai_chat import OpenAI_Chat

from vanna.chromadb.chromadb_vector import ChromaDB_VectorStore

class MyVanna(ChromaDB_VectorStore, OpenAI_Chat):

def __init__(self, config=None):

ChromaDB_VectorStore.__init__(self, config=config)

OpenAI_Chat.__init__(self, config=config)

vn = MyVanna(config={'api_key': 'sk-...', 'model': 'gpt-4-...'})

# See the documentation for other options

```

## Training

You may or may not need to run these `vn.train` commands depending on your use case. See the [documentation](https://vanna.ai/docs/) for more details.

These statements are shown to give you a feel for how it works.

### Train with DDL Statements

DDL statements contain information about the table names, columns, data types, and relationships in your database.

```python

vn.train(ddl="""

CREATE TABLE IF NOT EXISTS my-table (

id INT PRIMARY KEY,

name VARCHAR(100),

age INT

)

""")

```

### Train with Documentation

Sometimes you may want to add documentation about your business terminology or definitions.

```python

vn.train(documentation="Our business defines XYZ as ...")

```

### Train with SQL

You can also add SQL queries to your training data. This is useful if you have some queries already laying around. You can just copy and paste those from your editor to begin generating new SQL.

```python

vn.train(sql="SELECT name, age FROM my-table WHERE name = 'John Doe'")

```

## Asking questions

```python

vn.ask("What are the top 10 customers by sales?")

```

You'll get SQL

```sql

SELECT c.c_name as customer_name,

sum(l.l_extendedprice * (1 - l.l_discount)) as total_sales

FROM snowflake_sample_data.tpch_sf1.lineitem l join snowflake_sample_data.tpch_sf1.orders o

ON l.l_orderkey = o.o_orderkey join snowflake_sample_data.tpch_sf1.customer c

ON o.o_custkey = c.c_custkey

GROUP BY customer_name

ORDER BY total_sales desc limit 10;

```

If you've connected to a database, you'll get the table:

|

CUSTOMER_NAME |

TOTAL_SALES |

| 0 |

Customer#000143500 |

6757566.0218 |

| 1 |

Customer#000095257 |

6294115.3340 |

| 2 |

Customer#000087115 |

6184649.5176 |

| 3 |

Customer#000131113 |

6080943.8305 |

| 4 |

Customer#000134380 |

6075141.9635 |

| 5 |

Customer#000103834 |

6059770.3232 |

| 6 |

Customer#000069682 |

6057779.0348 |

| 7 |

Customer#000102022 |

6039653.6335 |

| 8 |

Customer#000098587 |

6027021.5855 |

| 9 |

Customer#000064660 |

5905659.6159 |

You'll also get an automated Plotly chart:

## RAG vs. Fine-Tuning

RAG

- Portable across LLMs

- Easy to remove training data if any of it becomes obsolete

- Much cheaper to run than fine-tuning

- More future-proof -- if a better LLM comes out, you can just swap it out

Fine-Tuning

- Good if you need to minimize tokens in the prompt

- Slow to get started

- Expensive to train and run (generally)

## Why Vanna?

1. **High accuracy on complex datasets.**

- Vanna’s capabilities are tied to the training data you give it

- More training data means better accuracy for large and complex datasets

2. **Secure and private.**

- Your database contents are never sent to the LLM or the vector database

- SQL execution happens in your local environment

3. **Self learning.**

- If using via Jupyter, you can choose to "auto-train" it on the queries that were successfully executed

- If using via other interfaces, you can have the interface prompt the user to provide feedback on the results

- Correct question to SQL pairs are stored for future reference and make the future results more accurate

4. **Supports any SQL database.**

- The package allows you to connect to any SQL database that you can otherwise connect to with Python

5. **Choose your front end.**

- Most people start in a Jupyter Notebook.

- Expose to your end users via Slackbot, web app, Streamlit app, or a custom front end.

## Extending Vanna

Vanna is designed to connect to any database, LLM, and vector database. There's a [VannaBase](https://github.com/vanna-ai/vanna/blob/main/src/vanna/base/base.py) abstract base class that defines some basic functionality. The package provides implementations for use with OpenAI and ChromaDB. You can easily extend Vanna to use your own LLM or vector database. See the [documentation](https://vanna.ai/docs/) for more details.

## Vanna in 100 Seconds

https://github.com/vanna-ai/vanna/assets/7146154/eb90ee1e-aa05-4740-891a-4fc10e611cab

## More resources

- [Full Documentation](https://vanna.ai/docs/)

- [Website](https://vanna.ai)

- [Discord group for support](https://discord.gg/qUZYKHremx)

## /img/top-10-customers.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/img/top-10-customers.png

## /img/vanna-readme-diagram.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/img/vanna-readme-diagram.png

## /papers/ai-sql-accuracy-2023-08-17.md

# AI SQL Accuracy: Testing different LLMs + context strategies to maximize SQL generation accuracy

_2023-08-17_

## TLDR

The promise of having an autonomous AI agent that can answer business users’ plain English questions is an attractive but thus far elusive proposition. Many have tried, with limited success, to get ChatGPT to write. The failure is primarily due of a lack of the LLM's knowledge of the particular dataset it’s being asked to query.

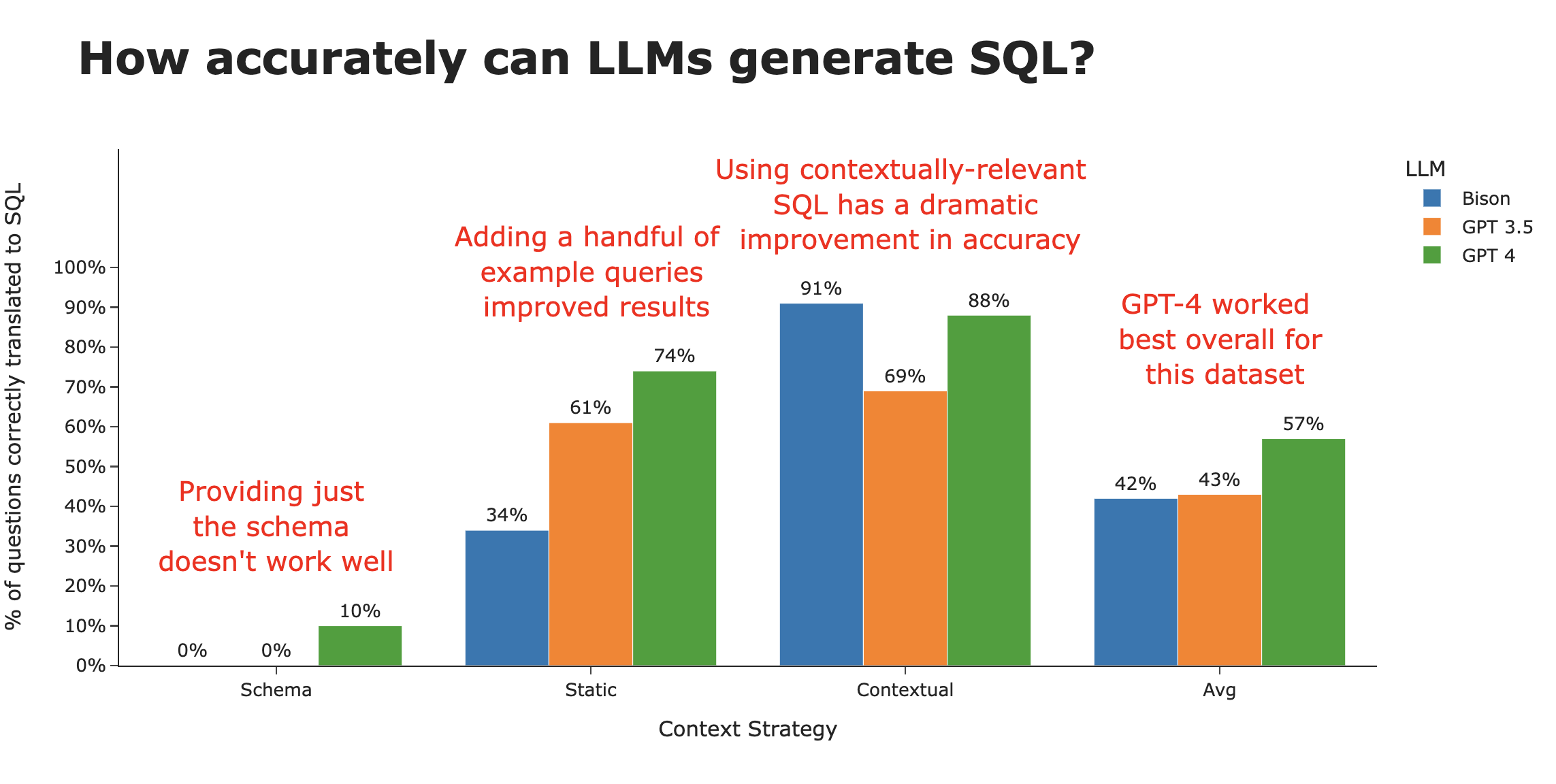

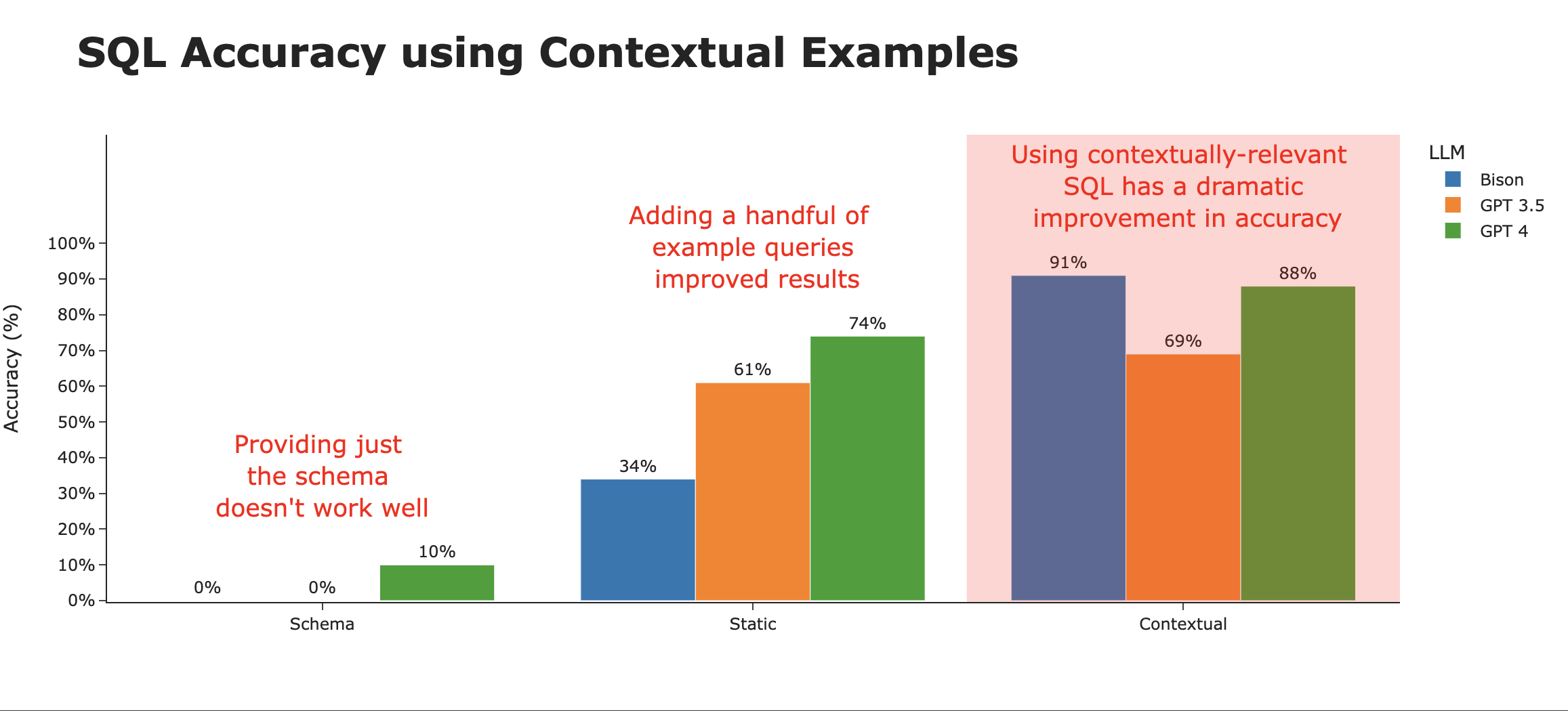

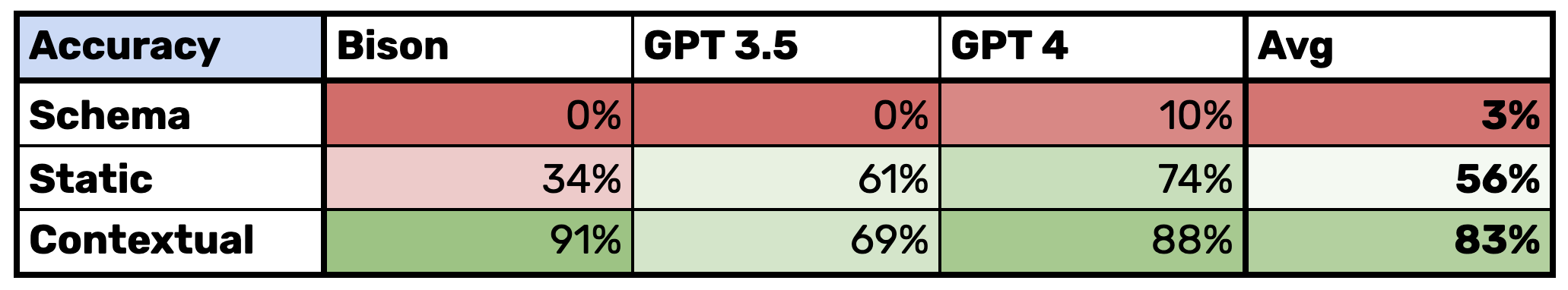

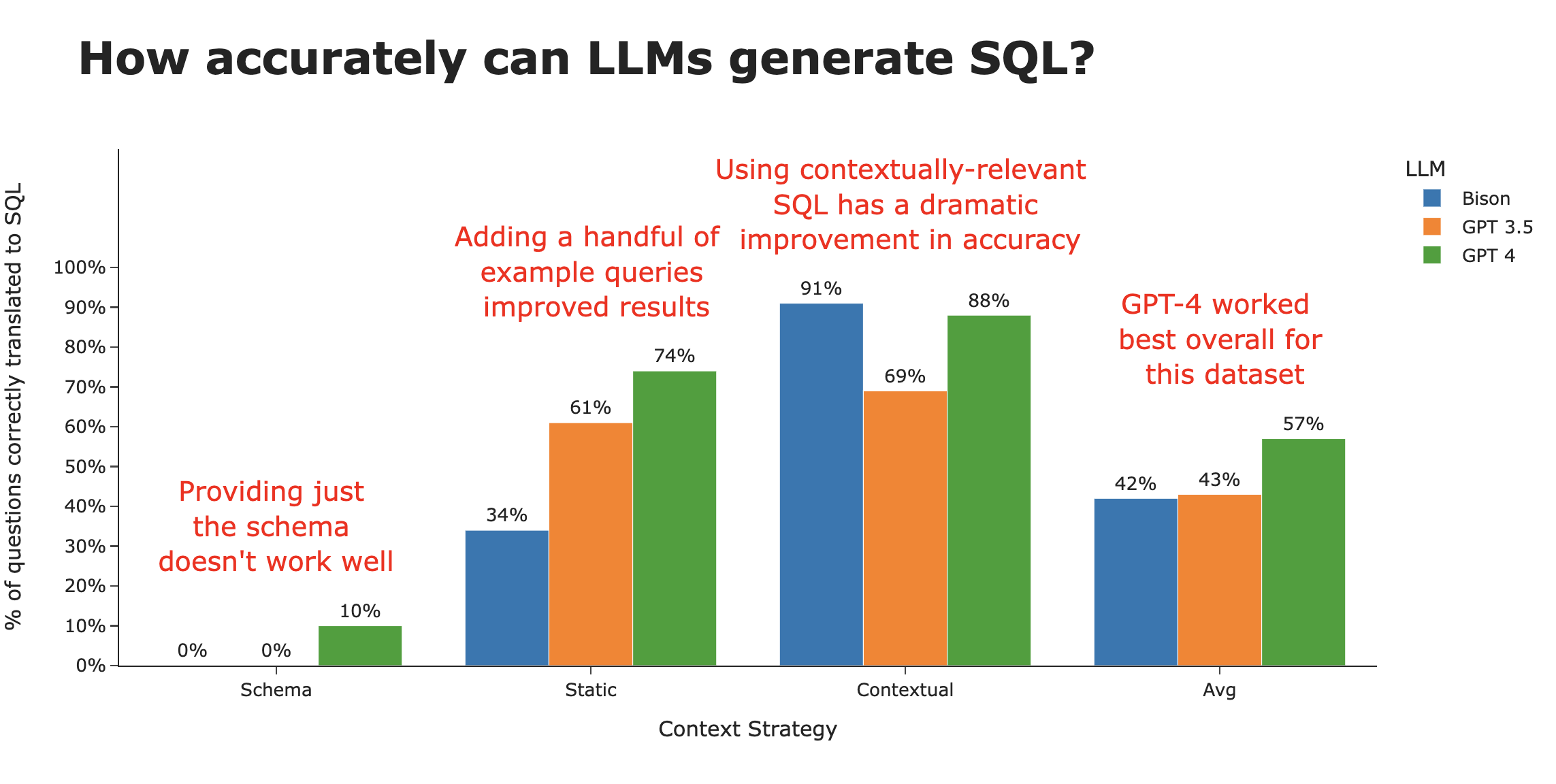

In this paper, **we show that context is everything, and with the right context, we can get from ~3% accuracy to ~80% accuracy**. We go through three different context strategies, and showcase one that is the clear winner - where we combine schema definitions, documentation, and prior SQL queries with a relevance search.

We also compare a few different LLMs - including Google Bison, GPT 3.5, GPT 4, and a brief attempt with Llama 2. While **GPT 4 takes the crown of the best overall LLM for generating SQL**, Google’s Bison is roughly equivalent when enough context is provided.

Finally, we show how you can use the methods demonstrated here to generate SQL for your database.

Here's a summary of our key findings -

## Table of Contents

* [Why use AI to generate SQL?](#why-use-ai-to-generate-sql)

* [Setting up architecture of the test](#setting-up-architecture-of-the-test)

* [Setting up the test levers](#setting-up-the-test-levers)

* [Choosing a dataset](#choosing-a-dataset)

* [Choosing the questions](#choosing-the-questions)

* [Choosing the prompt](#choosing-the-prompt)

* [Choosing the LLMs (Foundational models)](#choosing-the-llms-foundational-models)

* [Choosing the context](#choosing-the-context)

* [Using ChatGPT to generate SQL](#using-chatgpt-to-generate-sql)

* [Using schema only](#using-schema-only)

* [Using SQL examples](#using-sql-examples)

* [Using contextually relevant examples](#using-contextually-relevant-examples)

* [Analyzing the results](#analyzing-the-results)

* [Next steps to getting accuracy even higher](#next-steps-to-getting-accuracy-even-higher)

* [Use AI to write SQL for your dataset](#use-ai-to-write-sql-for-your-dataset)

## Why use AI to generate SQL?

Many organizations have now adopted some sort of data warehouse or data lake - a repository of a lot of the organization’s critical data that is queryable for analytical purposes. This ocean of data is brimming with potential insights, but only a small fraction of people in an enterprise have the two skills required to harness the data —

1. A solid comprehension of **advanced SQL**, and

2. A comprehensive knowledge of the **organization’s unique data structure & schema**

The number of people with both of the above is not only vanishingly small, but likely not the same people that have the majority of the questions.

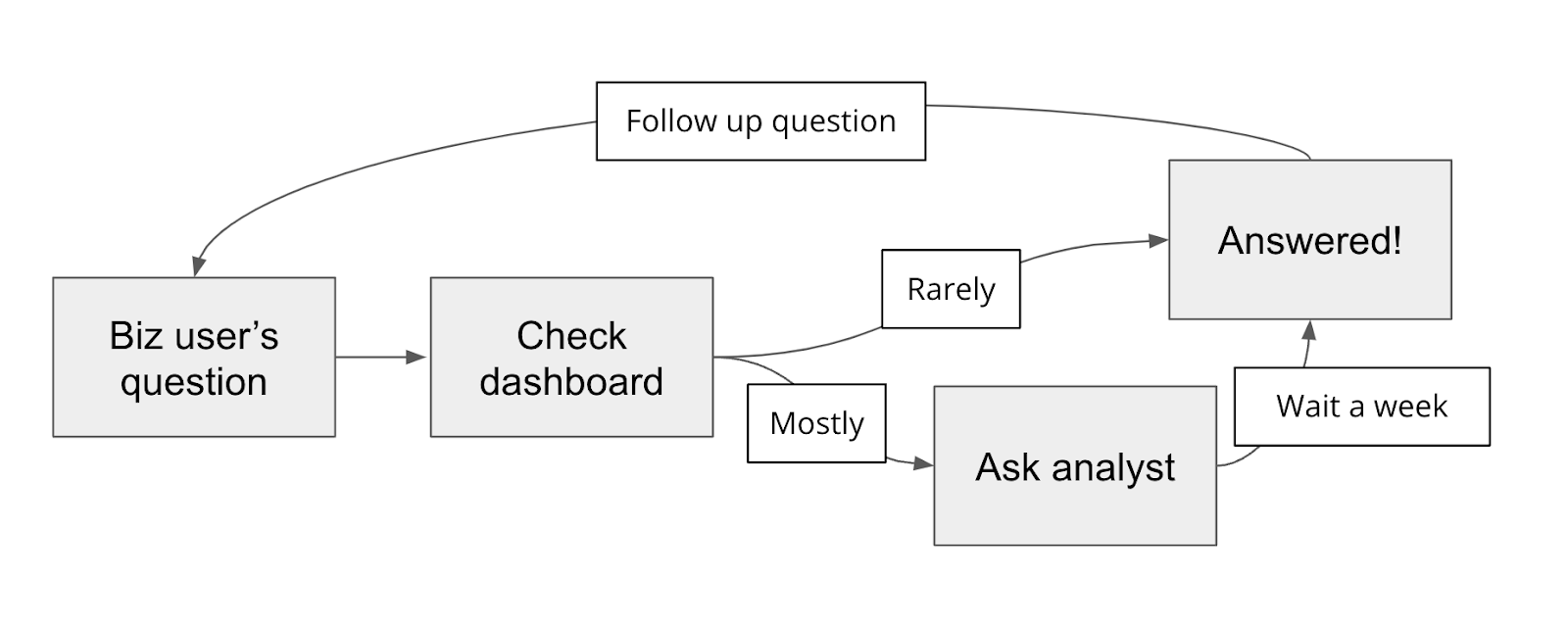

**So what actually happens inside organizations?** Business users, like product managers, sales managers, and executives, have data questions that will inform business decisions and strategy. They’ll first check dashboards, but most questions are ad hoc and specific, and the answers aren’t available, so they’ll ask a data analyst or engineer - whomever possesses the combination of skills above. These people are busy, and take a while to get to the request, and as soon as they get an answer, the business user has follow up questions.

**This process is painful** for both the business user (long lead times to get answers) and the analyst (distracts from their main projects), and leads to many potential insights being lost.

**Enter generative AI!** LLMs potentially give the opportunity to business users to query the database in plain English (with the LLMs doing the SQL translation), and we have heard from dozens of companies that this would be a game changer for their data teams and even their businesses.

**The key challenge is generating accurate SQL for complex and messy databases**. Plenty of people we’ve spoken with have tried to use ChatGPT to write SQL with limited success and a lot of pain. Many have given up and reverted back to the old fashioned way of manually writing SQL. At best, ChatGPT is a sometimes useful co-pilot for analysts to get syntax right.

**But there’s hope!** We’ve spent the last few months immersed in this problem, trying various models, techniques and approaches to improve the accuracy of SQL generated by LLMs. In this paper, we show the performance of various LLMs and how the strategy of providing contextually relevant correct SQL to the LLM can allow the LLM to **achieve extremely high accuracy**.

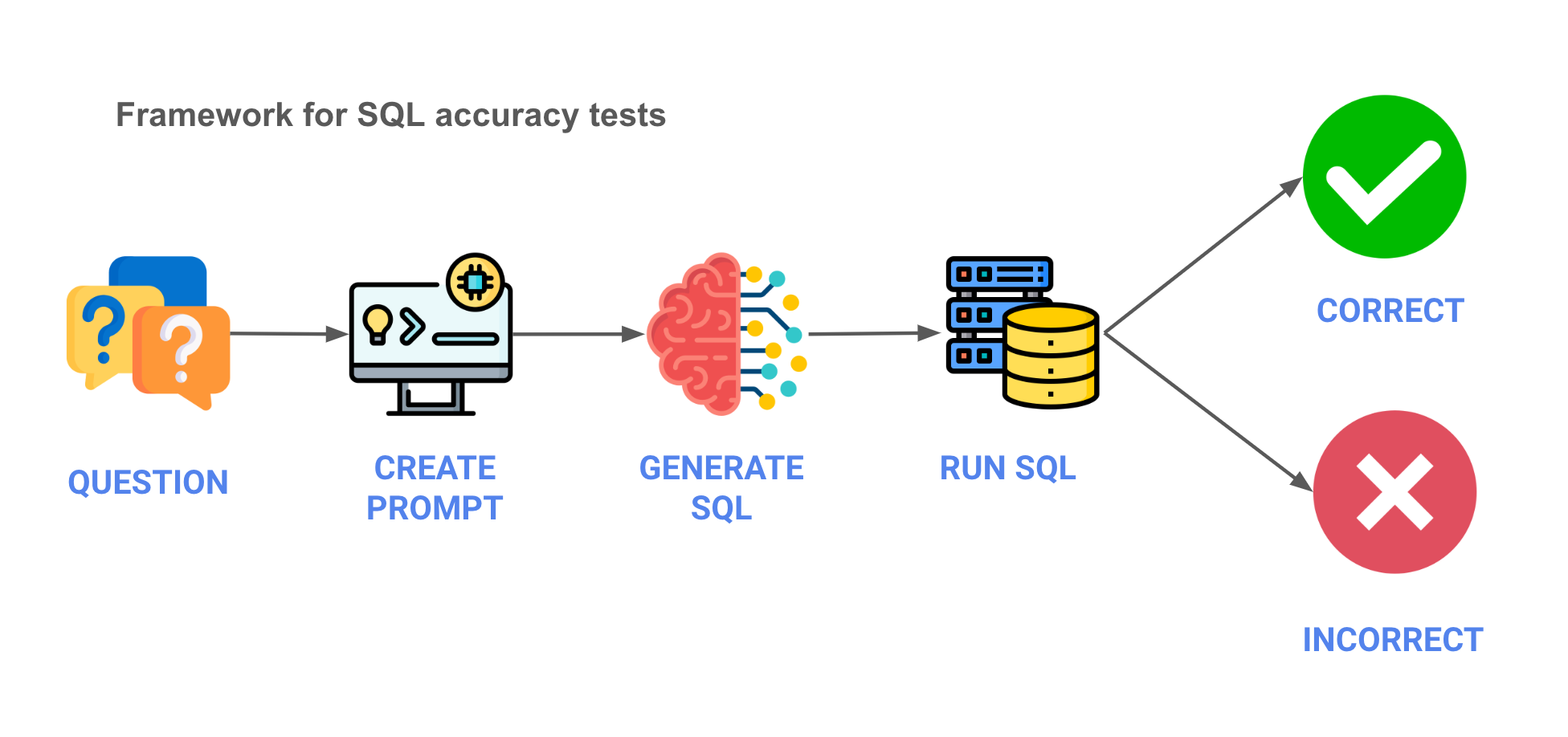

## Setting up architecture of the test

First, we needed to define the architecture of the test. A rough outline is below, in a five step process, with _pseudo code_ below -

1. **Question** - We start with the business question.

```python

question = "how many clients are there in germany"

```

2. **Prompt** - We create the prompt to send to the LLM.

```python

prompt = f"""

Write a SQL statement for the following question:

{question}

"""

```

3. **Generate SQL** - Using an API, we’ll send the prompt to the LLM and get back generated SQL.

```python

sql = llm.api(api_key=api_key, prompt=prompt, parameters=parameters)

```

4. **Run SQL** - We'll run the SQL against the database.

```python

df = db.conn.execute(sql)

```

5. **Validate results** - Finally, we’ll validate that the results are in line with what we expect.

There are some shades of grey when it comes to the results so we did a manual evaluation of the results. You can see those results [here](https://github.com/vanna-ai/research/blob/main/data/sec_evaluation_data_tagged.csv)

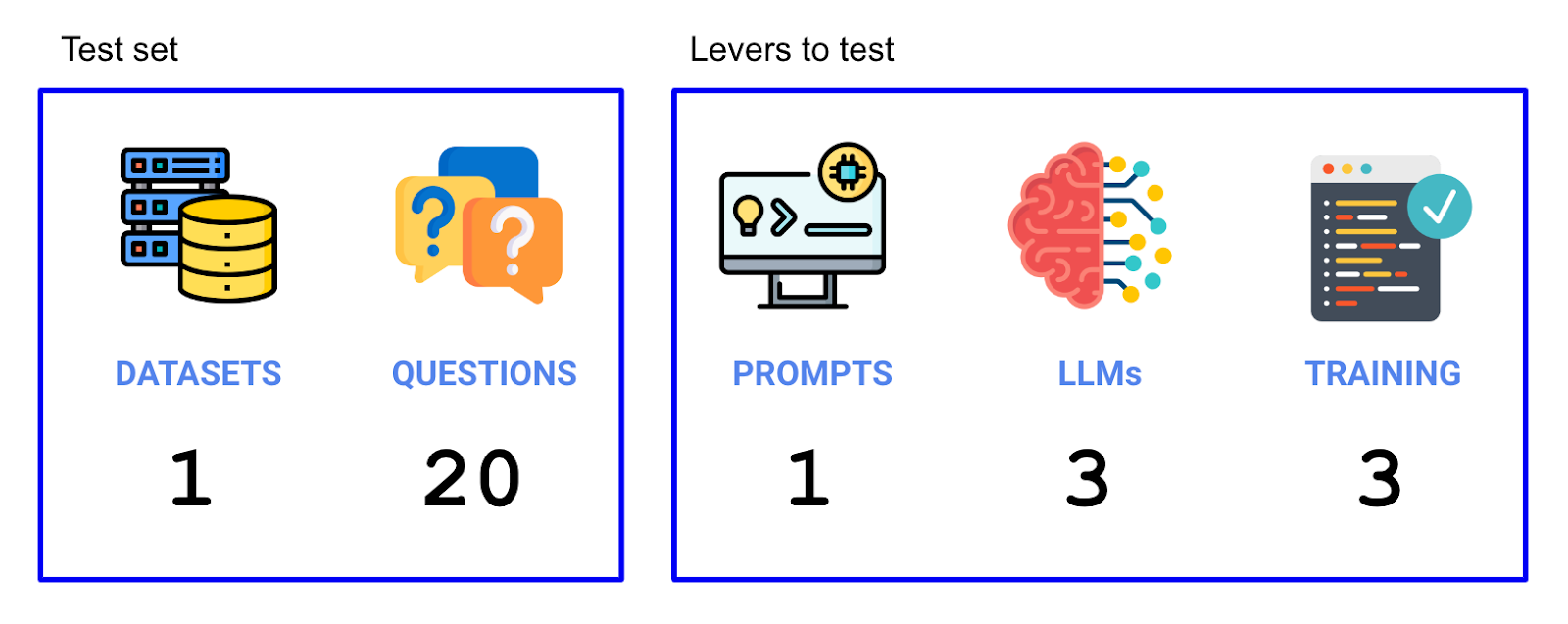

## Setting up the test levers

Now that we have our experiment set up, we’ll need to figure out what levers would impact accuracy, and what our test set would be. We tried two levers (the LLMs and the training data used), and we ran on 20 questions that made up our test set. So we ran a total of 3 LLMs x 3 context strategies x 20 questions = 180 individual trials in this experiment.

### Choosing a dataset

First, we need to **choose an appropriate dataset** to try. We had a few guiding principles -

1. **Representative**. Datasets in enterprises are often complex and this complexity isn’t captured in many demo / sample datasets. We want to use a complicated database that has real-word use cases that contains real-world data.

2. **Accessible**. We also wanted that dataset to be publicly available.

3. **Understandable**. The dataset should be somewhat understandable to a wide audience - anything too niche or technical would be difficult to decipher.

4. **Maintained**. We’d prefer a dataset that’s maintained and updated properly, in reflection of a real database.

A dataset that we found that met the criteria above was the Cybersyn SEC filings dataset, which is available for free on the Snowflake marketplace:

https://docs.cybersyn.com/our-data-products/economic-and-financial/sec-filings

### Choosing the questions

Next, we need to **choose the questions**. Here are some sample questions (see them all in this [file](https://github.com/vanna-ai/research/blob/main/data/questions_sec.csv)) -

1. How many companies are there in the dataset?

2. What annual measures are available from the 'ALPHABET INC.' Income Statement?

3. What are the quarterly 'Automotive sales' and 'Automotive leasing' for Tesla?

4. How many Chipotle restaurants are there currently?

Now that we have the dataset + questions, we’ll need to come up with the levers.

### Choosing the prompt

For the **prompt**, for this run, we are going to hold the prompt constant, though we’ll do a follow up which varies the prompt.

### Choosing the LLMs (Foundational models)

For the **LLMs** to test, we’ll try the following -

1. [**Bison (Google)**](https://cloud.google.com/vertex-ai/docs/generative-ai/learn/models) - Bison is the version of [PaLM 2](https://blog.google/technology/ai/google-palm-2-ai-large-language-model/) that’s available via GCP APIs.

2. [**GPT 3.5 Turbo (OpenAI)**](https://platform.openai.com/docs/models/gpt-3-5) - GPT 3.5 until recently was the flagship OpenAI model despite 4 being available because of latency and cost benefits, and not a huge accuracy difference (well - we’ll put that to the test) especially for basic tasks.

3. [**GPT 4 (OpenAI)**](https://platform.openai.com/docs/models/gpt-4) - The more advanced but less performant OpenAI model. GPT 4 is multi-modal, though we won’t be using that functionality.

4. [**Llama 2 (Meta)**](https://ai.meta.com/llama/) - We really wanted to include an open source model - and the leading one is Meta’s Llama 2. But our setup through [Replicate](https://replicate.com/replicate/llama-2-70b-chat) quickly conked out, and we weren’t able to fix it in time for publishing this. In our early runs, when it did work, we found performance to be mediocre at best.

### Choosing the context

Finally, we’ll have three types of **context**. Context refers to what we send to the LLM that helps give the LLM context on our specific dataset.

1. **Schema only**. We put the schema (using DDL) in the context window.

2. **Static examples**. We put static example SQL queries in the context windows.

3. **Contextually relevant examples**. Finally, we put the most relevant context (SQL / DDL / documentation) into the context window, finding it via a vector search based on embeddings.

## Using ChatGPT to generate SQL

This section is a bit unfair to ChatGPT, but it’s a helpful place to get started. Let’s just take a question and ask ChatGPT to generate the SQL -

**Prompt**

```

Write Snowflake SQL to answer the following question -

What are the quarterly revenues by business segment for Alphabet?

Return SQL only

```

**Response**

Certainly, here's the SQL query:

```sql

SELECT

EXTRACT(QUARTER FROM date_column) AS quarter,

business_segment,

SUM(revenue) AS quarterly_revenue

FROM

revenue_table

WHERE

company_name = 'Alphabet'

GROUP BY

quarter,

business_segment

ORDER BY

quarter,

business_segment;

-- Replace date_column, business_segment, revenue, revenue_table, and adjust any other identifiers to match your specific database schema.

```

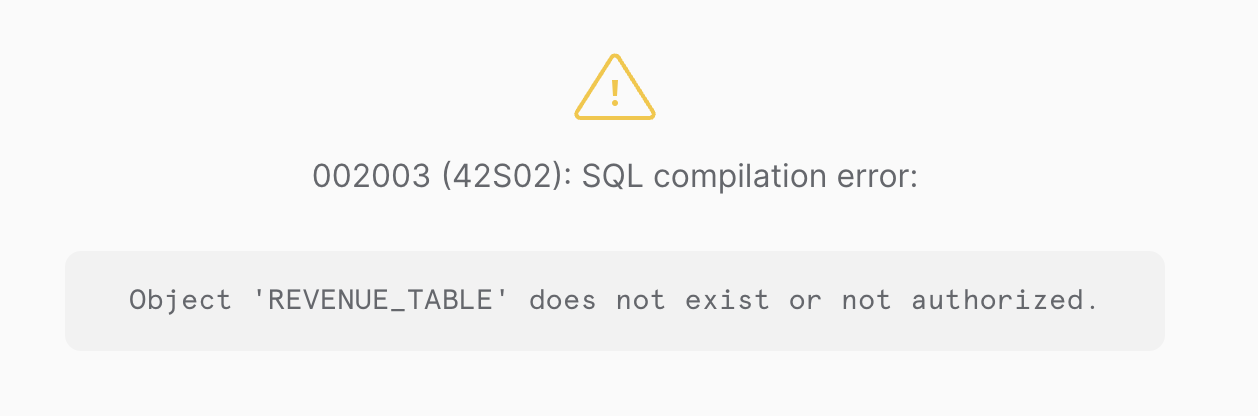

Inevitably, when we try to run this, we hit an error, because it made up the table name since we didn't provide it -

Of course, we are being unfair to the LLMs - as magical as they are, they cannot (unfortunately? luckily?) possibly know what’s in our database - yet. So let’s hop into the tests where we give more context.

## Using schema only

First, we take the schema of the dataset and put it into the context window. This is usually what we've seen people do with ChatGPT or in tutorials.

An example prompt may look like this (in reality we used the information schema because of how Snowflake shares work but this shows the principle) -

```

The user provides a question and you provide SQL. You will only respond with SQL code and not with any explanations.

Respond with only SQL code. Do not answer with any explanations -- just the code.

You may use the following DDL statements as a reference for what tables might be available.

CREATE TABLE Table1...

CREATE TABLE Table2...

CREATE TABLE Table3...

```

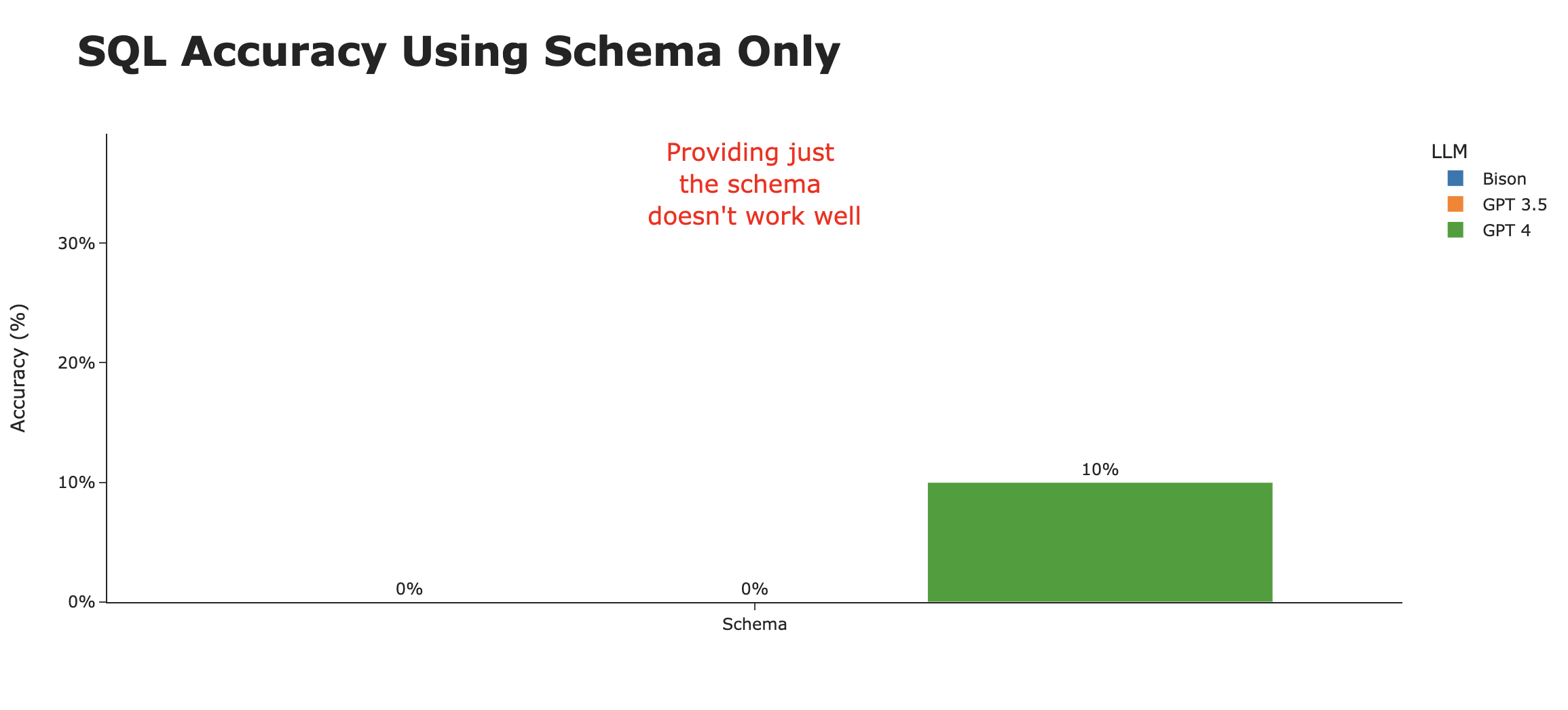

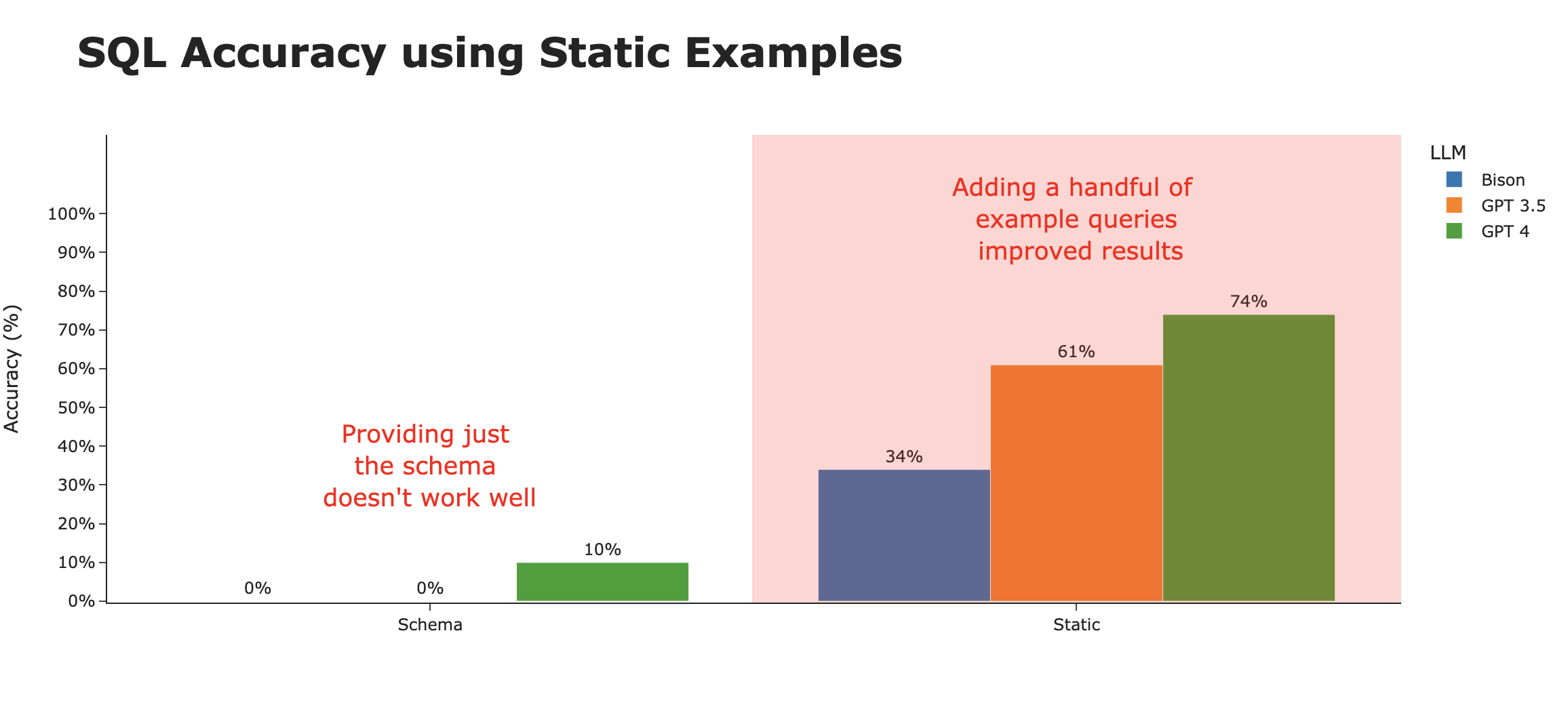

The results were, in a word, terrible. Of the 60 attempts (20 questions x 3 models), only two questions were answered correctly (both by GPT 4), **for an abysmal accuracy rate of 3%**. Here are the two questions that GPT 4 managed to get right -

1. What are the top 10 measure descriptions by frequency?

2. What are the distinct statements in the report attributes?

It’s evident that by just using the schema, we don’t get close to meeting the bar of a helpful AI SQL agent, though it may be somewhat useful in being an analyst copilot.

## Using SQL examples

If we put ourselves in the shoes of a human who’s exposed to this dataset for the first time, in addition to the table definitions, they’d first look at the example queries to see _how_ to query the database correctly.

These queries can give additional context not available in the schema - for example, which columns to use, how tables join together, and other intricacies of querying that particular dataset.

Cybersyn, as with other data providers on the Snowflake marketplace, provides a few (in this case 3) example queries in their documentation. Let’s include these in the context window.

By providing just those 3 example queries, we see substantial improvements to the correctness of the SQL generated. However, this accuracy greatly varies by the underlying LLM. It seems that GPT-4 is the most able to generalize the example queries in a way that generates the most accurate SQL.

## Using contextually relevant examples

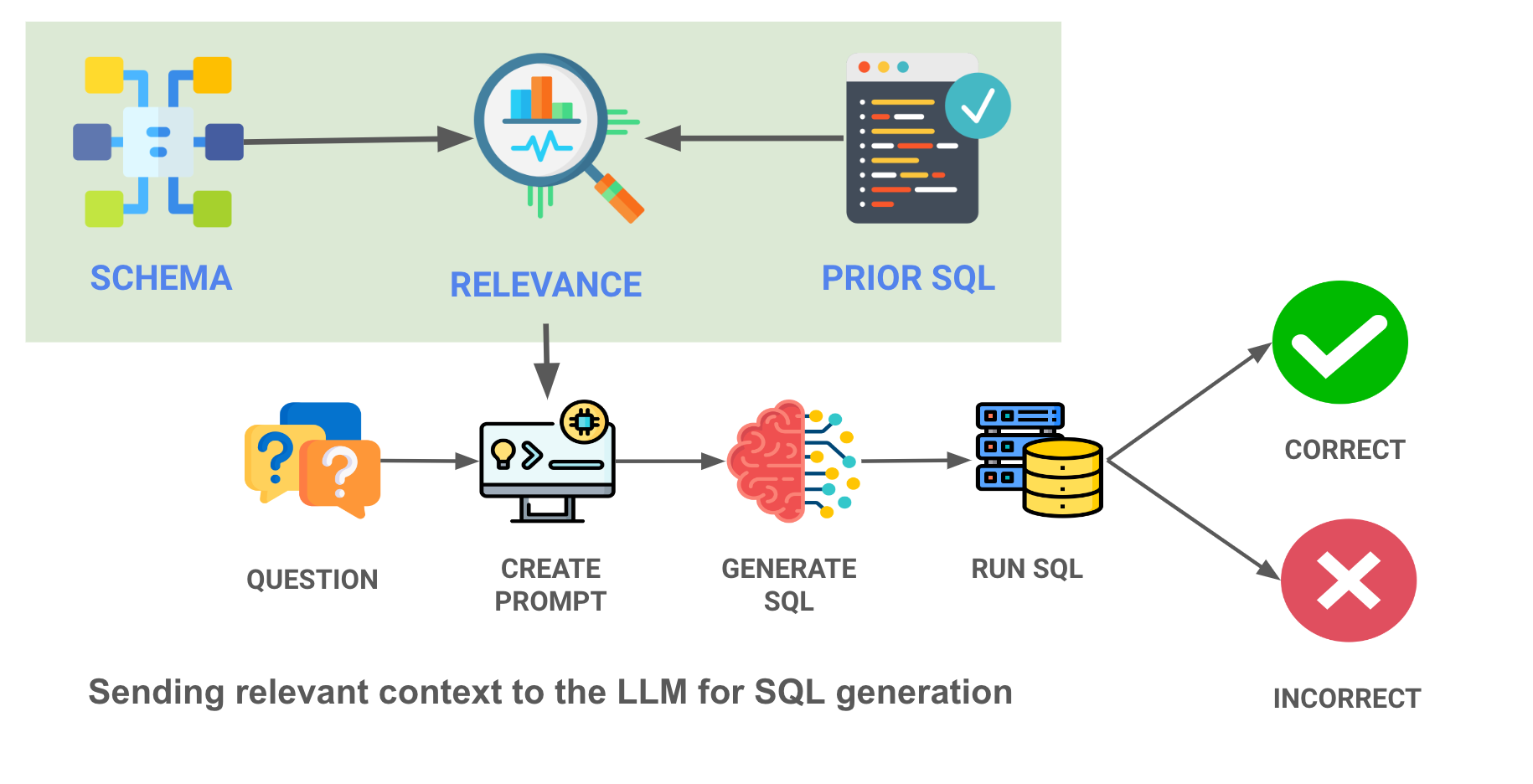

Enterprise data warehouses often contain 100s (or even 1000s) of tables, and an order of magnitude more queries that cover all the use cases within their organizations. Given the limited size of the context windows of modern LLMs, we can’t just shove all the prior queries and schema definitions into the prompt.

Our final approach to context is a more sophisticated ML approach - load embeddings of prior queries and the table schemas into a vector database, and only choose the most relevant queries / tables to the question asked. Here's a diagram of what we are doing - note the contextual relevance search in the green box -

By surfacing the most relevant examples of those SQL queries to the LLM, we can drastically improve performance of even the less capable LLMs. Here, we give the LLM the 10 most relevant SQL query examples for the question (from a list of 30 examples stored), and accuracy rates skyrocket.

We can improve performance even more by maintaining a history of SQL statements that were executable and correctly answer actual questions that users have had.

## Analyzing the results

It’s clear that the biggest difference is not in the type of LLM, but rather in the strategy employed to give the appropriate context to the LLM (eg the “training data” used).

When looking at SQL accuracy by context strategy, it’s clear that this is what makes the difference. We go from ~3% accurate using just the schema, to ~80% accurate when intelligently using contextual examples.

There are still interesting trends with the LLMs themselves. While Bison starts out at the bottom of the heap in both the Schema and Static context strategies, it rockets to the top with a full Contextual strategy. Averaged across the three strategies, **GPT 4 takes the crown as the best LLM for SQL generation**.

## Next steps to getting accuracy even higher

We'll soon do a follow up on this analysis to get even deeper into accurate SQL generation. Some next steps are -

1. **Use other datasets**: We'd love to try this on other, real world, enterprise datasets. What happens when you get to 100 tables? 1000 tables?

2. **Add more training data**: While 30 queries is great, what happens when you 10x, 100x that number?

3. **Try more databases**: This test was run on a Snowflake database, but we've also gotten this working on BigQuery, Postgres, Redshift, and SQL Server.

4. **Experiment with more foundational models:** We are close to being able to use Llama 2, and we'd love to try other LLMs.

We have some anecdotal evidence for the above but we'll be expanding and refining our tests to include more of these items.

## Use AI to write SQL for your dataset

While the SEC data is a good start, you must be wondering whether this could be relevant for your data and your organization. We’re building a [Python package](https://vanna.ai) that can generate SQL for your database as well as additional functionality like being able to generate Plotly code for the charts, follow-up questions, and various other functions.

Here's an overview of how it works

```python

import vanna as vn

```

1. **Train Using Schema**

```python

vn.train(ddl="CREATE TABLE ...")

```

2. **Train Using Documentation**

```python

vn.train(documentation="...")

```

3. **Train Using SQL Examples**

```python

vn.train(sql="SELECT ...")

```

4. **Generating SQL**

The easiest ways to use Vanna out of the box are `vn.ask(question="What are the ...")` which will return the SQL, table, and chart as you can see in this [example notebook](https://vanna.ai/docs/getting-started.html). `vn.ask` is a wrapper around `vn.generate_sql`, `vn.run_sql`, `vn.generate_plotly_code`, `vn.get_plotly_figure`, and `vn.generate_followup_questions`. This will use optimized context to generate SQL for your question where Vanna will call the LLM for you.

Alternately, you can use `vn.get_related_training_data(question="What are the ...")` as shown in this [notebook](https://github.com/vanna-ai/research/blob/main/notebooks/test-cybersyn-sec.ipynb) which will retrieve the most relevant context that you can use to construct your own prompt to send to any LLM.

This [notebook](https://github.com/vanna-ai/research/blob/main/notebooks/train-cybersyn-sec-3.ipynb) shows an example of how the "Static" context strategy was used to train Vanna on the Cybersyn SEC dataset.

## A note on nomenclature

* **Foundational Model**: This is the underlying LLM

* **Context Model (aka Vanna Model)**: This is a layer that sits on top of the LLM and provides context to the LLM

* **Training**: Generally when we refer to "training" we're talking about training the context model.

## Contact Us

Ping us on [Slack](https://join.slack.com/t/vanna-ai/shared_invite/zt-1unu0ipog-iE33QCoimQiBDxf2o7h97w), [Discord](https://discord.com/invite/qUZYKHremx), or [set up a 1:1 call](https://calendly.com/d/y7j-yqq-yz4/meet-with-both-vanna-co-founders) if you have any issues.

## /papers/img/accuracy-by-llm.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/accuracy-by-llm.png

## /papers/img/accuracy-using-contextual-examples.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/accuracy-using-contextual-examples.png

## /papers/img/accuracy-using-schema-only.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/accuracy-using-schema-only.png

## /papers/img/accuracy-using-static-examples.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/accuracy-using-static-examples.png

## /papers/img/chat-gpt-question.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/chat-gpt-question.png

## /papers/img/chatgpt-results.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/chatgpt-results.png

## /papers/img/framework-for-sql-generation.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/framework-for-sql-generation.png

## /papers/img/question-flow.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/question-flow.png

## /papers/img/schema-only.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/schema-only.png

## /papers/img/sql-error.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/sql-error.png

## /papers/img/summary-table.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/summary-table.png

## /papers/img/summary.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/summary.png

## /papers/img/test-architecture.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/test-architecture.png

## /papers/img/test-levers.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/test-levers.png

## /papers/img/using-contextually-relevant-examples.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/using-contextually-relevant-examples.png

## /papers/img/using-sql-examples.png

Binary file available at https://raw.githubusercontent.com/vanna-ai/vanna/refs/heads/main/papers/img/using-sql-examples.png

## /pyproject.toml

```toml path="/pyproject.toml"

[build-system]

requires = ["flit_core >=3.2,<4"]

build-backend = "flit_core.buildapi"

[project]

name = "vanna"

version = "0.7.9"

authors = [

{ name="Zain Hoda", email="zain@vanna.ai" },

]

description = "Generate SQL queries from natural language"

readme = "README.md"

requires-python = ">=3.9"

classifiers = [

"Programming Language :: Python :: 3",

"License :: OSI Approved :: MIT License",

"Operating System :: OS Independent",

]

dependencies = [

"requests", "tabulate", "plotly", "pandas", "sqlparse", "kaleido", "flask", "flask-sock", "flasgger", "sqlalchemy"

]

[project.urls]

"Homepage" = "https://github.com/vanna-ai/vanna"

"Bug Tracker" = "https://github.com/vanna-ai/vanna/issues"

[project.optional-dependencies]

postgres = ["psycopg2-binary", "db-dtypes"]

mysql = ["PyMySQL"]

clickhouse = ["clickhouse_connect"]

bigquery = ["google-cloud-bigquery"]

snowflake = ["snowflake-connector-python"]

duckdb = ["duckdb"]

google = ["google-generativeai", "google-cloud-aiplatform"]

all = ["psycopg2-binary", "db-dtypes", "PyMySQL", "google-cloud-bigquery", "snowflake-connector-python", "duckdb", "openai", "qianfan", "mistralai>=1.0.0", "chromadb<1.0.0", "anthropic", "zhipuai", "marqo", "google-generativeai", "google-cloud-aiplatform", "qdrant-client", "fastembed", "ollama", "httpx", "opensearch-py", "opensearch-dsl", "transformers", "pinecone", "pymilvus[model]","weaviate-client", "azure-search-documents", "azure-identity", "azure-common", "faiss-cpu", "boto", "boto3", "botocore", "langchain_core", "langchain_postgres", "langchain-community", "langchain-huggingface", "xinference-client"]

test = ["tox"]

chromadb = ["chromadb<1.0.0"]

openai = ["openai"]

qianfan = ["qianfan"]

mistralai = ["mistralai>=1.0.0"]

anthropic = ["anthropic"]

gemini = ["google-generativeai"]

marqo = ["marqo"]

zhipuai = ["zhipuai"]

ollama = ["ollama", "httpx"]

qdrant = ["qdrant-client", "fastembed"]

vllm = ["vllm"]

pinecone = ["pinecone", "fastembed"]

opensearch = ["opensearch-py", "opensearch-dsl", "langchain-community", "langchain-huggingface"]

hf = ["transformers"]

milvus = ["pymilvus[model]"]

bedrock = ["boto3", "botocore"]

weaviate = ["weaviate-client"]

azuresearch = ["azure-search-documents", "azure-identity", "azure-common", "fastembed"]

pgvector = ["langchain-postgres>=0.0.12"]

faiss-cpu = ["faiss-cpu"]

faiss-gpu = ["faiss-gpu"]

xinference-client = ["xinference-client"]

oracle = ["oracledb", "chromadb<1.0.0"]

```

## /setup.cfg

```cfg path="/setup.cfg"

[flake8]

ignore = BLK100,W503,E203,E722,F821,F841

max-line-length = 100

exclude = .tox,.git,docs,venv,jupyter_notebook_config.py,jupyter_lab_config.py,assets.py

[tool:brunette]

verbose = true

single-quotes = false

target-version = py39

exclude = .tox,.git,docs,venv,assets.py

```

## /src/.editorconfig

```editorconfig path="/src/.editorconfig"

# top-most EditorConfig file

root = true

# Python files

[*.py]

# Indentation style: space

indent_style = space

# Indentation size: Use 2 spaces

indent_size = 2

# Newline character at the end of file

insert_final_newline = true

# Charset: utf-8

charset = utf-8

# Trim trailing whitespace

trim_trailing_whitespace = true

# Max line length: 79 characters as per PEP 8 guidelines

max_line_length = 79

# Set end of line format to LF

# Exclude specific files or directories

exclude = 'docs|node_modules|migrations|.git|.tox'

```

## /src/vanna/ZhipuAI/ZhipuAI_Chat.py

```py path="/src/vanna/ZhipuAI/ZhipuAI_Chat.py"

import re

from typing import List

import pandas as pd

from zhipuai import ZhipuAI

from ..base import VannaBase

class ZhipuAI_Chat(VannaBase):

def __init__(self, config=None):

VannaBase.__init__(self, config=config)

if config is None:

return

if "api_key" not in config:

raise Exception("Missing api_key in config")

self.api_key = config["api_key"]

self.model = config["model"] if "model" in config else "glm-4"

self.api_url = "https://open.bigmodel.cn/api/paas/v4/chat/completions"

# Static methods similar to those in ZhipuAI_Chat for message formatting and utility

@staticmethod

def system_message(message: str) -> dict:

return {"role": "system", "content": message}

@staticmethod

def user_message(message: str) -> dict:

return {"role": "user", "content": message}

@staticmethod

def assistant_message(message: str) -> dict:

return {"role": "assistant", "content": message}

@staticmethod

def str_to_approx_token_count(string: str) -> int:

return len(string) / 4

@staticmethod

def add_ddl_to_prompt(

initial_prompt: str, ddl_list: List[str], max_tokens: int = 14000

) -> str:

if len(ddl_list) > 0:

initial_prompt += "\nYou may use the following DDL statements as a reference for what tables might be available. Use responses to past questions also to guide you:\n\n"

for ddl in ddl_list:

if (

ZhipuAI_Chat.str_to_approx_token_count(initial_prompt)

+ ZhipuAI_Chat.str_to_approx_token_count(ddl)

< max_tokens

):

initial_prompt += f"{ddl}\n\n"

return initial_prompt

@staticmethod

def add_documentation_to_prompt(

initial_prompt: str, documentation_List: List[str], max_tokens: int = 14000

) -> str:

if len(documentation_List) > 0:

initial_prompt += "\nYou may use the following documentation as a reference for what tables might be available. Use responses to past questions also to guide you:\n\n"

for documentation in documentation_List:

if (

ZhipuAI_Chat.str_to_approx_token_count(initial_prompt)

+ ZhipuAI_Chat.str_to_approx_token_count(documentation)

< max_tokens

):

initial_prompt += f"{documentation}\n\n"

return initial_prompt

@staticmethod

def add_sql_to_prompt(

initial_prompt: str, sql_List: List[str], max_tokens: int = 14000

) -> str:

if len(sql_List) > 0:

initial_prompt += "\nYou may use the following SQL statements as a reference for what tables might be available. Use responses to past questions also to guide you:\n\n"

for question in sql_List:

if (

ZhipuAI_Chat.str_to_approx_token_count(initial_prompt)

+ ZhipuAI_Chat.str_to_approx_token_count(question["sql"])

< max_tokens

):

initial_prompt += f"{question['question']}\n{question['sql']}\n\n"

return initial_prompt

def get_sql_prompt(

self,

question: str,

question_sql_list: List,

ddl_list: List,

doc_list: List,

**kwargs,

):

initial_prompt = "The user provides a question and you provide SQL. You will only respond with SQL code and not with any explanations.\n\nRespond with only SQL code. Do not answer with any explanations -- just the code.\n"

initial_prompt = ZhipuAI_Chat.add_ddl_to_prompt(

initial_prompt, ddl_list, max_tokens=14000

)

initial_prompt = ZhipuAI_Chat.add_documentation_to_prompt(

initial_prompt, doc_list, max_tokens=14000

)

message_log = [ZhipuAI_Chat.system_message(initial_prompt)]

for example in question_sql_list:

if example is None:

print("example is None")

else:

if example is not None and "question" in example and "sql" in example:

message_log.append(ZhipuAI_Chat.user_message(example["question"]))

message_log.append(ZhipuAI_Chat.assistant_message(example["sql"]))

message_log.append({"role": "user", "content": question})

return message_log

def get_followup_questions_prompt(

self,

question: str,

df: pd.DataFrame,

question_sql_list: List,

ddl_list: List,

doc_list: List,

**kwargs,

):

initial_prompt = f"The user initially asked the question: '{question}': \n\n"

initial_prompt = ZhipuAI_Chat.add_ddl_to_prompt(

initial_prompt, ddl_list, max_tokens=14000

)

initial_prompt = ZhipuAI_Chat.add_documentation_to_prompt(

initial_prompt, doc_list, max_tokens=14000

)

initial_prompt = ZhipuAI_Chat.add_sql_to_prompt(

initial_prompt, question_sql_list, max_tokens=14000

)

message_log = [ZhipuAI_Chat.system_message(initial_prompt)]

message_log.append(

ZhipuAI_Chat.user_message(

"Generate a List of followup questions that the user might ask about this data. Respond with a List of questions, one per line. Do not answer with any explanations -- just the questions."

)

)

return message_log

def generate_question(self, sql: str, **kwargs) -> str:

response = self.submit_prompt(

[

self.system_message(

"The user will give you SQL and you will try to guess what the business question this query is answering. Return just the question without any additional explanation. Do not reference the table name in the question."

),

self.user_message(sql),

],

**kwargs,

)

return response

def _extract_python_code(self, markdown_string: str) -> str:

# Regex pattern to match Python code blocks

pattern = r"\`\`\`[\w\s]*python\n([\s\S]*?)\`\`\`|\`\`\`([\s\S]*?)\`\`\`"

# Find all matches in the markdown string

matches = re.findall(pattern, markdown_string, re.IGNORECASE)

# Extract the Python code from the matches

python_code = []

for match in matches:

python = match[0] if match[0] else match[1]

python_code.append(python.strip())

if len(python_code) == 0:

return markdown_string

return python_code[0]

def _sanitize_plotly_code(self, raw_plotly_code: str) -> str:

# Remove the fig.show() statement from the plotly code

plotly_code = raw_plotly_code.replace("fig.show()", "")

return plotly_code

def generate_plotly_code(

self, question: str = None, sql: str = None, df_metadata: str = None, **kwargs

) -> str:

if question is not None:

system_msg = f"The following is a pandas DataFrame that contains the results of the query that answers the question the user asked: '{question}'"

else:

system_msg = "The following is a pandas DataFrame "

if sql is not None:

system_msg += f"\n\nThe DataFrame was produced using this query: {sql}\n\n"

system_msg += f"The following is information about the resulting pandas DataFrame 'df': \n{df_metadata}"

message_log = [

self.system_message(system_msg),

self.user_message(

"Can you generate the Python plotly code to chart the results of the dataframe? Assume the data is in a pandas dataframe called 'df'. If there is only one value in the dataframe, use an Indicator. Respond with only Python code. Do not answer with any explanations -- just the code."

),

]

plotly_code = self.submit_prompt(message_log, kwargs=kwargs)

return self._sanitize_plotly_code(self._extract_python_code(plotly_code))

def submit_prompt(

self, prompt, max_tokens=500, temperature=0.7, top_p=0.7, stop=None, **kwargs

):

if prompt is None:

raise Exception("Prompt is None")

if len(prompt) == 0:

raise Exception("Prompt is empty")

client = ZhipuAI(api_key=self.api_key)

response = client.chat.completions.create(

model="glm-4",

max_tokens=max_tokens,

temperature=temperature,

top_p=top_p,

stop=stop,

messages=prompt,

)

return response.choices[0].message.content

```

## /src/vanna/ZhipuAI/ZhipuAI_embeddings.py

```py path="/src/vanna/ZhipuAI/ZhipuAI_embeddings.py"

from typing import List

from zhipuai import ZhipuAI

from chromadb import Documents, EmbeddingFunction, Embeddings

from ..base import VannaBase

class ZhipuAI_Embeddings(VannaBase):

"""

[future functionality] This function is used to generate embeddings from ZhipuAI.

Args:

VannaBase (_type_): _description_

"""

def __init__(self, config=None):

VannaBase.__init__(self, config=config)

if "api_key" not in config:

raise Exception("Missing api_key in config")

self.api_key = config["api_key"]

self.client = ZhipuAI(api_key=self.api_key)

def generate_embedding(self, data: str, **kwargs) -> List[float]:

embedding = self.client.embeddings.create(

model="embedding-2",

input=data,

)

return embedding.data[0].embedding

class ZhipuAIEmbeddingFunction(EmbeddingFunction[Documents]):

"""

A embeddingFunction that uses ZhipuAI to generate embeddings which can use in chromadb.

usage:

class MyVanna(ChromaDB_VectorStore, ZhipuAI_Chat):

def __init__(self, config=None):

ChromaDB_VectorStore.__init__(self, config=config)

ZhipuAI_Chat.__init__(self, config=config)

config={'api_key': 'xxx'}

zhipu_embedding_function = ZhipuAIEmbeddingFunction(config=config)

config = {"api_key": "xxx", "model": "glm-4","path":"xy","embedding_function":zhipu_embedding_function}

vn = MyVanna(config)

"""

def __init__(self, config=None):

if config is None or "api_key" not in config:

raise ValueError("Missing 'api_key' in config")

self.api_key = config["api_key"]

self.model_name = config.get("model_name", "embedding-2")

try:

self.client = ZhipuAI(api_key=self.api_key)

except Exception as e:

raise ValueError(f"Error initializing ZhipuAI client: {e}")

def __call__(self, input: Documents) -> Embeddings:

# Replace newlines, which can negatively affect performance.

input = [t.replace("\n", " ") for t in input]

all_embeddings = []

print(f"Generating embeddings for {len(input)} documents")

# Iterating over each document for individual API calls

for document in input:

try:

response = self.client.embeddings.create(

model=self.model_name,

input=document

)

# print(response)

embedding = response.data[0].embedding

all_embeddings.append(embedding)

# print(f"Cost required: {response.usage.total_tokens}")

except Exception as e:

raise ValueError(f"Error generating embedding for document: {e}")

return all_embeddings

```

## /src/vanna/ZhipuAI/__init__.py

```py path="/src/vanna/ZhipuAI/__init__.py"

from .ZhipuAI_Chat import ZhipuAI_Chat

from .ZhipuAI_embeddings import ZhipuAI_Embeddings, ZhipuAIEmbeddingFunction

```

## /src/vanna/__init__.py

```py path="/src/vanna/__init__.py"

import dataclasses

import json

import os

from dataclasses import dataclass

from typing import Callable, List, Tuple, Union

import pandas as pd

import requests

import plotly.graph_objs

from .exceptions import (

OTPCodeError,

ValidationError,

)

from .types import (

ApiKey,

Status,

TrainingData,

UserEmail,

UserOTP,

)

from .utils import sanitize_model_name, validate_config_path

api_key: Union[str, None] = None # API key for Vanna.AI

fig_as_img: bool = False # Whether or not to return Plotly figures as images

run_sql: Union[

Callable[[str], pd.DataFrame], None

] = None # Function to convert SQL to a Pandas DataFrame

"""

**Example**

\`\`\`python

vn.run_sql = lambda sql: pd.read_sql(sql, engine)

\`\`\`

Set the SQL to DataFrame function for Vanna.AI. This is used in the [`vn.ask(...)`][vanna.ask] function.

Instead of setting this directly you can also use [`vn.connect_to_snowflake(...)`][vanna.connect_to_snowflake] to set this.

"""

__org: Union[str, None] = None # Organization name for Vanna.AI

_unauthenticated_endpoint = "https://ask.vanna.ai/unauthenticated_rpc"

def error_deprecation():

raise Exception("""

Please switch to the following method for initializing Vanna:

from vanna.remote import VannaDefault

api_key = # Your API key from https://vanna.ai/account/profile

vanna_model_name = # Your model name from https://vanna.ai/account/profile

vn = VannaDefault(model=vanna_model_name, api_key=api_key)

""")

def __unauthenticated_rpc_call(method, params):

headers = {

"Content-Type": "application/json",

}

data = {"method": method, "params": [__dataclass_to_dict(obj) for obj in params]}

response = requests.post(

_unauthenticated_endpoint, headers=headers, data=json.dumps(data)

)

return response.json()

def __dataclass_to_dict(obj):

return dataclasses.asdict(obj)

def get_api_key(email: str, otp_code: Union[str, None] = None) -> str:

"""

**Example:**

\`\`\`python

vn.get_api_key(email="my-email@example.com")

\`\`\`

Login to the Vanna.AI API.

Args:

email (str): The email address to login with.

otp_code (Union[str, None]): The OTP code to login with. If None, an OTP code will be sent to the email address.

Returns:

str: The API key.

"""

vanna_api_key = os.environ.get("VANNA_API_KEY", None)

if vanna_api_key is not None:

return vanna_api_key

if email == "my-email@example.com":

raise ValidationError(

"Please replace 'my-email@example.com' with your email address."

)

if otp_code is None:

params = [UserEmail(email=email)]

d = __unauthenticated_rpc_call(method="send_otp", params=params)

if "result" not in d:

raise OTPCodeError("Error sending OTP code.")

status = Status(**d["result"])

if not status.success:

raise OTPCodeError(f"Error sending OTP code: {status.message}")

otp_code = input("Check your email for the code and enter it here: ")

params = [UserOTP(email=email, otp=otp_code)]

d = __unauthenticated_rpc_call(method="verify_otp", params=params)

if "result" not in d:

raise OTPCodeError("Error verifying OTP code.")

key = ApiKey(**d["result"])

if key is None:

raise OTPCodeError("Error verifying OTP code.")

api_key = key.key

return api_key

def set_api_key(key: str) -> None:

error_deprecation()

def get_models() -> List[str]:

error_deprecation()

def create_model(model: str, db_type: str) -> bool:

error_deprecation()

def add_user_to_model(model: str, email: str, is_admin: bool) -> bool:

error_deprecation()

def update_model_visibility(public: bool) -> bool:

error_deprecation()

def set_model(model: str):

error_deprecation()

def add_sql(

question: str, sql: str, tag: Union[str, None] = "Manually Trained"

) -> bool:

error_deprecation()

def add_ddl(ddl: str) -> bool:

error_deprecation()

def add_documentation(documentation: str) -> bool:

error_deprecation()

@dataclass

class TrainingPlanItem:

item_type: str

item_group: str

item_name: str

item_value: str

def __str__(self):

if self.item_type == self.ITEM_TYPE_SQL:

return f"Train on SQL: {self.item_group} {self.item_name}"

elif self.item_type == self.ITEM_TYPE_DDL:

return f"Train on DDL: {self.item_group} {self.item_name}"

elif self.item_type == self.ITEM_TYPE_IS:

return f"Train on Information Schema: {self.item_group} {self.item_name}"

ITEM_TYPE_SQL = "sql"

ITEM_TYPE_DDL = "ddl"

ITEM_TYPE_IS = "is"

class TrainingPlan:

"""

A class representing a training plan. You can see what's in it, and remove items from it that you don't want trained.

**Example:**

\`\`\`python

plan = vn.get_training_plan()

plan.get_summary()

\`\`\`

"""

_plan: List[TrainingPlanItem]

def __init__(self, plan: List[TrainingPlanItem]):

self._plan = plan

def __str__(self):

return "\n".join(self.get_summary())

def __repr__(self):

return self.__str__()

def get_summary(self) -> List[str]:

"""

**Example:**

\`\`\`python

plan = vn.get_training_plan()

plan.get_summary()

\`\`\`

Get a summary of the training plan.

Returns:

List[str]: A list of strings describing the training plan.

"""

return [f"{item}" for item in self._plan]

def remove_item(self, item: str):

"""

**Example:**

\`\`\`python

plan = vn.get_training_plan()

plan.remove_item("Train on SQL: What is the average salary of employees?")

\`\`\`

Remove an item from the training plan.

Args:

item (str): The item to remove.

"""

for plan_item in self._plan:

if str(plan_item) == item:

self._plan.remove(plan_item)

break

def get_training_plan_postgres(

filter_databases: Union[List[str], None] = None,

filter_schemas: Union[List[str], None] = None,

include_information_schema: bool = False,

use_historical_queries: bool = True,

) -> TrainingPlan:

error_deprecation()

def get_training_plan_generic(df) -> TrainingPlan:

error_deprecation()

def get_training_plan_experimental(

filter_databases: Union[List[str], None] = None,

filter_schemas: Union[List[str], None] = None,

include_information_schema: bool = False,

use_historical_queries: bool = True,

) -> TrainingPlan:

error_deprecation()

def train(

question: str = None,

sql: str = None,

ddl: str = None,

documentation: str = None,

json_file: str = None,

sql_file: str = None,

plan: TrainingPlan = None,

) -> bool:

error_deprecation()

def flag_sql_for_review(

question: str, sql: Union[str, None] = None, error_msg: Union[str, None] = None

) -> bool:

error_deprecation()

def remove_sql(question: str) -> bool:

error_deprecation()

def remove_training_data(id: str) -> bool:

error_deprecation()

def generate_sql(question: str) -> str:

error_deprecation()

def get_related_training_data(question: str) -> TrainingData:

error_deprecation()

def generate_meta(question: str) -> str:

error_deprecation()

def generate_followup_questions(question: str, df: pd.DataFrame) -> List[str]:

error_deprecation()

def generate_questions() -> List[str]:

error_deprecation()

def ask(

question: Union[str, None] = None,

print_results: bool = True,

auto_train: bool = True,

generate_followups: bool = True,

) -> Union[

Tuple[

Union[str, None],

Union[pd.DataFrame, None],

Union[plotly.graph_objs.Figure, None],

Union[List[str], None],

],

None,

]:

error_deprecation()

def generate_plotly_code(

question: Union[str, None],

sql: Union[str, None],

df: pd.DataFrame,

chart_instructions: Union[str, None] = None,

) -> str:

error_deprecation()

def get_plotly_figure(

plotly_code: str, df: pd.DataFrame, dark_mode: bool = True

) -> plotly.graph_objs.Figure:

error_deprecation()

def get_results(cs, default_database: str, sql: str) -> pd.DataFrame:

error_deprecation()

def generate_explanation(sql: str) -> str:

error_deprecation()

def generate_question(sql: str) -> str:

error_deprecation()

def get_all_questions() -> pd.DataFrame:

error_deprecation()

def get_training_data() -> pd.DataFrame:

error_deprecation()

def connect_to_sqlite(url: str):

error_deprecation()

def connect_to_snowflake(

account: str,

username: str,

password: str,

database: str,

schema: Union[str, None] = None,

role: Union[str, None] = None,

):

error_deprecation()

def connect_to_postgres(

host: str = None,

dbname: str = None,

user: str = None,

password: str = None,

port: int = None,

):

error_deprecation()

def connect_to_bigquery(cred_file_path: str = None, project_id: str = None):

error_deprecation()

def connect_to_duckdb(url: str="memory", init_sql: str = None):

error_deprecation()

```

## /src/vanna/advanced/__init__.py

```py path="/src/vanna/advanced/__init__.py"

from abc import ABC, abstractmethod

class VannaAdvanced(ABC):

def __init__(self, config=None):

self.config = config

@abstractmethod

def get_function(self, question: str, additional_data: dict = {}) -> dict:

pass

@abstractmethod

def create_function(self, question: str, sql: str, plotly_code: str, **kwargs) -> dict:

pass

@abstractmethod

def update_function(self, old_function_name: str, updated_function: dict) -> bool:

pass

@abstractmethod

def delete_function(self, function_name: str) -> bool:

pass

@abstractmethod

def get_all_functions(self) -> list:

pass

```

## /src/vanna/anthropic/__init__.py

```py path="/src/vanna/anthropic/__init__.py"

from .anthropic_chat import Anthropic_Chat

```

## /src/vanna/anthropic/anthropic_chat.py

```py path="/src/vanna/anthropic/anthropic_chat.py"

import os

import anthropic

from ..base import VannaBase

class Anthropic_Chat(VannaBase):

def __init__(self, client=None, config=None):

VannaBase.__init__(self, config=config)

# default parameters - can be overrided using config

self.temperature = 0.7

self.max_tokens = 500

if "temperature" in config:

self.temperature = config["temperature"]

if "max_tokens" in config:

self.max_tokens = config["max_tokens"]

if client is not None:

self.client = client

return

if config is None and client is None:

self.client = anthropic.Anthropic(api_key=os.getenv("ANTHROPIC_API_KEY"))

return

if "api_key" in config:

self.client = anthropic.Anthropic(api_key=config["api_key"])

def system_message(self, message: str) -> any:

return {"role": "system", "content": message}

def user_message(self, message: str) -> any:

return {"role": "user", "content": message}

def assistant_message(self, message: str) -> any:

return {"role": "assistant", "content": message}

def submit_prompt(self, prompt, **kwargs) -> str:

if prompt is None:

raise Exception("Prompt is None")

if len(prompt) == 0:

raise Exception("Prompt is empty")

# Count the number of tokens in the message log

# Use 4 as an approximation for the number of characters per token

num_tokens = 0

for message in prompt:

num_tokens += len(message["content"]) / 4

if self.config is not None and "model" in self.config:

print(

f"Using model {self.config['model']} for {num_tokens} tokens (approx)"

)

# claude required system message is a single filed

# https://docs.anthropic.com/claude/reference/messages_post

system_message = ''

no_system_prompt = []

for prompt_message in prompt:

role = prompt_message['role']

if role == 'system':

system_message = prompt_message['content']

else:

no_system_prompt.append({"role": role, "content": prompt_message['content']})

response = self.client.messages.create(

model=self.config["model"],

messages=no_system_prompt,

system=system_message,

max_tokens=self.max_tokens,

temperature=self.temperature,

)

return response.content[0].text

```

## /src/vanna/azuresearch/__init__.py

```py path="/src/vanna/azuresearch/__init__.py"

from .azuresearch_vector import AzureAISearch_VectorStore

```

## /src/vanna/azuresearch/azuresearch_vector.py

```py path="/src/vanna/azuresearch/azuresearch_vector.py"

import ast

import json

from typing import List

import pandas as pd

from azure.core.credentials import AzureKeyCredential

from azure.search.documents import SearchClient

from azure.search.documents.indexes import SearchIndexClient

from azure.search.documents.indexes.models import (

ExhaustiveKnnAlgorithmConfiguration,

ExhaustiveKnnParameters,

SearchableField,

SearchField,

SearchFieldDataType,

SearchIndex,

VectorSearch,

VectorSearchAlgorithmKind,

VectorSearchAlgorithmMetric,

VectorSearchProfile,

)

from azure.search.documents.models import VectorFilterMode, VectorizedQuery

from fastembed import TextEmbedding

from ..base import VannaBase

from ..utils import deterministic_uuid

class AzureAISearch_VectorStore(VannaBase):

"""

AzureAISearch_VectorStore is a class that provides a vector store for Azure AI Search.

Args:

config (dict): Configuration dictionary. Defaults to {}. You must provide an API key in the config.

- azure_search_endpoint (str, optional): Azure Search endpoint. Defaults to "https://azcognetive.search.windows.net".

- azure_search_api_key (str): Azure Search API key.

- dimensions (int, optional): Dimensions of the embeddings. Defaults to 384 which corresponds to the dimensions of BAAI/bge-small-en-v1.5.

- fastembed_model (str, optional): Fastembed model to use. Defaults to "BAAI/bge-small-en-v1.5".

- index_name (str, optional): Name of the index. Defaults to "vanna-index".

- n_results (int, optional): Number of results to return. Defaults to 10.

- n_results_ddl (int, optional): Number of results to return for DDL queries. Defaults to the value of n_results.

- n_results_sql (int, optional): Number of results to return for SQL queries. Defaults to the value of n_results.

- n_results_documentation (int, optional): Number of results to return for documentation queries. Defaults to the value of n_results.

Raises:

ValueError: If config is None, or if 'azure_search_api_key' is not provided in the config.

"""

def __init__(self, config=None):

VannaBase.__init__(self, config=config)

self.config = config or None

if config is None:

raise ValueError(

"config is required, pass an API key, 'azure_search_api_key', in the config."

)

azure_search_endpoint = config.get("azure_search_endpoint", "https://azcognetive.search.windows.net")

azure_search_api_key = config.get("azure_search_api_key")

self.dimensions = config.get("dimensions", 384)

self.fastembed_model = config.get("fastembed_model", "BAAI/bge-small-en-v1.5")

self.index_name = config.get("index_name", "vanna-index")

self.n_results_ddl = config.get("n_results_ddl", config.get("n_results", 10))

self.n_results_sql = config.get("n_results_sql", config.get("n_results", 10))

self.n_results_documentation = config.get("n_results_documentation", config.get("n_results", 10))

if not azure_search_api_key:

raise ValueError(

"'azure_search_api_key' is required in config to use AzureAISearch_VectorStore"

)

self.index_client = SearchIndexClient(

endpoint=azure_search_endpoint,

credential=AzureKeyCredential(azure_search_api_key)

)

self.search_client = SearchClient(

endpoint=azure_search_endpoint,

index_name=self.index_name,

credential=AzureKeyCredential(azure_search_api_key)

)

if self.index_name not in self._get_indexes():

self._create_index()

def _create_index(self) -> bool:

fields = [

SearchableField(name="id", type=SearchFieldDataType.String, key=True, filterable=True),

SearchableField(name="document", type=SearchFieldDataType.String, searchable=True, filterable=True),

SearchField(name="type", type=SearchFieldDataType.String, filterable=True, searchable=True),

SearchField(name="document_vector", type=SearchFieldDataType.Collection(SearchFieldDataType.Single), searchable=True, vector_search_dimensions=self.dimensions, vector_search_profile_name="ExhaustiveKnnProfile"),

]

vector_search = VectorSearch(

algorithms=[

ExhaustiveKnnAlgorithmConfiguration(

name="ExhaustiveKnn",

kind=VectorSearchAlgorithmKind.EXHAUSTIVE_KNN,

parameters=ExhaustiveKnnParameters(

metric=VectorSearchAlgorithmMetric.COSINE

)

)

],

profiles=[

VectorSearchProfile(

name="ExhaustiveKnnProfile",

algorithm_configuration_name="ExhaustiveKnn",

)

]

)

index = SearchIndex(name=self.index_name, fields=fields, vector_search=vector_search)

result = self.index_client.create_or_update_index(index)

print(f'{result.name} created')

def _get_indexes(self) -> list:

return [index for index in self.index_client.list_index_names()]

def add_ddl(self, ddl: str) -> str:

id = deterministic_uuid(ddl) + "-ddl"

document = {

"id": id,

"document": ddl,

"type": "ddl",

"document_vector": self.generate_embedding(ddl)

}

self.search_client.upload_documents(documents=[document])

return id

def add_documentation(self, doc: str) -> str:

id = deterministic_uuid(doc) + "-doc"

document = {

"id": id,

"document": doc,

"type": "doc",

"document_vector": self.generate_embedding(doc)

}

self.search_client.upload_documents(documents=[document])

return id

def add_question_sql(self, question: str, sql: str) -> str:

question_sql_json = json.dumps({"question": question, "sql": sql}, ensure_ascii=False)

id = deterministic_uuid(question_sql_json) + "-sql"

document = {

"id": id,

"document": question_sql_json,

"type": "sql",

"document_vector": self.generate_embedding(question_sql_json)

}

self.search_client.upload_documents(documents=[document])

return id

def get_related_ddl(self, text: str) -> List[str]:

result = []

vector_query = VectorizedQuery(vector=self.generate_embedding(text), fields="document_vector")

df = pd.DataFrame(

self.search_client.search(

top=self.n_results_ddl,

vector_queries=[vector_query],

select=["id", "document", "type"],

filter=f"type eq 'ddl'"

)

)

if len(df):

result = df["document"].tolist()

return result

def get_related_documentation(self, text: str) -> List[str]:

result = []

vector_query = VectorizedQuery(vector=self.generate_embedding(text), fields="document_vector")

df = pd.DataFrame(

self.search_client.search(

top=self.n_results_documentation,

vector_queries=[vector_query],

select=["id", "document", "type"],

filter=f"type eq 'doc'",

vector_filter_mode=VectorFilterMode.PRE_FILTER

)

)

if len(df):

result = df["document"].tolist()

return result

def get_similar_question_sql(self, question: str) -> List[str]:

result = []

# Vectorize the text

vector_query = VectorizedQuery(vector=self.generate_embedding(question), fields="document_vector")

df = pd.DataFrame(

self.search_client.search(

top=self.n_results_sql,

vector_queries=[vector_query],

select=["id", "document", "type"],

filter=f"type eq 'sql'"

)

)

if len(df): # Check if there is similar query and the result is not empty

result = [ast.literal_eval(element) for element in df["document"].tolist()]

return result

def get_training_data(self) -> List[str]:

search = self.search_client.search(

search_text="*",

select=['id', 'document', 'type'],

filter=f"(type eq 'sql') or (type eq 'ddl') or (type eq 'doc')"

).by_page()

df = pd.DataFrame([item for page in search for item in page])

if len(df):

df.loc[df["type"] == "sql", "question"] = df.loc[df["type"] == "sql"]["document"].apply(lambda x: json.loads(x)["question"])

df.loc[df["type"] == "sql", "content"] = df.loc[df["type"] == "sql"]["document"].apply(lambda x: json.loads(x)["sql"])

df.loc[df["type"] != "sql", "content"] = df.loc[df["type"] != "sql"]["document"]

return df[["id", "question", "content", "type"]]

return pd.DataFrame()

def remove_training_data(self, id: str) -> bool:

result = self.search_client.delete_documents(documents=[{'id':id}])

return result[0].succeeded

def remove_index(self):

self.index_client.delete_index(self.index_name)

def generate_embedding(self, data: str, **kwargs) -> List[float]:

embedding_model = TextEmbedding(model_name=self.fastembed_model)

embedding = next(embedding_model.embed(data))

return embedding.tolist()

```

## /src/vanna/base/__init__.py

```py path="/src/vanna/base/__init__.py"

from .base import VannaBase

```

## /src/vanna/base/base.py

```py path="/src/vanna/base/base.py"

r"""

# Nomenclature

| Prefix | Definition | Examples |

| --- | --- | --- |

| `vn.get_` | Fetch some data | [`vn.get_related_ddl(...)`][vanna.base.base.VannaBase.get_related_ddl] |

| `vn.add_` | Adds something to the retrieval layer | [`vn.add_question_sql(...)`][vanna.base.base.VannaBase.add_question_sql]

[`vn.add_ddl(...)`][vanna.base.base.VannaBase.add_ddl] |

| `vn.generate_` | Generates something using AI based on the information in the model | [`vn.generate_sql(...)`][vanna.base.base.VannaBase.generate_sql]

[`vn.generate_explanation()`][vanna.base.base.VannaBase.generate_explanation] |

| `vn.run_` | Runs code (SQL) | [`vn.run_sql`][vanna.base.base.VannaBase.run_sql] |

| `vn.remove_` | Removes something from the retrieval layer | [`vn.remove_training_data`][vanna.base.base.VannaBase.remove_training_data] |