```

├── .github/

├── FUNDING.yml

├── ISSUE_TEMPLATE/

├── bug_report.md (100 tokens)

├── feature_request.md (100 tokens)

├── workflows/

├── codeql-analyze.yaml (500 tokens)

├── docker.yml (600 tokens)

├── stale.yml (200 tokens)

├── .gitignore (100 tokens)

├── LICENSE (omitted)

├── README.md (1200 tokens)

├── SECURITY.md

├── docker/

├── Dockerfile (600 tokens)

├── README.md (1200 tokens)

├── compose.yaml (100 tokens)

├── entrypoint.sh (1300 tokens)

├── package-lock.json (omitted)

├── package.json

├── qodana.yaml

├── src/

├── Utilities.py (500 tokens)

├── certbot.ini

├── dashboard.py (29.8k tokens)

├── gunicorn.conf.py (200 tokens)

├── modules/

├── DashboardLogger.py (300 tokens)

├── Email.py (600 tokens)

├── Log.py (100 tokens)

├── PeerJob.py (200 tokens)

├── PeerJobLogger.py (500 tokens)

├── SystemStatus.py (800 tokens)

├── requirements.txt

├── static/

├── app/

├── .gitignore (100 tokens)

├── build.sh (100 tokens)

├── dist/

├── assets/

├── bootstrap-icons-BOrJxbIo.woff

├── bootstrap-icons-BtvjY1KL.woff2

├── browser-CjSdxGTc.js (4.8k tokens)

├── configuration-qGYhSc1H.js (100 tokens)

├── configurationList-BY8izVeQ.css

├── configurationList-Cznk60kB.js (2.8k tokens)

├── dayjs.min-PaIL06iQ.js (1400 tokens)

├── editConfiguration-Ca_IlmFH.css (1500 tokens)

├── editConfiguration-gjtFMIrx.js (5.7k tokens)

├── index-2ZxMyhlP.css (400 tokens)

├── index-DFl-XeJT.css (84k tokens)

├── index-DZliHkQD.js (43.9k tokens)

├── index-L60y6kc9.js (300 tokens)

├── index-UG7VB4Xm.js (9.7k tokens)

├── index-myupzXki.js (34.7k tokens)

├── localeText-DG9SnJT8.js (100 tokens)

├── message-BaDb-qC9.css

├── message-DZx7QSKr.js (300 tokens)

├── newConfiguration-CAFzjDsW.css

├── newConfiguration-CPAMxqV6.js (2.5k tokens)

├── osmap-CmjRjQ0N.js (62.2k tokens)

├── osmap-CoctJCk_.css (1100 tokens)

├── peerAddModal-DD-hu-9U.js (4.1k tokens)

├── peerAddModal-s_w1PY7H.css (100 tokens)

├── peerConfigurationFile-BFmmapnE.css (100 tokens)

├── peerConfigurationFile-BaTwLYIc.js (500 tokens)

├── peerJobs-tiQPhspt.js (500 tokens)

├── peerJobs-voXURBEt.css (100 tokens)

├── peerJobsAllModal-BnD87caA.js (500 tokens)

├── peerJobsLogsModal-9H0FTeh1.js (1100 tokens)

├── peerList-CT7gvMs4.js (7.3k tokens)

├── peerList-EJuRsJAQ.css (15k tokens)

├── peerQRCode-B62rUhHV.css

├── peerQRCode-Dxvodnam.js (400 tokens)

├── peerSearchBar-BrbvrjWX.css

├── peerSearchBar-JmFRkc04.js (400 tokens)

├── peerSettings-BkfvIQvk.css

├── peerSettings-Bne_y8f7.js (1600 tokens)

├── peerShareLinkModal-Cl59KMsF.js (2k tokens)

├── peerShareLinkModal-ouYxVldA.css (100 tokens)

├── ping-CrzXKpFV.js (1500 tokens)

├── ping-DojRH9NX.css (100 tokens)

├── protocolBadge-rpLx04Ri.js (100 tokens)

├── restoreConfiguration-CNCGDtFu.js (2.8k tokens)

├── restoreConfiguration-VgIx_N7z.css

├── schedulePeerJob-Ci8HK7bA.js (1200 tokens)

├── schedulePeerJob-DPy59wup.css (100 tokens)

├── selectPeers-7CTijh1V.js (1300 tokens)

├── selectPeers-Wjnh8YUZ.css (100 tokens)

├── settings-CrxzSFju.js (7.3k tokens)

├── settings-DbQs6Bt_.css (200 tokens)

├── setup-BmUSkunY.js (700 tokens)

├── share-B4McccvP.css

├── share-CsPN7pXl.js (600 tokens)

├── signin-BKQo1OCC.js (2.2k tokens)

├── signin-CHulm0U0.css (100 tokens)

├── storageMount-Bl6vLa1N.css

├── storageMount.vue_vue_type_style_index_0_scoped_9509d7a0_lang-qJ02b5eW.js (200 tokens)

├── systemStatus-C-kMgV1x.css (100 tokens)

├── systemStatus-DHmERTYR.js (2.1k tokens)

├── totp-G9lM0GPx.js (600 tokens)

├── traceroute-Bd2NnUB2.js (700 tokens)

├── traceroute-DH1nb6XH.css (100 tokens)

├── vue-datepicker-4ltJE5cT.js (36.6k tokens)

├── img/

├── Logo-1-128x128.png

├── Logo-1-256x256.png

├── Logo-1-384x384.png

├── Logo-1-512x512.png

├── Logo-1-Maskable-512x512.png

├── Logo-1-Rounded-128x128.png

├── Logo-1-Rounded-256x256.png

├── Logo-1-Rounded-384x384.png

├── Logo-1-Rounded-512x512.png

├── Logo-2-128x128.png

├── Logo-2-256x256.png

├── Logo-2-384x384.png

├── Logo-2-512x512.png

├── Logo-2-Rounded-128x128.png

├── Logo-2-Rounded-256x256.png

├── Logo-2-Rounded-384x384.png

├── Logo-2-Rounded-512x512.png

├── index.html (200 tokens)

├── json/

├── manifest.json (300 tokens)

├── index.html (100 tokens)

├── jsconfig.json

├── package-lock.json (52.4k tokens)

├── package.json (200 tokens)

├── proxy.js

├── public/

├── img/

├── Logo-1-128x128.png

├── Logo-1-256x256.png

├── Logo-1-384x384.png

├── Logo-1-512x512.png

├── Logo-1-Maskable-512x512.png

├── Logo-1-Rounded-128x128.png

├── Logo-1-Rounded-256x256.png

├── Logo-1-Rounded-384x384.png

├── Logo-1-Rounded-512x512.png

├── Logo-2-128x128.png

├── Logo-2-256x256.png

├── Logo-2-384x384.png

├── Logo-2-512x512.png

├── Logo-2-Rounded-128x128.png

├── Logo-2-Rounded-256x256.png

├── Logo-2-Rounded-384x384.png

├── Logo-2-Rounded-512x512.png

├── json/

├── manifest.json (300 tokens)

├── src/

├── App.vue (400 tokens)

├── components/

├── configurationComponents/

├── backupRestoreComponents/

├── backup.vue (1200 tokens)

├── configurationBackupRestore.vue (700 tokens)

├── deleteConfiguration.vue (900 tokens)

├── editConfiguration.vue (2.1k tokens)

├── editConfigurationComponents/

├── editRawConfigurationFile.vue (700 tokens)

├── updateConfigurationName.vue (700 tokens)

├── newPeersComponents/

├── allowedIPsInput.vue (1400 tokens)

├── bulkAdd.vue (400 tokens)

├── dnsInput.vue (300 tokens)

├── endpointAllowedIps.vue (400 tokens)

├── mtuInput.vue (100 tokens)

├── nameInput.vue (100 tokens)

├── persistentKeepAliveInput.vue (100 tokens)

├── presharedKeyInput.vue (200 tokens)

├── privatePublicKeyInput.vue (800 tokens)

├── peer.vue (900 tokens)

├── peerAddModal.vue (1400 tokens)

├── peerConfigurationFile.vue (700 tokens)

├── peerCreate.vue (1300 tokens)

├── peerIntersectionObserver.vue (100 tokens)

├── peerJobs.vue (700 tokens)

├── peerJobsAllModal.vue (600 tokens)

├── peerJobsLogsModal.vue (1200 tokens)

├── peerList.old.vue (5.1k tokens)

├── peerList.vue (3.2k tokens)

├── peerListComponents/

├── peerDataUsageCharts.vue (1400 tokens)

├── peerListModals.vue (600 tokens)

├── peerQRCode.vue (500 tokens)

├── peerRow.vue (400 tokens)

├── peerScheduleJobsComponents/

├── scheduleDropdown.vue (200 tokens)

├── schedulePeerJob.vue (1200 tokens)

├── peerSearch.vue (1500 tokens)

├── peerSearchBar.vue (500 tokens)

├── peerSettings.vue (1900 tokens)

├── peerSettingsDropdown.vue (1500 tokens)

├── peerSettingsDropdownComponents/

├── peerSettingsDropdownTool.vue (200 tokens)

├── peerShareLinkComponents/

├── peerShareWithEmail.vue (1000 tokens)

├── peerShareWithEmailBodyPreview.vue (300 tokens)

├── peerShareLinkModal.vue (1200 tokens)

├── restrictedPeers.vue (100 tokens)

├── selectPeers.vue (1800 tokens)

├── configurationList.vue (1400 tokens)

├── configurationListComponents/

├── configurationCard.vue (900 tokens)

├── map/

├── osmap.vue (700 tokens)

├── messageCentreComponent/

├── message.vue (400 tokens)

├── navbar.vue (1400 tokens)

├── navbarComponents/

├── agentContainer.vue (1000 tokens)

├── agentMessage.vue (300 tokens)

├── agentModal.vue (700 tokens)

├── helpModal.vue (500 tokens)

├── protocolBadge.vue (100 tokens)

├── restoreConfigurationComponents/

├── backupGroup.vue (500 tokens)

├── confirmBackup.vue (2.3k tokens)

├── uploadModal.vue (1000 tokens)

├── settingsComponent/

├── accountSettingsInputPassword.vue (800 tokens)

├── accountSettingsInputUsername.vue (400 tokens)

├── accountSettingsMFA.vue (400 tokens)

├── dashboardAPIKeys.vue (800 tokens)

├── dashboardAPIKeysComponents/

├── dashboardAPIKey.vue (500 tokens)

├── newDashboardAPIKey.vue (700 tokens)

├── dashboardEmailSettings.vue (1200 tokens)

├── dashboardIPPortInput.vue (600 tokens)

├── dashboardLanguage.vue (500 tokens)

├── dashboardSettingsInputIPAddressAndPort.vue (500 tokens)

├── dashboardSettingsInputWireguardConfigurationPath.vue (700 tokens)

├── dashboardSettingsWireguardConfigurationAutostart.vue (400 tokens)

├── dashboardTheme.vue (300 tokens)

├── peersDefaultSettingsInput.vue (500 tokens)

├── setupComponent/

├── totp.vue (800 tokens)

├── signIn/

├── signInInput.vue (100 tokens)

├── signInTOTP.vue (100 tokens)

├── signInComponents/

├── RemoteServer.vue (800 tokens)

├── RemoteServerList.vue (300 tokens)

├── systemStatusComponents/

├── cpuCore.vue (200 tokens)

├── process.vue (100 tokens)

├── storageMount.vue (200 tokens)

├── systemStatusWidget.vue (800 tokens)

├── text/

├── localeText.vue (100 tokens)

├── css/

├── dashboard.css (4.6k tokens)

├── main.js (200 tokens)

├── models/

├── WireguardConfigurations.js (100 tokens)

├── router/

├── router.js (900 tokens)

├── stores/

├── DashboardConfigurationStore.js (600 tokens)

├── WireguardConfigurationsStore.js (600 tokens)

├── utilities/

├── cookie.js

├── fetch.js (400 tokens)

├── ipCheck.js

├── locale.js (100 tokens)

├── parseConfigurationFile.js (200 tokens)

├── simple-code-editor/

├── CodeEditor.vue (2.5k tokens)

├── Dropdown.vue (200 tokens)

├── themes/

├── themes.css (1000 tokens)

├── wireguard.js (1600 tokens)

├── views/

├── configuration.vue (100 tokens)

├── index.vue (300 tokens)

├── newConfiguration.vue (2.8k tokens)

├── ping.vue (1700 tokens)

├── restoreConfiguration.vue (1000 tokens)

├── settings.vue (1300 tokens)

├── setup.vue (1000 tokens)

├── share.vue (800 tokens)

├── signin.vue (1300 tokens)

├── systemStatus.vue (2.8k tokens)

├── traceroute.vue (1000 tokens)

├── vite.config.js (200 tokens)

├── locale/

├── active_languages.json (600 tokens)

├── ar-sa.json (3.6k tokens)

├── be.json (3.8k tokens)

├── ca.json (4.2k tokens)

├── cs.json (3.6k tokens)

├── de-de.json (4k tokens)

├── es-es.json (3.6k tokens)

├── fa.json (3.6k tokens)

├── fr-ca.json (3.9k tokens)

├── fr-fr.json (3.9k tokens)

├── hu-hu.json (4k tokens)

├── id.json (3.9k tokens)

├── it-it.json (3.5k tokens)

├── ja-jp.json (3.1k tokens)

├── ko.json (3k tokens)

├── language_template.json (2.3k tokens)

├── nl-nl.json (4k tokens)

├── pl.json (3.9k tokens)

├── ru.json (3.8k tokens)

├── sv-se.json (3.8k tokens)

├── th.json (3.6k tokens)

├── tr-tr.json (3.7k tokens)

├── uk.json (3.4k tokens)

├── verify_locale_files.py (400 tokens)

├── zh-cn.json (2.8k tokens)

├── zh-hk.json (2.8k tokens)

├── test.sh (100 tokens)

├── wg-dashboard.service (100 tokens)

├── wgd.sh (4.1k tokens)

```

## /.github/FUNDING.yml

```yml path="/.github/FUNDING.yml"

# These are supported funding model platforms

github: [donaldzou]

patreon: DonaldDonnyZou

```

## /.github/ISSUE_TEMPLATE/bug_report.md

---

name: Bug report

about: Create a report to help us improve

title: ''

labels: bug

assignees: ''

---

**Describe The Problem**

A clear and concise description of what the bug is.

**Expected Error / Traceback**

```

Please provide the error traceback here

```

**To Reproduce**

Please provide how you run the dashboard

**OS Information:**

- OS: [e.g. Ubuntu 18.04]

- Python Version: [e.g. v3.7]

**Sample of your `.conf` file**

```

Please provide a sample of the configuration file you are having problems with. You can replace your public and private keys with ABCD...

```

## /.github/ISSUE_TEMPLATE/feature_request.md

---

name: Feature request

about: Suggest an idea for this project

title: ''

labels: enhancement

assignees: ''

---

**Is your feature request related to a problem? Please describe.**

A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**

A clear and concise description of what you want to happen.

## /.github/workflows/codeql-analyze.yaml

```yaml path="/.github/workflows/codeql-analyze.yaml"

# For most projects, this workflow file will not need changing; you simply need
# to commit it to your repository.
#
# You may wish to alter this file to override the set of languages analyzed,
# or to provide custom queries or build logic.
#
# ******** NOTE ********
# We have attempted to detect the languages in your repository. Please check
# the `language` matrix defined below to confirm you have the correct set of
# supported CodeQL languages.
#
name: "CodeQL"

on:
  workflow_dispatch:
  push:
    branches: [ main ]
  pull_request:
    # The branches below must be a subset of the branches above
    branches: [ main ]
  schedule:
    - cron: '30 5 * * 4'

jobs:
  analyze:
    name: Analyze
    runs-on: ubuntu-latest
    permissions:
      actions: read
      contents: read
      security-events: write

    strategy:
      fail-fast: false
      matrix:
        language: [ 'javascript', 'python' ]
        # CodeQL supports [ 'cpp', 'csharp', 'go', 'java', 'javascript', 'python', 'ruby' ]
        # Learn more about CodeQL language support at https://git.io/codeql-language-support

    steps:
      - name: Checkout repository
        uses: actions/checkout@v3

      # Initializes the CodeQL tools for scanning.
      - name: Initialize CodeQL
        uses: github/codeql-action/init@v3
        with:
          languages: ${{ matrix.language }}
          # If you wish to specify custom queries, you can do so here or in a config file.
          # By default, queries listed here will override any specified in a config file.
          # Prefix the list here with "+" to use these queries and those in the config file.
          # queries: ./path/to/local/query, your-org/your-repo/queries@main

      # Autobuild attempts to build any compiled languages (C/C++, C#, or Java).
      # If this step fails, then you should remove it and run the build manually (see below)
      - name: Autobuild
        uses: github/codeql-action/autobuild@v3

      # ℹ️ Command-line programs to run using the OS shell.
      # 📚 https://git.io/JvXDl

      # ✏️ If the Autobuild fails above, remove it and uncomment the following three lines
      #    and modify them (or add more) to build your code if your project
      #    uses a compiled language

      #- run: |
      #   make bootstrap
      #   make release

      - name: Perform CodeQL Analysis
        uses: github/codeql-action/analyze@v3

```

## /.github/workflows/docker.yml

```yml path="/.github/workflows/docker.yml"

name: Docker Build and Push

on:
  workflow_dispatch:
  push:
    branches:
      - 'main'
    tags:
      - '*'
  release:
    types: [ published ]

env:
  DOCKERHUB_PREFIX: docker.io
  GITHUB_CONTAINER_PREFIX: ghcr.io
  DOCKER_IMAGE: donaldzou/wgdashboard

jobs:
  docker_build:
    runs-on: ubuntu-latest
    strategy:
      fail-fast: false
    steps:
      - name: Checkout repository
        uses: actions/checkout@v4

      - name: Log in to Docker Hub
        uses: docker/login-action@v3
        with:
          registry: ${{ env.DOCKERHUB_PREFIX }}
          username: ${{ secrets.DOCKER_HUB_USERNAME }}
          password: ${{ secrets.DOCKER_HUB_PASSWORD }}

      - name: Log in to GitHub Container Registry
        uses: docker/login-action@v3
        with:
          registry: ${{ env.GITHUB_CONTAINER_PREFIX }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3
        with:
          platforms: |
            - linux/amd64
            - linux/arm64
            - linux/arm/v7

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Docker meta by docs https://github.com/docker/metadata-action
        id: meta
        uses: docker/metadata-action@v5
        with:
          images: |
            ${{ env.DOCKERHUB_PREFIX }}/${{ env.DOCKER_IMAGE }}
            ${{ env.GITHUB_CONTAINER_PREFIX }}/${{ env.DOCKER_IMAGE }}
          tags: |
            type=ref,event=branch
            type=ref,event=tag

      - name: Build and export (multi-arch)
        uses: docker/build-push-action@v6
        with:
          context: .
          file: ./docker/Dockerfile
          push: ${{ github.event_name != 'pull_request' }}
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}
          platforms: linux/amd64,linux/arm64,linux/arm/v7

  docker_scan:
    if: ${{ github.event_name != 'pull_request' }}
    runs-on: ubuntu-latest
    needs: docker_build
    steps:
      - name: Log in to Docker Hub
        uses: docker/login-action@v3
        with:
          registry: ${{ env.DOCKERHUB_PREFIX }}
          username: ${{ secrets.DOCKER_HUB_USERNAME }}
          password: ${{ secrets.DOCKER_HUB_PASSWORD }}

      - name: Docker Scout CVEs
        uses: docker/scout-action@v1
        with:
          command: cves
          image: ${{ env.GITHUB_CONTAINER_PREFIX }}/${{ env.DOCKER_IMAGE }}:main
          only-severities: critical,high
          only-fixed: true
          write-comment: true
          github-token: ${{ secrets.GITHUB_TOKEN }}
          exit-code: true

      - name: Docker Scout Compare
        uses: docker/scout-action@v1
        with:
          command: compare
          # Set to Github for maximum compat
          image: ${{ env.GITHUB_CONTAINER_PREFIX }}/${{ env.DOCKER_IMAGE }}:main
          to: ${{ env.GITHUB_CONTAINER_PREFIX }}/${{ env.DOCKER_IMAGE }}:latest
          only-severities: critical,high
          ignore-unchanged: true
          github-token: ${{ secrets.GITHUB_TOKEN }}

```

## /.github/workflows/stale.yml

```yml path="/.github/workflows/stale.yml"

# This workflow warns and then closes issues and PRs that have had no activity for a specified amount of time.
#
# You can adjust the behavior by modifying this file.
# For more information, see:
# https://github.com/actions/stale
name: Mark stale issues and pull requests

on:
  workflow_dispatch:
  schedule:
    - cron: '00 08 * * *'

jobs:
  stale:
    runs-on: ubuntu-latest
    permissions:
      issues: write

    steps:
      - uses: actions/stale@v9
        with:
          repo-token: ${{ secrets.GITHUB_TOKEN }}
          stale-issue-message: 'This issue has not been updated for 20 days'
          stale-pr-message: 'This pull request has not been updated for 20 days'
          stale-issue-label: 'stale'
          exempt-issue-labels: 'enhancement,ongoing'
          days-before-stale: 20

```

## /.gitignore

```gitignore path="/.gitignore"

.vscode

.DS_Store

.idea

src/db

__pycache__

src/test.py

*.db

src/wg-dashboard.ini

src/static/pic.xd

*.conf

private_key.txt

public_key.txt

venv/**

log/**

release/*

src/db/wgdashboard.db

.jshintrc

node_modules/**

*/proxy.js

src/static/app/proxy.js

.secrets

# Logs

logs

*.log

npm-debug.log*

yarn-debug.log*

yarn-error.log*

pnpm-debug.log*

lerna-debug.log*

node_modules

.DS_Store

dist-ssr

coverage

*.local

/cypress/videos/

/cypress/screenshots/

# Editor directories and files

.vscode/*

!.vscode/extensions.json

.idea

*.suo

*.ntvs*

*.njsproj

*.sln

*.sw?

*.tsbuildinfo

.vite/*

```

## /README.md

> [!TIP]

> 🎉 I'm excited to announce that WGDashboard is officially listed on DigitalOcean's Marketplace! For more information, please visit [Host WGDashboard & WireGuard with DigitalOcean](https://donaldzou.dev/WGDashboard-Documentation/host-wgdashboard-wireguard-with-digitalocean.html).

> [!NOTE]

> **Help Wanted 🎉**: Localizing WGDashboard to other languages! If you're willing to help, please visit https://github.com/donaldzou/WGDashboard/issues/397. Many thanks!

This project is supported by

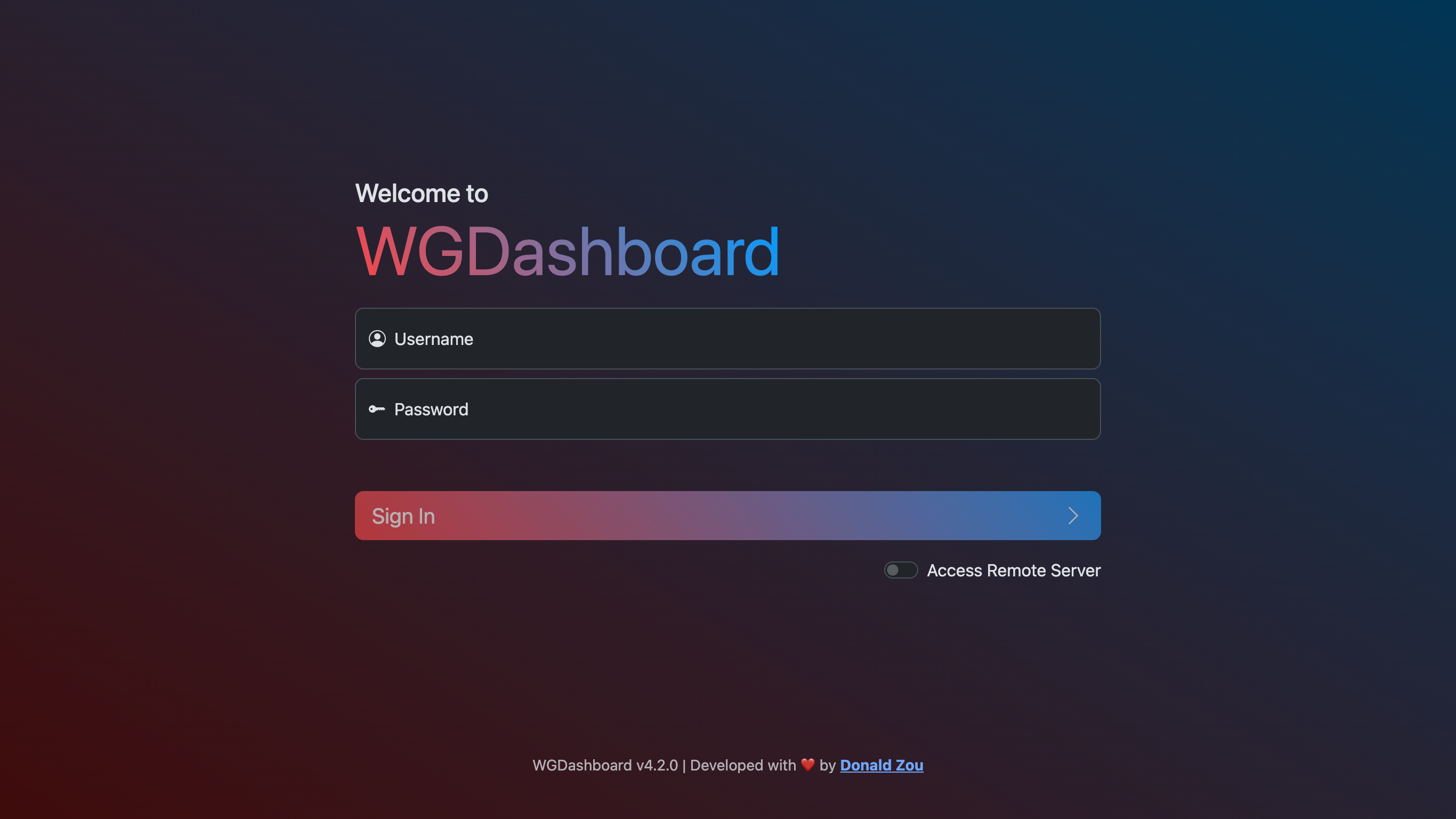

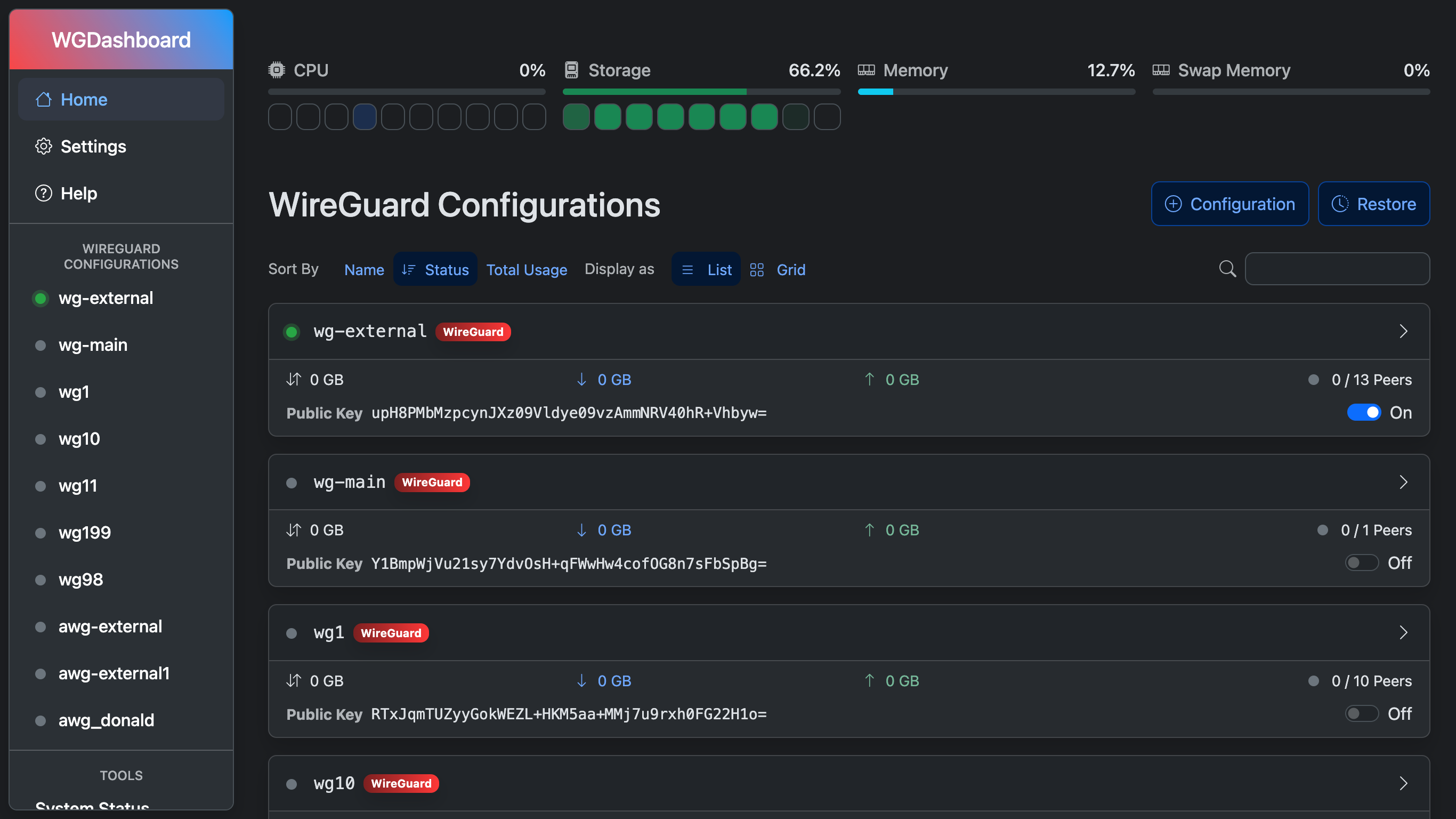

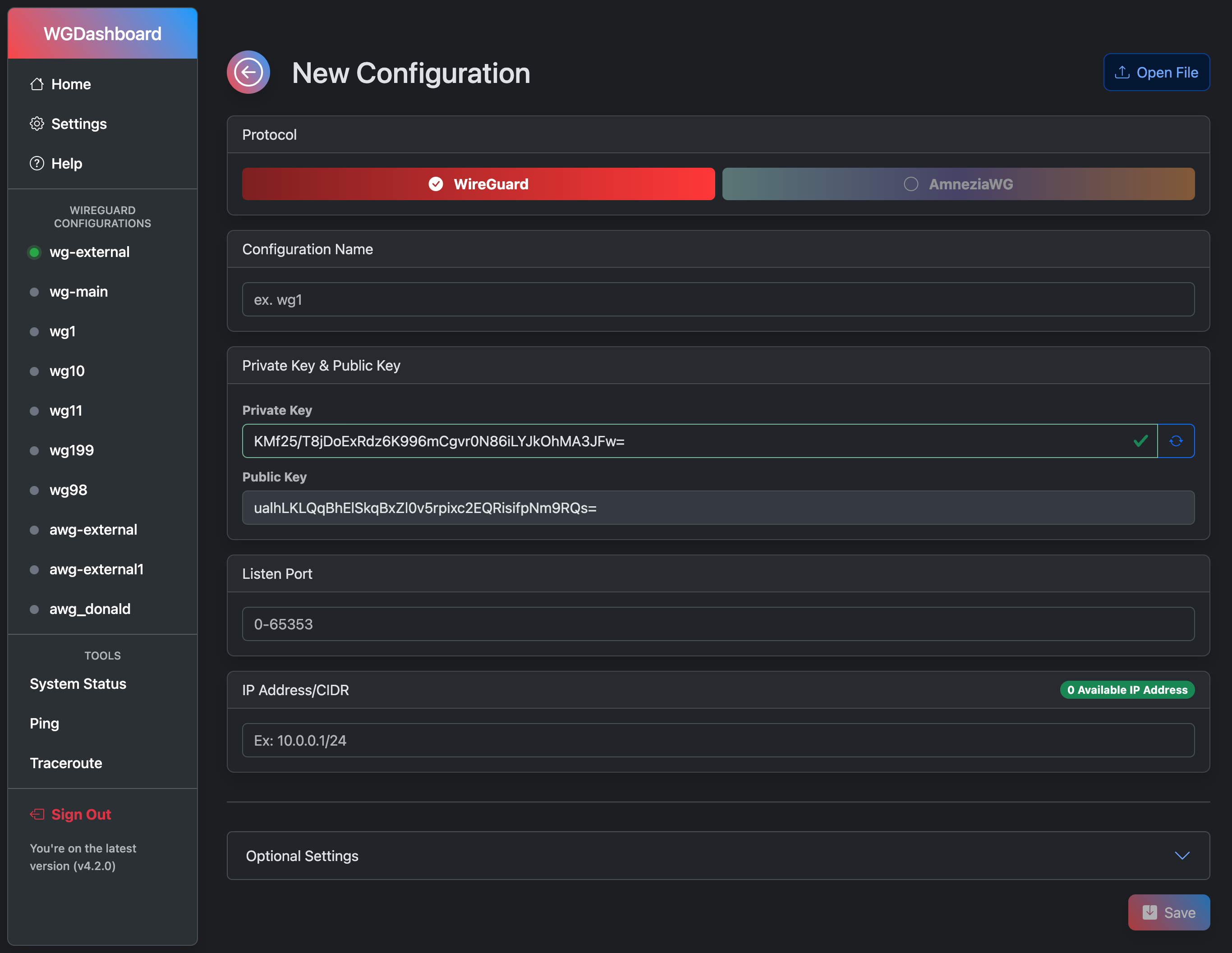

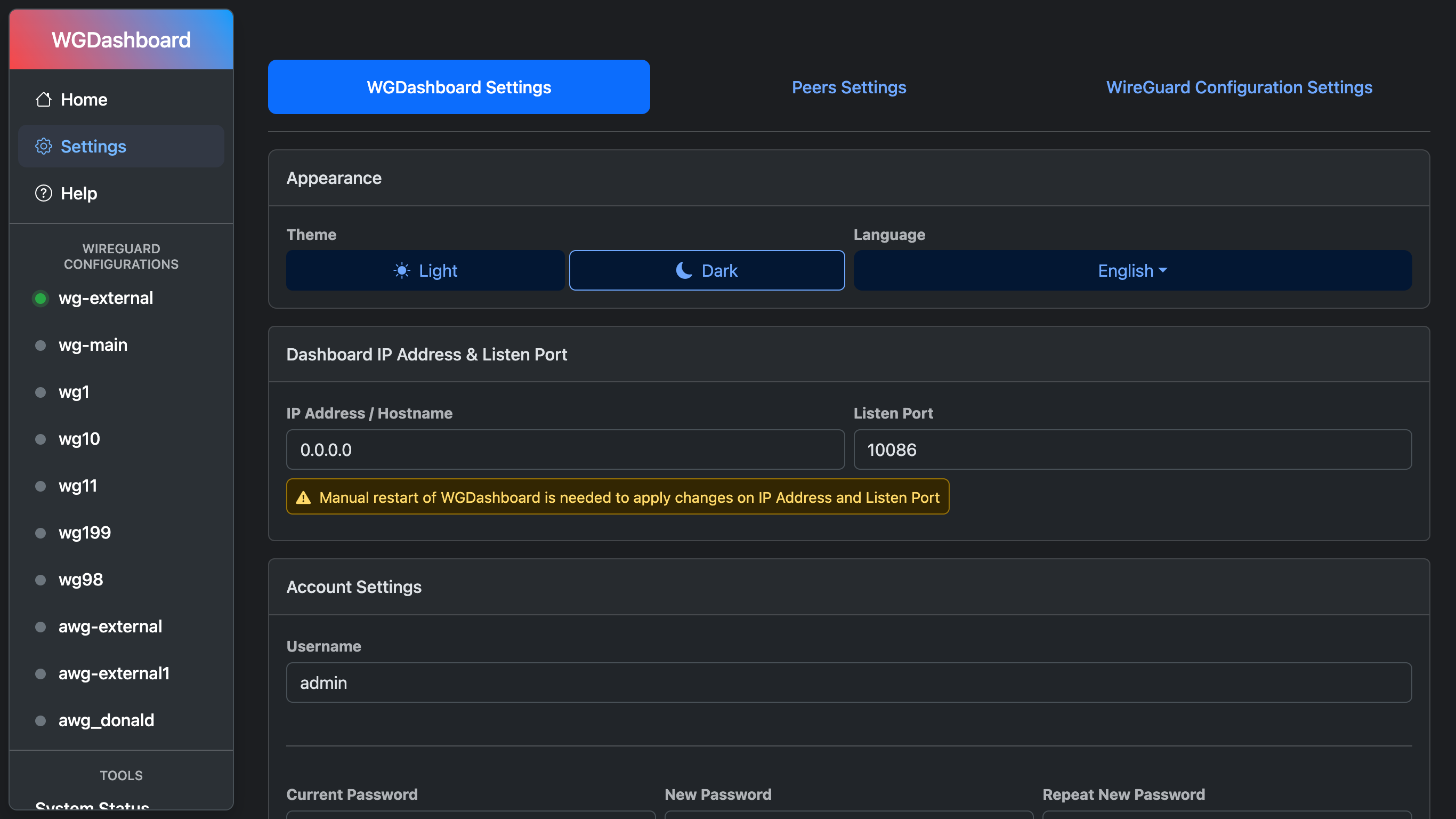

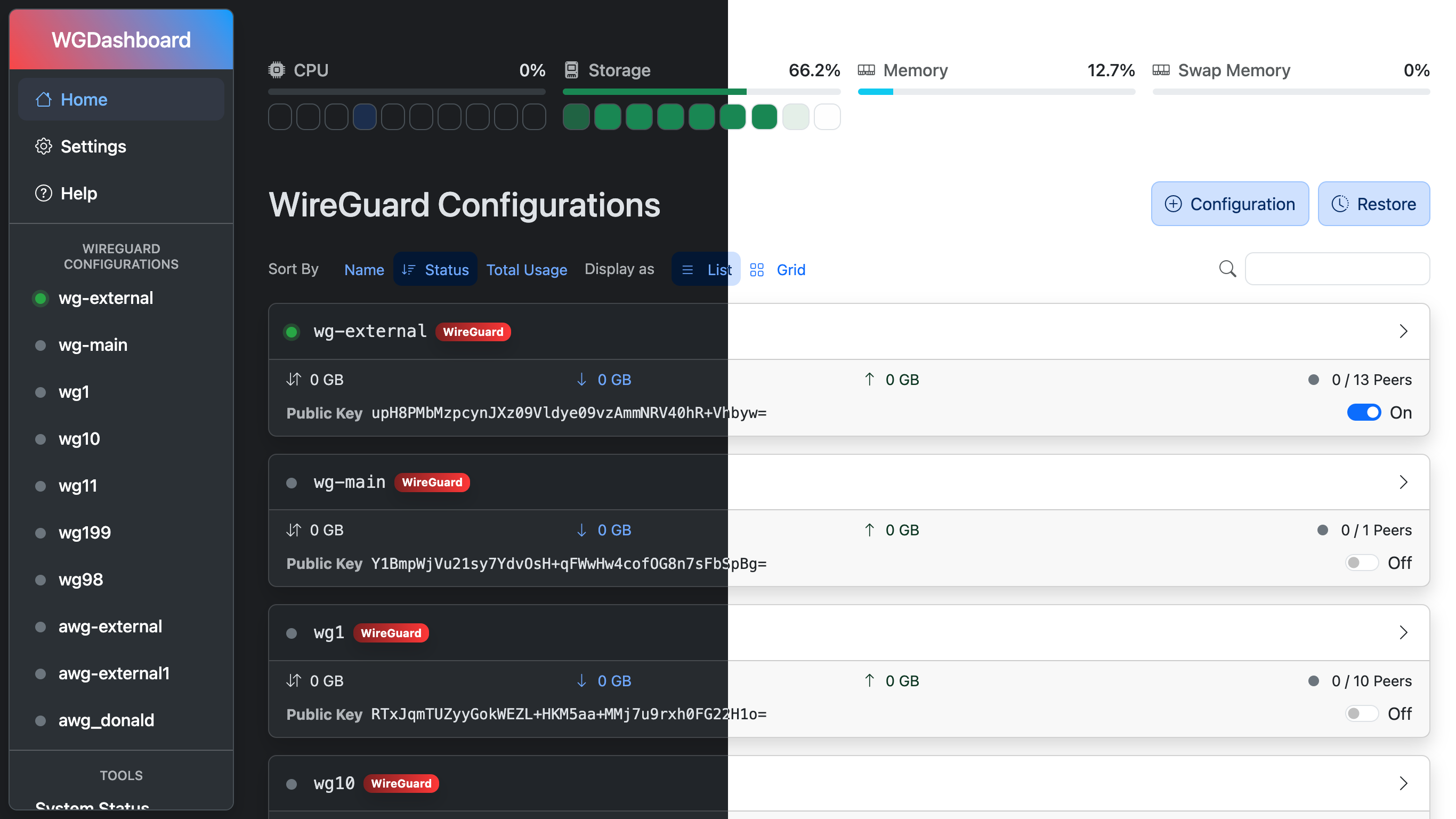

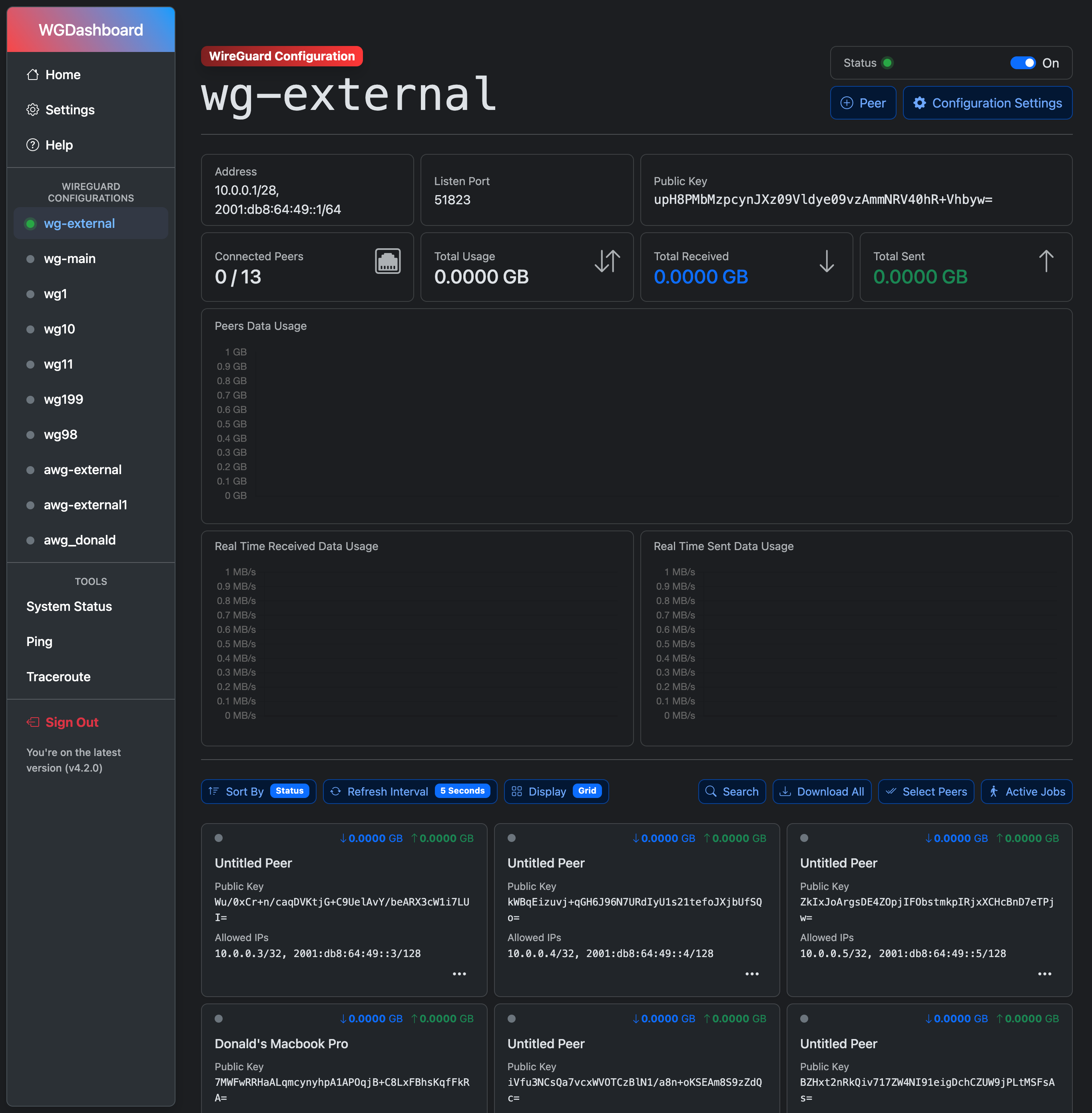

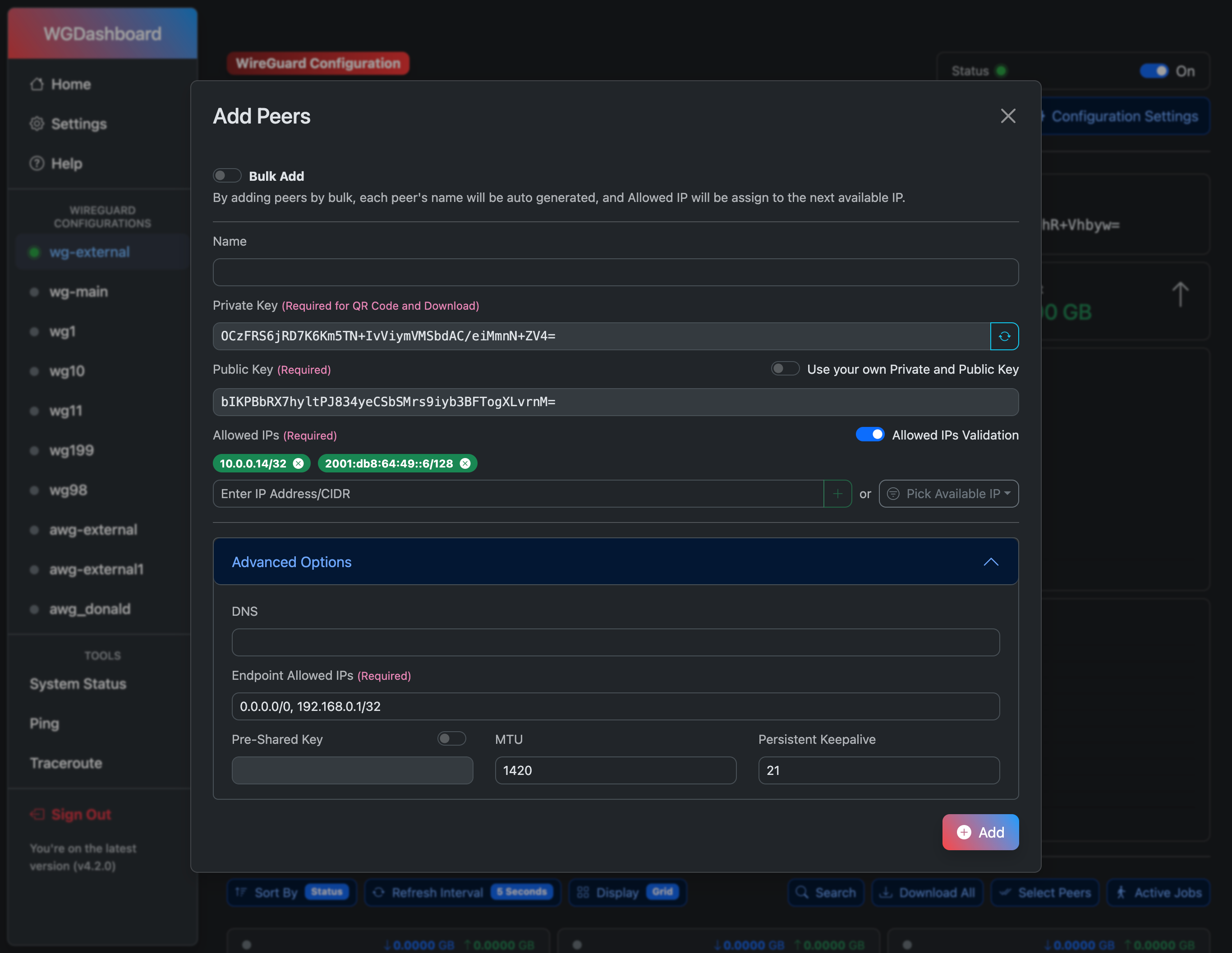

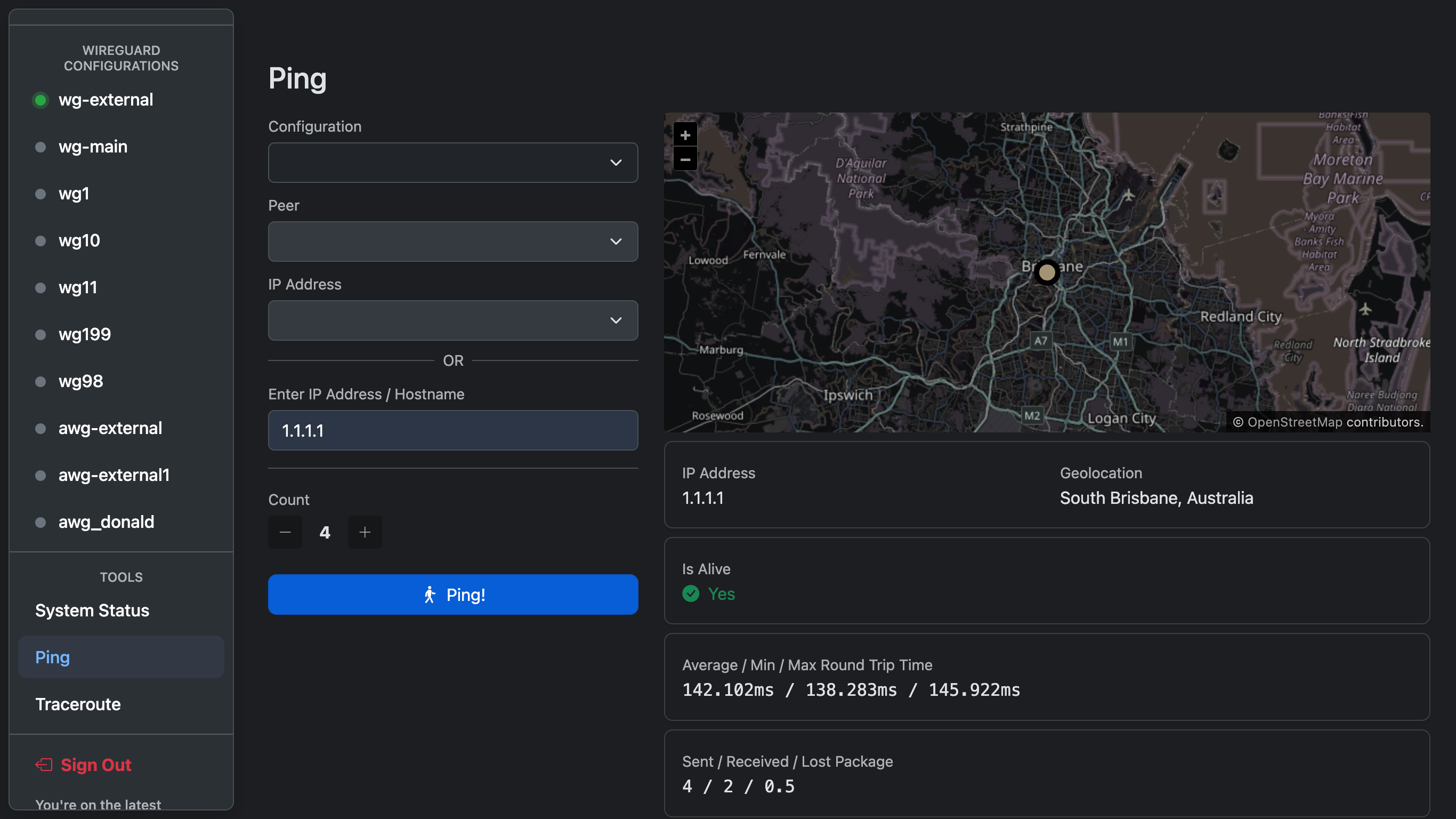

Monitoring WireGuard is not convenient: in most cases, you'll need to log in to your server and type `wg show`. That's why this project was created: to view and manage all WireGuard configurations in an easy way.
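As a rough illustration of the manual workflow described above, the sketch below parses the tab-separated output of `wg show <interface> dump` into peer records. It is not taken from WGDashboard's code. `PeerStatus`, `parse_wg_dump`, and the sample keys and addresses are made up for the example, and it assumes the single-interface form of the command (the first line describes the interface, and each following line describes one peer).

```python
from dataclasses import dataclass


@dataclass
class PeerStatus:
    public_key: str
    endpoint: str
    allowed_ips: list[str]
    latest_handshake: int  # Unix timestamp; 0 means no handshake yet
    transfer_rx: int       # bytes received
    transfer_tx: int       # bytes sent


def parse_wg_dump(dump: str) -> list[PeerStatus]:
    """Parse the text printed by `wg show <interface> dump` into peer records."""
    lines = [line for line in dump.splitlines() if line.strip()]
    peers = []
    # Skip the first line: it holds the interface's own keys, port and fwmark.
    for line in lines[1:]:
        fields = line.split("\t")
        if len(fields) != 8:
            continue  # not a peer line in the expected layout
        public_key, _psk, endpoint, allowed_ips, handshake, rx, tx, _keepalive = fields
        peers.append(PeerStatus(
            public_key=public_key,
            endpoint=endpoint,
            allowed_ips=[] if allowed_ips == "(none)" else allowed_ips.split(","),
            latest_handshake=int(handshake),
            transfer_rx=int(rx),
            transfer_tx=int(tx),
        ))
    return peers


# Placeholder data for illustration only; real keys are base64 strings.
SAMPLE_DUMP = (
    "PRIVKEY\tPUBKEY\t51820\toff\n"
    "PEERKEY1\t(none)\t203.0.113.5:51820\t10.0.0.2/32\t1700000000\t1024\t2048\t25\n"
    "PEERKEY2\t(none)\t(none)\t10.0.0.3/32\t0\t0\t0\toff\n"
)

for peer in parse_wg_dump(SAMPLE_DUMP):
    print(peer.public_key, peer.allowed_ips, peer.transfer_rx, peer.transfer_tx)
```

On a real server the input would come from running `wg show wg0 dump` with sufficient privileges; `wg0` is only an example interface name.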

With all these awesome features, while keeping it easy to install and use

This project is not affiliated with the official WireGuard Project

Looking for help or want to chat about this project?

You can reach out at

Want to support this project?

You can support via

or, visit our merch store and support us by purchasing merch for only USD $17.00 (including worldwide shipping & duties)

for more information, visit our

# Screenshots

')

def API_updatePeerSettings(configName):
    data = request.get_json()
    id = data['id']
    if len(id) > 0 and configName in WireguardConfigurations.keys():
        name = data['name']
        private_key = data['private_key']
        dns_addresses = data['DNS']
        allowed_ip = data['allowed_ip']
        endpoint_allowed_ip = data['endpoint_allowed_ip']
        preshared_key = data['preshared_key']
        mtu = data['mtu']
        keepalive = data['keepalive']
        wireguardConfig = WireguardConfigurations[configName]
        foundPeer, peer = wireguardConfig.searchPeer(id)
        if foundPeer:
            if wireguardConfig.Protocol == 'wg':
                return peer.updatePeer(name, private_key, preshared_key, dns_addresses,
                                       allowed_ip, endpoint_allowed_ip, mtu, keepalive)
            return peer.updatePeer(name, private_key, preshared_key, dns_addresses,
                                   allowed_ip, endpoint_allowed_ip, mtu, keepalive, "off")
    return ResponseObject(False, "Peer does not exist")

@app.post(f'{APP_PREFIX}/api/resetPeerData/<configName>')
def API_resetPeerData(configName):
    data = request.get_json()
    id = data['id']
    type = data['type']
    if len(id) == 0 or configName not in WireguardConfigurations.keys():
        return ResponseObject(False, "Configuration/Peer does not exist")
    wgc = WireguardConfigurations.get(configName)
    foundPeer, peer = wgc.searchPeer(id)
    if not foundPeer:
        return ResponseObject(False, "Configuration/Peer does not exist")
    resetStatus = peer.resetDataUsage(type)
    if resetStatus:
        wgc.restrictPeers([id])
        wgc.allowAccessPeers([id])
    return ResponseObject(status=resetStatus)

@app.post(f'{APP_PREFIX}/api/deletePeers/<configName>')
def API_deletePeers(configName: str) -> ResponseObject:
    data = request.get_json()
    peers = data['peers']
    if configName in WireguardConfigurations.keys():
        if len(peers) == 0:
            return ResponseObject(False, "Please specify one or more peers", status_code=400)
        configuration = WireguardConfigurations.get(configName)
        return configuration.deletePeers(peers)
    return ResponseObject(False, "Configuration does not exist", status_code=404)

@app.post(f'{APP_PREFIX}/api/restrictPeers/<configName>')
def API_restrictPeers(configName: str) -> ResponseObject:
    data = request.get_json()
    peers = data['peers']
    if configName in WireguardConfigurations.keys():
        if len(peers) == 0:
            return ResponseObject(False, "Please specify one or more peers")
        configuration = WireguardConfigurations.get(configName)
        return configuration.restrictPeers(peers)
    return ResponseObject(False, "Configuration does not exist", status_code=404)

@app.post(f'{APP_PREFIX}/api/sharePeer/create')
def API_sharePeer_create():
    data: dict[str, str] = request.get_json()
    Configuration = data.get('Configuration')
    Peer = data.get('Peer')
    ExpireDate = data.get('ExpireDate')
    if Configuration is None or Peer is None:
        return ResponseObject(False, "Please specify configuration and peers")
    activeLink = AllPeerShareLinks.getLink(Configuration, Peer)
    if len(activeLink) > 0:
        return ResponseObject(True,
                              "This peer is already sharing. Please view data for shared link.",
                              data=activeLink[0]
                              )
    status, message = AllPeerShareLinks.addLink(Configuration, Peer, ExpireDate)
    if not status:
        return ResponseObject(status, message)
    return ResponseObject(data=AllPeerShareLinks.getLinkByID(message))

@app.post(f'{APP_PREFIX}/api/sharePeer/update')
def API_sharePeer_update():
    data: dict[str, str] = request.get_json()
    ShareID: str = data.get("ShareID")
    ExpireDate: str = data.get("ExpireDate")

    if ShareID is None:
        return ResponseObject(False, "Please specify ShareID")

    if len(AllPeerShareLinks.getLinkByID(ShareID)) == 0:
        return ResponseObject(False, "ShareID does not exist")

    status, message = AllPeerShareLinks.updateLinkExpireDate(ShareID, ExpireDate)
    if not status:
        return ResponseObject(status, message)
    return ResponseObject(data=AllPeerShareLinks.getLinkByID(ShareID))

@app.get(f'{APP_PREFIX}/api/sharePeer/get')
def API_sharePeer_get():
    data = request.args
    ShareID = data.get("ShareID")
    if ShareID is None or len(ShareID) == 0:
        return ResponseObject(False, "Please provide ShareID")
    link = AllPeerShareLinks.getLinkByID(ShareID)
    if len(link) == 0:
        return ResponseObject(False, "This link is either expired or invalid")
    l = link[0]
    if l.Configuration not in WireguardConfigurations.keys():
        return ResponseObject(False, "The peer you're looking for does not exist")
    c = WireguardConfigurations.get(l.Configuration)
    fp, p = c.searchPeer(l.Peer)
    if not fp:
        return ResponseObject(False, "The peer you're looking for does not exist")
    return ResponseObject(data=p.downloadPeer())

@app.post(f'{APP_PREFIX}/api/allowAccessPeers/<configName>')
def API_allowAccessPeers(configName: str) -> ResponseObject:
    data = request.get_json()
    peers = data['peers']
    if configName in WireguardConfigurations.keys():
        if len(peers) == 0:
            return ResponseObject(False, "Please specify one or more peers")
        configuration = WireguardConfigurations.get(configName)
        return configuration.allowAccessPeers(peers)
    return ResponseObject(False, "Configuration does not exist")

@app.post(f'{APP_PREFIX}/api/addPeers/<configName>')
def API_addPeers(configName):
    if configName in WireguardConfigurations.keys():
        try:
            data: dict = request.get_json()

            bulkAdd: bool = data.get("bulkAdd", False)
            bulkAddAmount: int = data.get('bulkAddAmount', 0)
            preshared_key_bulkAdd: bool = data.get('preshared_key_bulkAdd', False)

            public_key: str = data.get('public_key', "")
            allowed_ips: list[str] = data.get('allowed_ips', [])
            allowed_ips_validation: bool = data.get('allowed_ips_validation', True)

            endpoint_allowed_ip: str = data.get('endpoint_allowed_ip', DashboardConfig.GetConfig("Peers", "peer_endpoint_allowed_ip")[1])
            dns_addresses: str = data.get('DNS', DashboardConfig.GetConfig("Peers", "peer_global_DNS")[1])
            mtu: int = data.get('mtu', int(DashboardConfig.GetConfig("Peers", "peer_MTU")[1]))
            keep_alive: int = data.get('keepalive', int(DashboardConfig.GetConfig("Peers", "peer_keep_alive")[1]))
            preshared_key: str = data.get('preshared_key', "")

            # Fall back to the configured defaults when the supplied values are invalid
            if type(mtu) is not int or mtu < 0 or mtu > 1460:
                mtu = int(DashboardConfig.GetConfig("Peers", "peer_MTU")[1])
            if type(keep_alive) is not int or keep_alive < 0:
                keep_alive = int(DashboardConfig.GetConfig("Peers", "peer_keep_alive")[1])
            config = WireguardConfigurations.get(configName)
            if not config.getStatus():
                config.toggleConfiguration()
            ipStatus, availableIps = config.getAvailableIP(-1)
            ipCountStatus, numberOfAvailableIPs = config.getNumberOfAvailableIP()
            defaultIPSubnet = list(availableIps.keys())[0]
            if bulkAdd:
                if type(preshared_key_bulkAdd) is not bool:
                    preshared_key_bulkAdd = False
                if type(bulkAddAmount) is not int or bulkAddAmount < 1:
                    return ResponseObject(False, "Please specify amount of peers you want to add")
                if not ipStatus:
                    return ResponseObject(False, "No more available IP can assign")
                if len(availableIps.keys()) == 0:
                    return ResponseObject(False, "This configuration does not have any IP address available")
                if bulkAddAmount > sum(list(numberOfAvailableIPs.values())):
                    return ResponseObject(False,
                                          f"The maximum number of peers can add is {sum(list(numberOfAvailableIPs.values()))}")
                keyPairs = []
                addedCount = 0
                for subnet in availableIps.keys():
                    for ip in availableIps[subnet]:
                        newPrivateKey = GenerateWireguardPrivateKey()[1]
                        addedCount += 1
                        keyPairs.append({
                            "private_key": newPrivateKey,
                            "id": GenerateWireguardPublicKey(newPrivateKey)[1],
                            "preshared_key": (GenerateWireguardPrivateKey()[1] if preshared_key_bulkAdd else ""),
                            "allowed_ip": ip,
                            # addedCount is already incremented above, so peer numbering starts at 1
                            "name": f"BulkPeer_{addedCount}_{datetime.now().strftime('%Y%m%d_%H%M%S')}",
                            "DNS": dns_addresses,
                            "endpoint_allowed_ip": endpoint_allowed_ip,
                            "mtu": mtu,
                            "keepalive": keep_alive,
                            "advanced_security": "off"
                        })
                        if addedCount == bulkAddAmount:
                            break
                    if addedCount == bulkAddAmount:
                        break
                if len(keyPairs) == 0 or (bulkAdd and len(keyPairs) != bulkAddAmount):
                    return ResponseObject(False, "Generating key pairs by bulk failed")
                status, result = config.addPeers(keyPairs)
                return ResponseObject(status=status, message=result['message'], data=result['peers'])

            else:
                if config.searchPeer(public_key)[0] is True:
                    return ResponseObject(False, f"This peer already exist")
                name = data.get("name", "")
                private_key = data.get("private_key", "")

                if len(public_key) == 0:
                    if len(private_key) == 0:
                        private_key = GenerateWireguardPrivateKey()[1]
                        public_key = GenerateWireguardPublicKey(private_key)[1]
                    else:
                        public_key = GenerateWireguardPublicKey(private_key)[1]
                else:
                    if len(private_key) > 0:
                        genPub = GenerateWireguardPublicKey(private_key)[1]
                        # Check if provided pubkey match provided private key
                        if public_key != genPub:
                            return ResponseObject(False, "Provided Public Key does not match provided Private Key")

                # if len(public_key) == 0 and len(private_key) == 0:
                #     private_key = GenerateWireguardPrivateKey()[1]
                #     public_key = GenerateWireguardPublicKey(private_key)[1]
                # elif len(public_key) == 0 and len(private_key) > 0:
                #     public_key = GenerateWireguardPublicKey(private_key)[1]

                if len(allowed_ips) == 0:
                    if ipStatus:
                        # Take the first available address of the first subnet
                        for subnet in availableIps.keys():
                            for ip in availableIps[subnet]:
                                allowed_ips = [ip]
                                break
                            break
                    else:
                        return ResponseObject(False, "No more available IP can assign")

                if allowed_ips_validation:
                    # Every requested address must fall inside one of the configuration's subnets
                    for i in allowed_ips:
                        found = False
                        for subnet in availableIps.keys():
                            network = ipaddress.ip_network(subnet, False)
                            ap = ipaddress.ip_network(i)
                            if network.version == ap.version and ap.subnet_of(network):
                                found = True

                        if not found:
                            return ResponseObject(False, f"This IP is not available: {i}")

                status, result = config.addPeers([
                    {
                        "name": name,
                        "id": public_key,
                        "private_key": private_key,
                        "allowed_ip": ','.join(allowed_ips),
                        "preshared_key": preshared_key,
                        "endpoint_allowed_ip": endpoint_allowed_ip,
                        "DNS": dns_addresses,
                        "mtu": mtu,
                        "keepalive": keep_alive,
                        "advanced_security": "off"
                    }]
                )
                return ResponseObject(status=status, message=result['message'], data=result['peers'])
        except Exception as e:
            print(e, str(e.__traceback__))
            return ResponseObject(False, "Add peers failed. Please see data for specific issue")

    return ResponseObject(False, "Configuration does not exist")
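The allowed-IP check inside `API_addPeers` can be isolated as a small standalone sketch, shown here outside the Flask handler. The helper name `unavailable_ips` and the sample subnets are hypothetical. Unlike the handler, this version parses the candidate with `strict=False`, so an address with host bits set is accepted instead of raising `ValueError`.

```python
import ipaddress


def unavailable_ips(allowed_ips: list[str], subnets: list[str]) -> list[str]:
    """Return the entries of allowed_ips that fit inside none of the given subnets."""
    networks = [ipaddress.ip_network(subnet, strict=False) for subnet in subnets]
    rejected = []
    for candidate in allowed_ips:
        address = ipaddress.ip_network(candidate, strict=False)
        # subnet_of() raises TypeError across IP versions, so compare versions first.
        fits = any(
            net.version == address.version and address.subnet_of(net)
            for net in networks
        )
        if not fits:
            rejected.append(candidate)
    return rejected


print(unavailable_ips(
    ["10.0.0.5/32", "192.168.1.1/32", "fd00::2/128"],
    ["10.0.0.1/24", "fd00::/64"],
))
```

Collecting all rejected entries, rather than stopping at the first one as the handler does, is a design choice for the example; it would let a caller report every bad address in one response.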

@app.get(f"{APP_PREFIX}/api/downloadPeer/<configName>")
def API_downloadPeer(configName):
    data = request.args
    if configName not in WireguardConfigurations.keys():
        return ResponseObject(False, "Configuration does not exist")
    configuration = WireguardConfigurations[configName]
    peerFound, peer = configuration.searchPeer(data['id'])
    if len(data['id']) == 0 or not peerFound:
        return ResponseObject(False, "Peer does not exist")
    return ResponseObject(data=peer.downloadPeer())

@app.get(f"{APP_PREFIX}/api/downloadAllPeers/<configName>")
def API_downloadAllPeers(configName):
    if configName not in WireguardConfigurations.keys():
        return ResponseObject(False, "Configuration does not exist")
    configuration = WireguardConfigurations[configName]
    peerData = []
    untitledPeer = 0
    for i in configuration.Peers:
        file = i.downloadPeer()
        if file["fileName"] == "UntitledPeer":
            file["fileName"] = str(untitledPeer) + "_" + file["fileName"]
            untitledPeer += 1
        peerData.append(file)
    return ResponseObject(data=peerData)

@app.get(f"{APP_PREFIX}/api/getAvailableIPs/<configName>")
def API_getAvailableIPs(configName):
    if configName not in WireguardConfigurations.keys():
        return ResponseObject(False, "Configuration does not exist")
    status, ips = WireguardConfigurations.get(configName).getAvailableIP()
    return ResponseObject(status=status, data=ips)

@app.get(f"{APP_PREFIX}/api/getNumberOfAvailableIPs/<configName>")
def API_getNumberOfAvailableIPs(configName):
    if configName not in WireguardConfigurations.keys():
        return ResponseObject(False, "Configuration does not exist")
    status, ips = WireguardConfigurations.get(configName).getNumberOfAvailableIP()
    return ResponseObject(status=status, data=ips)
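The bulk branch of `API_addPeers` walks the available-IP mapping subnet by subnet and stops once `bulkAddAmount` addresses have been taken. A minimal sketch of that selection step follows. It assumes the mapping is a dict from subnet string to a list of address strings, which is inferred from how `availableIps[subnet]` is iterated; `take_first_ips` and the sample data are hypothetical.

```python
from itertools import chain, islice


def take_first_ips(available: dict[str, list[str]], amount: int) -> list[str]:
    """Return up to `amount` addresses, walking subnets in insertion order."""
    # chain.from_iterable flattens the per-subnet lists lazily;
    # islice stops as soon as enough addresses have been produced.
    return list(islice(chain.from_iterable(available.values()), amount))


available = {
    "10.0.0.0/24": ["10.0.0.2/32", "10.0.0.3/32"],
    "10.1.0.0/24": ["10.1.0.2/32"],
}
print(take_first_ips(available, 3))
```

This replaces the nested loops and the two `break` statements with a single expression. If fewer addresses exist than requested, the result is simply shorter, so a caller would still need the length check the handler performs.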

@app.get(f'{APP_PREFIX}/api/getWireguardConfigurationInfo')
def API_getConfigurationInfo():
    configurationName = request.args.get("configurationName")
    if not configurationName or configurationName not in WireguardConfigurations.keys():
        return ResponseObject(False, "Please provide configuration name")
    return ResponseObject(data={
        "configurationInfo": WireguardConfigurations[configurationName],
        "configurationPeers": WireguardConfigurations[configurationName].getPeersList(),
        "configurationRestrictedPeers": WireguardConfigurations[configurationName].getRestrictedPeersList()
    })

@app.get(f'{APP_PREFIX}/api/getDashboardTheme')
def API_getDashboardTheme():
    return ResponseObject(data=DashboardConfig.GetConfig("Server", "dashboard_theme")[1])

@app.get(f'{APP_PREFIX}/api/getDashboardVersion')
def API_getDashboardVersion():
    return ResponseObject(data=DashboardConfig.GetConfig("Server", "version")[1])

@app.post(f'{APP_PREFIX}/api/savePeerScheduleJob')

def API_savePeerScheduleJob():

data = request.json

if "Job" not in data.keys():

return ResponseObject(False, "Please specify job")

job: dict = data['Job']

if "Peer" not in job.keys() or "Configuration" not in job.keys():

return ResponseObject(False, "Please specify peer and configuration")

configuration = WireguardConfigurations.get(job['Configuration'])

if configuration is None:

return ResponseObject(False, "Configuration does not exist")

f, fp = configuration.searchPeer(job['Peer'])

if not f:

return ResponseObject(False, "Peer does not exist")

s, p = AllPeerJobs.saveJob(PeerJob(

job['JobID'], job['Configuration'], job['Peer'], job['Field'], job['Operator'], job['Value'],

job['CreationDate'], job['ExpireDate'], job['Action']))

if s:

return ResponseObject(s, data=p)

return ResponseObject(s, message=p)

@app.post(f'{APP_PREFIX}/api/deletePeerScheduleJob')

def API_deletePeerScheduleJob():

data = request.json

if "Job" not in data.keys():

return ResponseObject(False, "Please specify job")

job: dict = data['Job']

if "Peer" not in job.keys() or "Configuration" not in job.keys():

return ResponseObject(False, "Please specify peer and configuration")

configuration = WireguardConfigurations.get(job['Configuration'])

if configuration is None:

return ResponseObject(False, "Configuration does not exist")

f, fp = configuration.searchPeer(job['Peer'])

if not f:

return ResponseObject(False, "Peer does not exist")

s, p = AllPeerJobs.deleteJob(PeerJob(

job['JobID'], job['Configuration'], job['Peer'], job['Field'], job['Operator'], job['Value'],

job['CreationDate'], job['ExpireDate'], job['Action']))

if s:

return ResponseObject(s, data=p)

return ResponseObject(s, message=p)

@app.get(f'{APP_PREFIX}/api/getPeerScheduleJobLogs/<configName>')

def API_getPeerScheduleJobLogs(configName):

if configName not in WireguardConfigurations.keys():

return ResponseObject(False, "Configuration does not exist")

data = request.args.get("requestAll")

requestAll = False

if data is not None and data == "true":

requestAll = True

return ResponseObject(data=JobLogger.getLogs(requestAll, configName))

'''

File Download

'''

@app.get(f'{APP_PREFIX}/fileDownload')

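def API_download():
    file = request.args.get('file')
    if file is None or len(file) == 0:
        return ResponseObject(False, "Please specify a file")
    # Refuse names that resolve outside the download directory (e.g. "../dashboard.py")
    downloadDir = os.path.abspath('download')
    requestedPath = os.path.abspath(os.path.join(downloadDir, file))
    if os.path.commonpath([downloadDir, requestedPath]) != downloadDir:
        return ResponseObject(False, "File does not exist")
    if os.path.exists(os.path.join('download', file)):
        return send_file(os.path.join('download', file), as_attachment=True)
    else:
        return ResponseObject(False, "File does not exist")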

'''

Tools

'''

@app.get(f'{APP_PREFIX}/api/ping/getAllPeersIpAddress')

def API_ping_getAllPeersIpAddress():
    ips = {}
    for c in WireguardConfigurations.values():
        cips = {}
        for p in c.Peers:
            allowed_ip = p.allowed_ip.replace(" ", "").split(",")
            parsed = []
            for x in allowed_ip:
                try:
                    ip = ipaddress.ip_network(x, strict=False)
                except ValueError as e:
                    print(f"{p.id} - {c.Name}")
                    # Skip invalid entries instead of reusing a stale or undefined `ip`
                    continue
                # Keep single-address networks only (/32 or /128), without
                # materializing the host list of large networks
                if ip.num_addresses == 1:
                    parsed.append(str(ip.network_address))
            endpoint = p.endpoint.replace(" ", "").replace("(none)", "")
            if len(p.name) > 0:
                cips[f"{p.name} - {p.id}"] = {
                    "allowed_ips": parsed,
                    "endpoint": endpoint
                }
            else:
                cips[f"{p.id}"] = {
                    "allowed_ips": parsed,
                    "endpoint": endpoint
                }
        ips[c.Name] = cips
    return ResponseObject(data=ips)

import requests

@app.get(f'{APP_PREFIX}/api/ping/execute')

def API_ping_execute():

if "ipAddress" in request.args.keys() and "count" in request.args.keys():

ip = request.args['ipAddress']

count = request.args['count']

try:

if ip is not None and len(ip) > 0 and count is not None and count.isnumeric():

result = ping(ip, count=int(count), source=None)

data = {

"address": result.address,

"is_alive": result.is_alive,

"min_rtt": result.min_rtt,

"avg_rtt": result.avg_rtt,

"max_rtt": result.max_rtt,

"package_sent": result.packets_sent,

"package_received": result.packets_received,

"package_loss": result.packet_loss,

"geo": None

}

try:

r = requests.get(f"http://ip-api.com/json/{result.address}?field=city")

data['geo'] = r.json()

except Exception as e:

pass

return ResponseObject(data=data)

return ResponseObject(False, "Please specify an IP Address (v4/v6)")

except Exception as exp:

return ResponseObject(False, str(exp))

return ResponseObject(False, "Please provide ipAddress and count")

@app.get(f'{APP_PREFIX}/api/traceroute/execute')

def API_traceroute_execute():
    if "ipAddress" in request.args.keys() and len(request.args.get("ipAddress")) > 0:
        ipAddress = request.args.get('ipAddress')
        try:
            tracerouteResult = traceroute(ipAddress, timeout=1, max_hops=64)
            result = []
            for hop in tracerouteResult:
                if len(result) > 1:
                    # Insert "*" placeholder rows for hops that did not answer
                    for i in range(result[-1]["hop"] + 1, hop.distance):
                        result.append(
                            {
                                "hop": i,
                                "ip": "*",
                                "avg_rtt": "*",
                                "min_rtt": "*",
                                "max_rtt": "*"
                            }
                        )
                result.append(
                    {
                        "hop": hop.distance,
                        "ip": hop.address,
                        "avg_rtt": hop.avg_rtt,
                        "min_rtt": hop.min_rtt,
                        "max_rtt": hop.max_rtt
                    })
            try:
                r = requests.post("http://ip-api.com/batch?fields=city,country,lat,lon,query",
                                  data=json.dumps([x['ip'] for x in result]))
                d = r.json()
                for i in range(len(result)):
                    result[i]['geo'] = d[i]
            except Exception as e:
                return ResponseObject(data=result, message="Failed to request IP address geolocation")
            return ResponseObject(data=result)
        except Exception as exp:
            return ResponseObject(False, str(exp))
    else:
        return ResponseObject(False, "Please provide ipAddress")

@app.get(f'{APP_PREFIX}/api/getDashboardUpdate')

def API_getDashboardUpdate():

import urllib.request as req

try:

r = req.urlopen("https://api.github.com/repos/donaldzou/WGDashboard/releases/latest", timeout=5).read()

data = dict(json.loads(r))

tagName = data.get('tag_name')

htmlUrl = data.get('html_url')

if tagName is not None and htmlUrl is not None:

if version.parse(tagName) > version.parse(DASHBOARD_VERSION):

return ResponseObject(message=f"{tagName} is now available for update!", data=htmlUrl)

else:

return ResponseObject(message="You're on the latest version")

return ResponseObject(False)

except Exception as e:

return ResponseObject(False, f"Request to GitHub API failed.")

'''

Sign Up

'''

@app.get(f'{APP_PREFIX}/api/isTotpEnabled')

def API_isTotpEnabled():

return (

ResponseObject(data=DashboardConfig.GetConfig("Account", "enable_totp")[1] and DashboardConfig.GetConfig("Account", "totp_verified")[1]))

@app.get(f'{APP_PREFIX}/api/Welcome_GetTotpLink')

def API_Welcome_GetTotpLink():

if not DashboardConfig.GetConfig("Account", "totp_verified")[1]:

DashboardConfig.SetConfig("Account", "totp_key", pyotp.random_base32(), True)

return ResponseObject(

data=pyotp.totp.TOTP(DashboardConfig.GetConfig("Account", "totp_key")[1]).provisioning_uri(

issuer_name="WGDashboard"))

return ResponseObject(False)

@app.post(f'{APP_PREFIX}/api/Welcome_VerifyTotpLink')

def API_Welcome_VerifyTotpLink():

data = request.get_json()

totp = pyotp.TOTP(DashboardConfig.GetConfig("Account", "totp_key")[1]).now()

if totp == data['totp']:

DashboardConfig.SetConfig("Account", "totp_verified", "true")

DashboardConfig.SetConfig("Account", "enable_totp", "true")

return ResponseObject(totp == data['totp'])

@app.post(f'{APP_PREFIX}/api/Welcome_Finish')

def API_Welcome_Finish():

data = request.get_json()

if DashboardConfig.GetConfig("Other", "welcome_session")[1]:

if data["username"] == "":

return ResponseObject(False, "Username cannot be blank.")

if data["newPassword"] == "" or len(data["newPassword"]) < 8:

return ResponseObject(False, "Password must be at least 8 characters")

updateUsername, updateUsernameErr = DashboardConfig.SetConfig("Account", "username", data["username"])

updatePassword, updatePasswordErr = DashboardConfig.SetConfig("Account", "password",

{

"newPassword": data["newPassword"],

"repeatNewPassword": data["repeatNewPassword"],

"currentPassword": "admin"

})

if not updateUsername or not updatePassword:

return ResponseObject(False, f"{updateUsernameErr},{updatePasswordErr}".strip(","))

DashboardConfig.SetConfig("Other", "welcome_session", False)

return ResponseObject()

class Locale:

def __init__(self):

self.localePath = './static/locale/'

self.activeLanguages = {}

with open(os.path.join(f"{self.localePath}active_languages.json"), "r") as f:

self.activeLanguages = sorted(json.loads(''.join(f.readlines())), key=lambda x : x['lang_name'])

def getLanguage(self) -> dict | None:

currentLanguage = DashboardConfig.GetConfig("Server", "dashboard_language")[1]

if currentLanguage == "en":

return None

if os.path.exists(os.path.join(f"{self.localePath}{currentLanguage}.json")):

with open(os.path.join(f"{self.localePath}{currentLanguage}.json"), "r") as f:

return dict(json.loads(''.join(f.readlines())))

else:

return None

def updateLanguage(self, lang_id):

if not os.path.exists(os.path.join(f"{self.localePath}{lang_id}.json")):

DashboardConfig.SetConfig("Server", "dashboard_language", "en")

else:

DashboardConfig.SetConfig("Server", "dashboard_language", lang_id)

Locale = Locale()

@app.get(f'{APP_PREFIX}/api/locale')

def API_Locale_CurrentLang():

return ResponseObject(data=Locale.getLanguage())

@app.get(f'{APP_PREFIX}/api/locale/available')

def API_Locale_Available():

return ResponseObject(data=Locale.activeLanguages)

@app.post(f'{APP_PREFIX}/api/locale/update')

def API_Locale_Update():

data = request.get_json()

if 'lang_id' not in data.keys():

return ResponseObject(False, "Please specify a lang_id")

Locale.updateLanguage(data['lang_id'])

return ResponseObject(data=Locale.getLanguage())

@app.get(f'{APP_PREFIX}/api/email/ready')

def API_Email_Ready():

return ResponseObject(EmailSender.ready())

@app.post(f'{APP_PREFIX}/api/email/send')

def API_Email_Send():

data = request.get_json()

if "Receiver" not in data.keys():

return ResponseObject(False, "Please at least specify receiver")

body = data.get('Body', '')

download = None

if ("ConfigurationName" in data.keys()

and "Peer" in data.keys()):

if data.get('ConfigurationName') in WireguardConfigurations.keys():

configuration = WireguardConfigurations.get(data.get('ConfigurationName'))

attachmentName = ""

if configuration is not None:

fp, p = configuration.searchPeer(data.get('Peer'))

if fp:

template = Template(body)

download = p.downloadPeer()

body = template.render(peer=p.toJson(), configurationFile=download)

if data.get('IncludeAttachment', False):

u = str(uuid4())

attachmentName = f'{u}.conf'

with open(os.path.join('./attachments', attachmentName,), 'w+') as f:

f.write(download['file'])

s, m = EmailSender.send(data.get('Receiver'), data.get('Subject', ''), body,

data.get('IncludeAttachment', False), (attachmentName if download else ''))

return ResponseObject(s, m)

@app.post(f'{APP_PREFIX}/api/email/previewBody')

def API_Email_PreviewBody():

data = request.get_json()

body = data.get('Body', '')

if len(body) == 0:

return ResponseObject(False, "Nothing to preview")

if ("ConfigurationName" not in data.keys()

or "Peer" not in data.keys() or data.get('ConfigurationName') not in WireguardConfigurations.keys()):

return ResponseObject(False, "Please specify configuration and peer")

configuration = WireguardConfigurations.get(data.get('ConfigurationName'))

fp, p = configuration.searchPeer(data.get('Peer'))

if not fp:

return ResponseObject(False, "Peer does not exist")

try:

template = Template(body)

download = p.downloadPeer()

body = template.render(peer=p.toJson(), configurationFile=download)

return ResponseObject(data=body)

except Exception as e:

return ResponseObject(False, message=str(e))

@app.get(f'{APP_PREFIX}/api/systemStatus')

def API_SystemStatus():

return ResponseObject(data=SystemStatus)

@app.get(f'{APP_PREFIX}/api/protocolsEnabled')

def API_ProtocolsEnabled():

return ResponseObject(data=ProtocolsEnabled())

@app.get(f'{APP_PREFIX}/')

def index():

return render_template('index.html')

def peerInformationBackgroundThread():

global WireguardConfigurations

print(f"[WGDashboard] Background Thread #1 Started", flush=True)

time.sleep(10)

while True:

with app.app_context():

try:

curKeys = list(WireguardConfigurations.keys())

for name in curKeys:

if name in WireguardConfigurations.keys() and WireguardConfigurations.get(name) is not None:

c = WireguardConfigurations.get(name)

if c.getStatus():

c.getPeersTransfer()

c.getPeersLatestHandshake()

c.getPeersEndpoint()

c.getPeersList()

c.getRestrictedPeersList()

except Exception as e:

print(f"[WGDashboard] Background Thread #1 Error: {str(e)}", flush=True)

time.sleep(10)

def peerJobScheduleBackgroundThread():

with app.app_context():

print(f"[WGDashboard] Background Thread #2 Started", flush=True)

time.sleep(10)

while True:

AllPeerJobs.runJob()

time.sleep(180)

def gunicornConfig():

_, app_ip = DashboardConfig.GetConfig("Server", "app_ip")

_, app_port = DashboardConfig.GetConfig("Server", "app_port")

return app_ip, app_port

def ProtocolsEnabled() -> list[str]:

from shutil import which

protocols = []

if which('awg') is not None and which('awg-quick') is not None:

protocols.append("awg")

if which('wg') is not None and which('wg-quick') is not None:

protocols.append("wg")

return protocols

def InitWireguardConfigurationsList(startup: bool = False):
    if os.path.exists(DashboardConfig.GetConfig("Server", "wg_conf_path")[1]):
        confs = os.listdir(DashboardConfig.GetConfig("Server", "wg_conf_path")[1])
        confs.sort()
        for i in confs:
            if RegexMatch("^(.{1,}).(conf)$", i):
                i = i.replace('.conf', '')
                try:
                    if i in WireguardConfigurations.keys():
                        if WireguardConfigurations[i].configurationFileChanged():
                            WireguardConfigurations[i] = WireguardConfiguration(i)
                    else:
                        WireguardConfigurations[i] = WireguardConfiguration(i, startup=startup)
                except WireguardConfiguration.InvalidConfigurationFileException as e:
                    print(f"{i} has an invalid configuration file.")

    if "awg" in ProtocolsEnabled():
        confs = os.listdir(DashboardConfig.GetConfig("Server", "awg_conf_path")[1])
        confs.sort()
        for i in confs:
            if RegexMatch("^(.{1,}).(conf)$", i):
                i = i.replace('.conf', '')
                try:
                    if i in WireguardConfigurations.keys():
                        if WireguardConfigurations[i].configurationFileChanged():
                            WireguardConfigurations[i] = AmneziaWireguardConfiguration(i)
                    else:
                        WireguardConfigurations[i] = AmneziaWireguardConfiguration(i, startup=startup)
                # The exception class lives on WireguardConfiguration, not on the
                # WireguardConfigurations dict
                except WireguardConfiguration.InvalidConfigurationFileException as e:
                    print(f"{i} has an invalid configuration file.")

AllPeerShareLinks: PeerShareLinks = PeerShareLinks()

AllPeerJobs: PeerJobs = PeerJobs()

JobLogger: PeerJobLogger = PeerJobLogger(CONFIGURATION_PATH, AllPeerJobs)

DashboardLogger: DashboardLogger = DashboardLogger(CONFIGURATION_PATH)

_, app_ip = DashboardConfig.GetConfig("Server", "app_ip")

_, app_port = DashboardConfig.GetConfig("Server", "app_port")

_, WG_CONF_PATH = DashboardConfig.GetConfig("Server", "wg_conf_path")

WireguardConfigurations: dict[str, WireguardConfiguration] = {}

AmneziaWireguardConfigurations: dict[str, AmneziaWireguardConfiguration] = {}

InitWireguardConfigurationsList(startup=True)

def startThreads():

bgThread = threading.Thread(target=peerInformationBackgroundThread, daemon=True)

bgThread.start()

scheduleJobThread = threading.Thread(target=peerJobScheduleBackgroundThread, daemon=True)

scheduleJobThread.start()

if __name__ == "__main__":

startThreads()

app.run(host=app_ip, debug=False, port=app_port)

```
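
The traceroute endpoint in `dashboard.py` pads its result with `"*"` rows when a hop number is missing from the reply. A minimal standalone sketch of that idea follows. The function name `fill_missing_hops` and the `(distance, address)` tuple input are assumptions made for illustration, and the sketch fills gaps from the very first hop, whereas the endpoint only starts once two rows exist.

```python
def fill_missing_hops(hops):
    """Return one row per hop number, adding "*" rows for silent hops.

    `hops` is a list of (distance, address) tuples sorted by distance.
    This input shape is hypothetical; the dashboard works on hop objects.
    """
    rows = []
    for distance, address in hops:
        # Hop numbers start at 1, so everything between the previous row
        # and this hop did not answer and gets a placeholder row.
        previous = rows[-1]["hop"] if rows else 0
        for missing in range(previous + 1, distance):
            rows.append({"hop": missing, "ip": "*"})
        rows.append({"hop": distance, "ip": address})
    return rows


if __name__ == "__main__":
    for row in fill_missing_hops([(1, "10.0.0.1"), (2, "10.0.0.2"), (5, "203.0.113.7")]):
        print(row)
```

Keeping placeholder rows means the list index and the hop number stay aligned, which is convenient when a later step attaches per-hop data by position.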

## /src/gunicorn.conf.py

```py path="/src/gunicorn.conf.py"

import os.path

import dashboard, configparser

from datetime import datetime

global sqldb, cursor, DashboardConfig, WireguardConfigurations, AllPeerJobs, JobLogger

app_host, app_port = dashboard.gunicornConfig()

date = datetime.today().strftime('%Y_%m_%d_%H_%M_%S')

def post_worker_init(worker):

dashboard.startThreads()

worker_class = 'gthread'

workers = 1

threads = 1

bind = f"{app_host}:{app_port}"

daemon = True

pidfile = './gunicorn.pid'

wsgi_app = "dashboard:app"

accesslog = f"./log/access_{date}.log"

log_level = "debug"

capture_output = True

errorlog = f"./log/error_{date}.log"

pythonpath = "., ./modules"

print(f"[Gunicorn] WGDashboard w/ Gunicorn will be running on {bind}", flush=True)

print(f"[Gunicorn] Access log file is at {accesslog}", flush=True)

print(f"[Gunicorn] Error log file is at {errorlog}", flush=True)

```

## /src/modules/DashboardLogger.py

```py path="/src/modules/DashboardLogger.py"

"""

Dashboard Logger Class

"""

import sqlite3, os, uuid

class DashboardLogger:

def __init__(self, CONFIGURATION_PATH):

self.loggerdb = sqlite3.connect(os.path.join(CONFIGURATION_PATH, 'db', 'wgdashboard_log.db'),

isolation_level=None,

check_same_thread=False)

self.loggerdb.row_factory = sqlite3.Row

self.__createLogDatabase()

self.log(Message="WGDashboard started")

def __createLogDatabase(self):

with self.loggerdb:

loggerdbCursor = self.loggerdb.cursor()

existingTable = loggerdbCursor.execute("SELECT name from sqlite_master where type='table'").fetchall()

existingTable = [t['name'] for t in existingTable]

if "DashboardLog" not in existingTable:

loggerdbCursor.execute(

"CREATE TABLE DashboardLog (LogID VARCHAR NOT NULL, LogDate DATETIME DEFAULT (strftime('%Y-%m-%d %H:%M:%S','now', 'localtime')), URL VARCHAR, IP VARCHAR, Status VARCHAR, Message VARCHAR, PRIMARY KEY (LogID))")

if self.loggerdb.in_transaction:

self.loggerdb.commit()

def log(self, URL: str = "", IP: str = "", Status: str = "true", Message: str = "") -> bool:

try:

loggerdbCursor = self.loggerdb.cursor()

loggerdbCursor.execute(

"INSERT INTO DashboardLog (LogID, URL, IP, Status, Message) VALUES (?, ?, ?, ?, ?);", (str(uuid.uuid4()), URL, IP, Status, Message,))

loggerdbCursor.close()

self.loggerdb.commit()

return True

except Exception as e:

print(f"[WGDashboard] Access Log Error: {str(e)}")

return False

```
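
The logger above creates its table on first use and writes one row per event. The sketch below shows the same pattern against an in-memory SQLite database so it can be run without the dashboard. The helper names `open_log_db` and `write_log` are hypothetical, and `CREATE TABLE IF NOT EXISTS` is swapped in for the `sqlite_master` lookup used by the class.

```python
import sqlite3
import uuid


def open_log_db(path=":memory:"):
    """Open the database and create the log table if it is missing."""
    # isolation_level=None puts the connection in autocommit mode.
    db = sqlite3.connect(path, isolation_level=None, check_same_thread=False)
    db.row_factory = sqlite3.Row
    db.execute(
        "CREATE TABLE IF NOT EXISTS DashboardLog ("
        "LogID VARCHAR NOT NULL, "
        "LogDate DATETIME DEFAULT (strftime('%Y-%m-%d %H:%M:%S','now','localtime')), "
        "URL VARCHAR, IP VARCHAR, Status VARCHAR, Message VARCHAR, "
        "PRIMARY KEY (LogID))"
    )
    return db


def write_log(db, url="", ip="", status="true", message=""):
    """Insert one row; values are bound as parameters, not interpolated."""
    db.execute(
        "INSERT INTO DashboardLog (LogID, URL, IP, Status, Message) VALUES (?, ?, ?, ?, ?)",
        (str(uuid.uuid4()), url, ip, status, message),
    )


if __name__ == "__main__":
    db = open_log_db()
    write_log(db, message="WGDashboard started")
    row = db.execute("SELECT Message, LogDate FROM DashboardLog").fetchone()
    print(row["Message"], row["LogDate"])
```

Because `LogDate` has a column default, callers never pass a timestamp; SQLite fills it in at insert time.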

## /src/modules/Email.py

```py path="/src/modules/Email.py"

import os.path

import smtplib

from email import encoders

from email.header import Header

from email.mime.base import MIMEBase

from email.mime.multipart import MIMEMultipart

from email.mime.text import MIMEText

from email.utils import formataddr

class EmailSender:

def __init__(self, DashboardConfig):

self.smtp = None

self.DashboardConfig = DashboardConfig

if not os.path.exists('./attachments'):

os.mkdir('./attachments')

def Server(self):

return self.DashboardConfig.GetConfig("Email", "server")[1]

def Port(self):

return self.DashboardConfig.GetConfig("Email", "port")[1]

def Encryption(self):

return self.DashboardConfig.GetConfig("Email", "encryption")[1]

def Username(self):

return self.DashboardConfig.GetConfig("Email", "username")[1]

def Password(self):

return self.DashboardConfig.GetConfig("Email", "email_password")[1]

def SendFrom(self):

return self.DashboardConfig.GetConfig("Email", "send_from")[1]

def ready(self):

return len(self.Server()) > 0 and len(self.Port()) > 0 and len(self.Encryption()) > 0 and len(self.Username()) > 0 and len(self.Password()) > 0 and len(self.SendFrom()) > 0

def send(self, receiver, subject, body, includeAttachment = False, attachmentName = ""):

if self.ready():

try:

self.smtp = smtplib.SMTP(self.Server(), port=int(self.Port()))

self.smtp.ehlo()

if self.Encryption() == "STARTTLS":

self.smtp.starttls()

self.smtp.login(self.Username(), self.Password())

message = MIMEMultipart()

message['Subject'] = subject

message['From'] = self.SendFrom()

message["To"] = receiver

message.attach(MIMEText(body, "plain"))

if includeAttachment and len(attachmentName) > 0:

attachmentPath = os.path.join('./attachments', attachmentName)

if os.path.exists(attachmentPath):

attachment = MIMEBase("application", "octet-stream")

with open(os.path.join('./attachments', attachmentName), 'rb') as f:

attachment.set_payload(f.read())

encoders.encode_base64(attachment)

attachment.add_header("Content-Disposition", f"attachment; filename= {attachmentName}",)

message.attach(attachment)

else:

self.smtp.close()

return False, "Attachment does not exist"

self.smtp.sendmail(self.SendFrom(), receiver, message.as_string())

self.smtp.close()

return True, None

except Exception as e:

return False, f"Send failed | Reason: {e}"

return False, "SMTP not configured"

```
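
The message assembly inside `send` can be tried without an SMTP server. The following sketch builds the same kind of multipart message in memory; `build_message` and its parameters are hypothetical names, and the attachment is passed as bytes rather than read from `./attachments`.

```python
from email import encoders
from email.mime.base import MIMEBase
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def build_message(sender, receiver, subject, body,
                  attachment_name=None, attachment_bytes=None):
    """Build a plain-text message with an optional binary attachment."""
    message = MIMEMultipart()
    message["Subject"] = subject
    message["From"] = sender
    message["To"] = receiver
    message.attach(MIMEText(body, "plain"))
    if attachment_name and attachment_bytes is not None:
        part = MIMEBase("application", "octet-stream")
        part.set_payload(attachment_bytes)
        # Base64 keeps arbitrary bytes safe for transport.
        encoders.encode_base64(part)
        # Passing filename as a keyword lets the library quote it correctly.
        part.add_header("Content-Disposition", "attachment", filename=attachment_name)
        message.attach(part)
    return message


if __name__ == "__main__":
    msg = build_message("wg@example.com", "user@example.com", "Your peer",
                        "Configuration attached.", "peer.conf", b"[Interface]\n")
    print(msg.as_string()[:200])
```

The resulting object can be handed to `smtplib.SMTP.sendmail` via `as_string()`, which is what the class above does after logging in.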

## /src/modules/Log.py

```py path="/src/modules/Log.py"

"""

Log Class

"""

class Log:

def __init__(self, LogID: str, JobID: str, LogDate: str, Status: str, Message: str):

self.LogID = LogID

self.JobID = JobID

self.LogDate = LogDate

self.Status = Status

self.Message = Message

def toJson(self):

return {

"LogID": self.LogID,

"JobID": self.JobID,

"LogDate": self.LogDate,

"Status": self.Status,

"Message": self.Message

}

def __dict__(self):

return self.toJson()

```

## /src/modules/PeerJob.py

```py path="/src/modules/PeerJob.py"

"""

Peer Job

"""

from datetime import datetime

class PeerJob:

def __init__(self, JobID: str, Configuration: str, Peer: str,

Field: str, Operator: str, Value: str, CreationDate: datetime, ExpireDate: datetime, Action: str):

self.Action = Action

self.ExpireDate = ExpireDate

self.CreationDate = CreationDate

self.Value = Value

self.Operator = Operator

self.Field = Field

self.Configuration = Configuration

self.Peer = Peer

self.JobID = JobID

def toJson(self):

return {

"JobID": self.JobID,

"Configuration": self.Configuration,

"Peer": self.Peer,

"Field": self.Field,

"Operator": self.Operator,

"Value": self.Value,

"CreationDate": self.CreationDate,

"ExpireDate": self.ExpireDate,

"Action": self.Action

}

def __dict__(self):

return self.toJson()

```

## /src/modules/PeerJobLogger.py

```py path="/src/modules/PeerJobLogger.py"

"""

Peer Job Logger

"""

import sqlite3, os, uuid

from .Log import Log

class PeerJobLogger:

def __init__(self, CONFIGURATION_PATH, AllPeerJobs):

self.loggerdb = sqlite3.connect(os.path.join(CONFIGURATION_PATH, 'db', 'wgdashboard_log.db'),

check_same_thread=False)

self.loggerdb.row_factory = sqlite3.Row

self.logs: list[Log] = []

self.__createLogDatabase()

self.AllPeerJobs = AllPeerJobs

def __createLogDatabase(self):

with self.loggerdb:

loggerdbCursor = self.loggerdb.cursor()

existingTable = loggerdbCursor.execute("SELECT name from sqlite_master where type='table'").fetchall()

existingTable = [t['name'] for t in existingTable]

if "JobLog" not in existingTable:

loggerdbCursor.execute("CREATE TABLE JobLog (LogID VARCHAR NOT NULL, JobID NOT NULL, LogDate DATETIME DEFAULT (strftime('%Y-%m-%d %H:%M:%S','now', 'localtime')), Status VARCHAR NOT NULL, Message VARCHAR, PRIMARY KEY (LogID))")

if self.loggerdb.in_transaction:

self.loggerdb.commit()

def log(self, JobID: str, Status: bool = True, Message: str = "") -> bool:

try:

with self.loggerdb:

loggerdbCursor = self.loggerdb.cursor()

loggerdbCursor.execute(f"INSERT INTO JobLog (LogID, JobID, Status, Message) VALUES (?, ?, ?, ?)",

(str(uuid.uuid4()), JobID, Status, Message,))

if self.loggerdb.in_transaction:

self.loggerdb.commit()

except Exception as e:

print(f"[WGDashboard] Peer Job Log Error: {str(e)}")

return False

return True

    def getLogs(self, all: bool = False, configName = None) -> list[Log]:
        logs: list[Log] = []
        try:
            allJobs = self.AllPeerJobs.getAllJobs(configName)
            # Bind job IDs as query parameters instead of interpolating them into the SQL
            placeholders = ", ".join("?" for _ in allJobs)
            with self.loggerdb:
                loggerdbCursor = self.loggerdb.cursor()
                table = loggerdbCursor.execute(
                    f"SELECT * FROM JobLog WHERE JobID IN ({placeholders}) ORDER BY LogDate DESC",
                    [x.JobID for x in allJobs]).fetchall()
                self.logs.clear()
                for l in table:
                    logs.append(
                        Log(l["LogID"], l["JobID"], l["LogDate"], l["Status"], l["Message"]))
        except Exception as e:
            return logs
        return logs

```
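
Selecting log rows for a set of job IDs needs an `IN (...)` list whose length is only known at run time. One common approach, sketched here under the assumption of a plain `sqlite3` connection, is to generate one `?` placeholder per ID and pass the IDs as parameters. The function name `logs_for_jobs` is hypothetical.

```python
import sqlite3


def logs_for_jobs(db, job_ids):
    """Return JobLog rows for the given job IDs, newest first."""
    job_ids = list(job_ids)
    if not job_ids:
        return []
    # Only the placeholders are formatted into the SQL text;
    # the IDs themselves are bound by the driver.
    placeholders = ", ".join("?" for _ in job_ids)
    query = f"SELECT * FROM JobLog WHERE JobID IN ({placeholders}) ORDER BY LogDate DESC"
    return db.execute(query, job_ids).fetchall()


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.row_factory = sqlite3.Row
    db.execute("CREATE TABLE JobLog (LogID, JobID, LogDate, Status, Message)")
    db.execute("INSERT INTO JobLog VALUES ('l1', 'job-a', '2024-01-01 00:00:00', '1', 'ran')")
    for row in logs_for_jobs(db, ["job-a"]):
        print(dict(row))
```

With bound parameters, an ID containing a quote character is matched literally instead of changing the shape of the query.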

## /src/modules/SystemStatus.py

```py path="/src/modules/SystemStatus.py"

import psutil

class SystemStatus:

def __init__(self):

self.CPU = CPU()

self.MemoryVirtual = Memory('virtual')

self.MemorySwap = Memory('swap')

self.Disks = Disks()

self.NetworkInterfaces = NetworkInterfaces()

self.Processes = Processes()

def toJson(self):

return {

"CPU": self.CPU,

"Memory": {

"VirtualMemory": self.MemoryVirtual,

"SwapMemory": self.MemorySwap

},

"Disks": self.Disks,

"NetworkInterfaces": self.NetworkInterfaces,

"Processes": self.Processes

}

class CPU:

def __init__(self):

self.cpu_percent: float = 0

self.cpu_percent_per_cpu: list[float] = []

def getData(self):

try:

self.cpu_percent_per_cpu = psutil.cpu_percent(interval=0.5, percpu=True)

self.cpu_percent = psutil.cpu_percent(interval=0.5)

except Exception as e:

pass

def toJson(self):

self.getData()

return self.__dict__

class Memory:

def __init__(self, memoryType: str):

self.__memoryType__ = memoryType

self.total = 0

self.available = 0

self.percent = 0

    def getData(self):
        try:
            if self.__memoryType__ == "virtual":
                memory = psutil.virtual_memory()
                self.available = memory.available
            else:
                memory = psutil.swap_memory()
                # psutil's swap result has no `available` field; `free` is the closest equivalent
                self.available = memory.free
            self.total = memory.total
            self.percent = memory.percent
        except Exception as e:
            pass

def toJson(self):

self.getData()

return self.__dict__

class Disks:

def __init__(self):

self.disks : list[Disk] = []

def getData(self):

try:

self.disks = list(map(lambda x : Disk(x.mountpoint), psutil.disk_partitions()))

except Exception as e:

pass

def toJson(self):

self.getData()

return self.disks

class Disk:

def __init__(self, mountPoint: str):

self.total = 0

self.used = 0

self.free = 0

self.percent = 0

self.mountPoint = mountPoint

def getData(self):

try:

disk = psutil.disk_usage(self.mountPoint)

self.total = disk.total

self.free = disk.free

self.used = disk.used

self.percent = disk.percent

except Exception as e:

pass

def toJson(self):

self.getData()

return self.__dict__

class NetworkInterfaces:

def __init__(self):

self.interfaces = {}

def getData(self):

try:

network = psutil.net_io_counters(pernic=True, nowrap=True)

for i in network.keys():

self.interfaces[i] = network[i]._asdict()

except Exception as e:

pass

def toJson(self):

self.getData()

return self.interfaces

class Process:

def __init__(self, name, command, pid, percent):

self.name = name

self.command = command

self.pid = pid

self.percent = percent

def toJson(self):

return self.__dict__

class Processes:

def __init__(self):

self.CPU_Top_10_Processes: list[Process] = []

self.Memory_Top_10_Processes: list[Process] = []

def getData(self):

while True:

try:

processes = list(psutil.process_iter())

self.CPU_Top_10_Processes = sorted(

list(map(lambda x : Process(x.name(), " ".join(x.cmdline()), x.pid, x.cpu_percent()), processes)),

key=lambda x : x.percent, reverse=True)[:20]

self.Memory_Top_10_Processes = sorted(

list(map(lambda x : Process(x.name(), " ".join(x.cmdline()), x.pid, x.memory_percent()), processes)),

key=lambda x : x.percent, reverse=True)[:20]

break

except Exception as e:

break

def toJson(self):

self.getData()

return {

"cpu_top_10": self.CPU_Top_10_Processes,

"memory_top_10": self.Memory_Top_10_Processes

}

```
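
The classes above return nested objects from `toJson()` (for example, `Disks.toJson()` returns a list of `Disk` instances), so whatever serializes them has to call `toJson()` recursively. How the dashboard does this is not shown in this file; the sketch below is one possible approach using a `json.JSONEncoder` subclass. `ToJsonEncoder` is a hypothetical name, and the two stub classes use fixed values instead of `psutil` readings.

```python
import json


class ToJsonEncoder(json.JSONEncoder):
    """Hypothetical encoder: serialize any object that exposes toJson()."""

    def default(self, o):
        if hasattr(o, "toJson"):
            # The returned value is encoded again, so nested objects
            # with their own toJson() are handled as well.
            return o.toJson()
        return super().default(o)


class Disk:
    def __init__(self, mountPoint, percent):
        self.mountPoint = mountPoint
        self.percent = percent

    def toJson(self):
        return self.__dict__


class Disks:
    def __init__(self):
        self.disks = [Disk("/", 41.5), Disk("/boot", 12.0)]

    def toJson(self):
        # A list of Disk objects; each one is encoded in turn.
        return self.disks


if __name__ == "__main__":
    print(json.dumps({"Disks": Disks()}, cls=ToJsonEncoder))
```

An object without `toJson()` still raises `TypeError` through the base class, which makes unsupported types visible instead of silently dropping them.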

## /src/requirements.txt

bcrypt

ifcfg

psutil

pyotp

Flask

flask-cors

icmplib

gunicorn

requests

tcconfig

## /src/static/app/.gitignore

```gitignore path="/src/static/app/.gitignore"

# Logs

logs

*.log

npm-debug.log*

yarn-debug.log*

yarn-error.log*

pnpm-debug.log*

lerna-debug.log*

node_modules

.DS_Store

dist-ssr

coverage

*.local

/cypress/videos/

/cypress/screenshots/

# Editor directories and files

.vscode/*

!.vscode/extensions.json

.idea

*.suo

*.ntvs*

*.njsproj

*.sln

*.sw?

*.tsbuildinfo

.vite/*

```

## /src/static/app/build.sh

```sh path="/src/static/app/build.sh"

#!/bin/bash

echo "Running vite build..."

if vite build; then

echo "Vite build successful."

else

echo "Vite build failed. Exiting."

exit 1

fi

echo "Checking for changes to commit..."

if ! git diff-index --quiet HEAD --; then

if git commit -a; then

echo "Git commit successful."

else

echo "Git commit failed. Exiting."

exit 1

fi

else

echo "No changes to commit. Skipping commit."

fi

echo "Pushing changes to remote..."

if git push; then

echo "Git push successful."

else

echo "Git push failed. Exiting."

exit 1

fi

```

## /src/static/app/dist/assets/bootstrap-icons-BOrJxbIo.woff

Binary file available at https://raw.githubusercontent.com/donaldzou/WGDashboard/refs/heads/main/src/static/app/dist/assets/bootstrap-icons-BOrJxbIo.woff

## /src/static/app/dist/assets/bootstrap-icons-BtvjY1KL.woff2

Binary file available at https://raw.githubusercontent.com/donaldzou/WGDashboard/refs/heads/main/src/static/app/dist/assets/bootstrap-icons-BtvjY1KL.woff2

## /src/static/app/dist/assets/browser-CjSdxGTc.js

```js path="/src/static/app/dist/assets/browser-CjSdxGTc.js"

var O={},bt=function(){return typeof Promise=="function"&&Promise.prototype&&Promise.prototype.then},dt={},I={};let it;const Rt=[0,26,44,70,100,134,172,196,242,292,346,404,466,532,581,655,733,815,901,991,1085,1156,1258,1364,1474,1588,1706,1828,1921,2051,2185,2323,2465,2611,2761,2876,3034,3196,3362,3532,3706];I.getSymbolSize=function(t){if(!t)throw new Error('"version" cannot be null or undefined');if(t<1||t>40)throw new Error('"version" should be in range from 1 to 40');return t*4+17};I.getSymbolTotalCodewords=function(t){return Rt[t]};I.getBCHDigit=function(e){let t=0;for(;e!==0;)t++,e>>>=1;return t};I.setToSJISFunction=function(t){if(typeof t!="function")throw new Error('"toSJISFunc" is not a valid function.');it=t};I.isKanjiModeEnabled=function(){return typeof it<"u"};I.toSJIS=function(t){return it(t)};var $={};(function(e){e.L={bit:1},e.M={bit:0},e.Q={bit:3},e.H={bit:2};function t(i){if(typeof i!="string")throw new Error("Param is not a string");switch(i.toLowerCase()){case"l":case"low":return e.L;case"m":case"medium":return e.M;case"q":case"quartile":return e.Q;case"h":case"high":return e.H;default:throw new Error("Unknown EC Level: "+i)}}e.isValid=function(o){return o&&typeof o.bit<"u"&&o.bit>=0&&o.bit<4},e.from=function(o,n){if(e.isValid(o))return o;try{return t(o)}catch{return n}}})($);function ht(){this.buffer=[],this.length=0}ht.prototype={get:function(e){const t=Math.floor(e/8);return(this.buffer[t]>>>7-e%8&1)===1},put:function(e,t){for(let i=0;i>>t-i-1&1)===1)},getLengthInBits:function(){return this.length},putBit:function(e){const t=Math.floor(this.length/8);this.buffer.length<=t&&this.buffer.push(0),e&&(this.buffer[t]|=128>>>this.length%8),this.length++}};var Lt=ht;function V(e){if(!e||e<1)throw new Error("BitMatrix size must be defined and greater than 0");this.size=e,this.data=new Uint8Array(e*e),this.reservedBit=new Uint8Array(e*e)}V.prototype.set=function(e,t,i,o){const 
n=e*this.size+t;this.data[n]=i,o&&(this.reservedBit[n]=!0)};V.prototype.get=function(e,t){return this.data[e*this.size+t]};V.prototype.xor=function(e,t,i){this.data[e*this.size+t]^=i};V.prototype.isReserved=function(e,t){return this.reservedBit[e*this.size+t]};var _t=V,wt={};(function(e){const t=I.getSymbolSize;e.getRowColCoords=function(o){if(o===1)return[];const n=Math.floor(o/7)+2,r=t(o),s=r===145?26:Math.ceil((r-13)/(2*n-2))*2,c=[r-7];for(let u=1;u=0&&n<=7},e.from=function(n){return e.isValid(n)?parseInt(n,10):void 0},e.getPenaltyN1=function(n){const r=n.size;let s=0,c=0,u=0,a=null,l=null;for(let p=0;p=5&&(s+=t.N1+(c-5)),a=f,c=1),f=n.get(w,p),f===l?u++:(u>=5&&(s+=t.N1+(u-5)),l=f,u=1)}c>=5&&(s+=t.N1+(c-5)),u>=5&&(s+=t.N1+(u-5))}return s},e.getPenaltyN2=function(n){const r=n.size;let s=0;for(let c=0;c=10&&(c===1488||c===93)&&s++,u=u<<1&2047|n.get(l,a),l>=10&&(u===1488||u===93)&&s++}return s*t.N3},e.getPenaltyN4=function(n){let r=0;const s=n.data.length;for(let u=0;u=0;){const s=r[0];for(let u=0;u0){const r=new Uint8Array(this.degree);return r.set(o,n),r}return o};var Ut=st,pt={},L={},ut={};ut.isValid=function(t){return!isNaN(t)&&t>=1&&t<=40};var P={};const Bt="[0-9]+",Ft="[A-Z $%*+\\-./:]+";let v="(?:[u3000-u303F]|[u3040-u309F]|[u30A0-u30FF]|[uFF00-uFFEF]|[u4E00-u9FAF]|[u2605-u2606]|[u2190-u2195]|u203B|[u2010u2015u2018u2019u2025u2026u201Cu201Du2225u2260]|[u0391-u0451]|[u00A7u00A8u00B1u00B4u00D7u00F7])+";v=v.replace(/u/g,"\\u");const kt="(?:(?![A-Z0-9 $%*+\\-./:]|"+v+`)(?:.|[\r

]))+`;P.KANJI=new RegExp(v,"g");P.BYTE_KANJI=new RegExp("[^A-Z0-9 $%*+\\-./:]+","g");P.BYTE=new RegExp(kt,"g");P.NUMERIC=new RegExp(Bt,"g");P.ALPHANUMERIC=new RegExp(Ft,"g");const zt=new RegExp("^"+v+"$"),vt=new RegExp("^"+Bt+"$"),Vt=new RegExp("^[A-Z0-9 $%*+\\-./:]+$");P.testKanji=function(t){return zt.test(t)};P.testNumeric=function(t){return vt.test(t)};P.testAlphanumeric=function(t){return Vt.test(t)};(function(e){const t=ut,i=P;e.NUMERIC={id:"Numeric",bit:1,ccBits:[10,12,14]},e.ALPHANUMERIC={id:"Alphanumeric",bit:2,ccBits:[9,11,13]},e.BYTE={id:"Byte",bit:4,ccBits:[8,16,16]},e.KANJI={id:"Kanji",bit:8,ccBits:[8,10,12]},e.MIXED={bit:-1},e.getCharCountIndicator=function(r,s){if(!r.ccBits)throw new Error("Invalid mode: "+r);if(!t.isValid(s))throw new Error("Invalid version: "+s);return s>=1&&s<10?r.ccBits[0]:s<27?r.ccBits[1]:r.ccBits[2]},e.getBestModeForData=function(r){return i.testNumeric(r)?e.NUMERIC:i.testAlphanumeric(r)?e.ALPHANUMERIC:i.testKanji(r)?e.KANJI:e.BYTE},e.toString=function(r){if(r&&r.id)return r.id;throw new Error("Invalid mode")},e.isValid=function(r){return r&&r.bit&&r.ccBits};function o(n){if(typeof n!="string")throw new Error("Param is not a string");switch(n.toLowerCase()){case"numeric":return e.NUMERIC;case"alphanumeric":return e.ALPHANUMERIC;case"kanji":return e.KANJI;case"byte":return e.BYTE;default:throw new Error("Unknown mode: "+n)}}e.from=function(r,s){if(e.isValid(r))return r;try{return o(r)}catch{return s}}})(L);(function(e){const t=I,i=j,o=$,n=L,r=ut,s=7973,c=t.getBCHDigit(s);function u(w,f,m){for(let y=1;y<=40;y++)if(f<=e.getCapacity(y,m,w))return y}function a(w,f){return n.getCharCountIndicator(w,f)+4}function l(w,f){let m=0;return w.forEach(function(y){const T=a(y.mode,f);m+=T+y.getBitsLength()}),m}function p(w,f){for(let m=1;m<=40;m++)if(l(w,m)<=e.getCapacity(m,f,n.MIXED))return m}e.from=function(f,m){return r.isValid(f)?parseInt(f,10):m},e.getCapacity=function(f,m,y){if(!r.isValid(f))throw new Error("Invalid QR Code 
version");typeof y>"u"&&(y=n.BYTE);const T=t.getSymbolTotalCodewords(f),h=i.getTotalCodewordsCount(f,m),E=(T-h)*8;if(y===n.MIXED)return E;const d=E-a(y,f);switch(y){case n.NUMERIC:return Math.floor(d/10*3);case n.ALPHANUMERIC:return Math.floor(d/11*2);case n.KANJI:return Math.floor(d/13);case n.BYTE:default:return Math.floor(d/8)}},e.getBestVersionForData=function(f,m){let y;const T=o.from(m,o.M);if(Array.isArray(f)){if(f.length>1)return p(f,T);if(f.length===0)return 1;y=f[0]}else y=f;return u(y.mode,y.getLength(),T)},e.getEncodedBits=function(f){if(!r.isValid(f)||f<7)throw new Error("Invalid QR Code version");let m=f<<12;for(;t.getBCHDigit(m)-c>=0;)m^=s<=0;)n^=Tt<0&&(o=this.data.substr(i),n=parseInt(o,10),t.put(n,r*3+1))};var Jt=_;const Yt=L,W=["0","1","2","3","4","5","6","7","8","9","A","B","C","D","E","F","G","H","I","J","K","L","M","N","O","P","Q","R","S","T","U","V","W","X","Y","Z"," ","$","%","*","+","-",".","/",":"];function D(e){this.mode=Yt.ALPHANUMERIC,this.data=e}D.getBitsLength=function(t){return 11*Math.floor(t/2)+6*(t%2)};D.prototype.getLength=function(){return this.data.length};D.prototype.getBitsLength=function(){return D.getBitsLength(this.data.length)};D.prototype.write=function(t){let i;for(i=0;i+2<=this.data.length;i+=2){let o=W.indexOf(this.data[i])*45;o+=W.indexOf(this.data[i+1]),t.put(o,11)}this.data.length%2&&t.put(W.indexOf(this.data[i]),6)};var Ot=D;const $t=L;function U(e){this.mode=$t.BYTE,typeof e=="string"?this.data=new TextEncoder().encode(e):this.data=new Uint8Array(e)}U.getBitsLength=function(t){return t*8};U.prototype.getLength=function(){return this.data.length};U.prototype.getBitsLength=function(){return U.getBitsLength(this.data.length)};U.prototype.write=function(e){for(let t=0,i=this.data.length;t=33088&&i<=40956)i-=33088;else if(i>=57408&&i<=60351)i-=49472;else throw new Error("Invalid SJIS character: "+this.data[t]+`